AI Ethics in Future Tech: Who Makes the Rules?

As artificial intelligence becomes deeply woven into the fabric of future technology, ethical questions around its development and deployment grow more urgent. Who holds the power to decide what's right or wrong for machines? This article explores the evolving landscape of AI ethics, the stakeholders shaping the rules, and the global efforts to ensure AI serves humanity fairly and responsibly.

✨ Raghav Jain

The Rise of AI and the Ethical Imperative

The advancement of artificial intelligence (AI) is not just a technological revolution; it is a philosophical, ethical, and societal transformation. As AI begins to outperform humans in tasks like pattern recognition, decision-making, and even creative problem-solving, it no longer merely serves as a tool but increasingly acts as an autonomous agent influencing lives, economies, and geopolitical balance.

With AI systems being deployed in law enforcement, healthcare, military operations, finance, and social media, the stakes have never been higher. These systems can automate decisions about who gets a job, who gets bail, or who is targeted in a drone strike. They may be trained on biased data or manipulated to serve certain political or economic agendas. Given this power, the fundamental question arises: who decides what’s ethical in AI? Who makes the rules?

What Does AI Ethics Really Mean?

AI ethics refers to the field of study and practical guidelines concerned with ensuring that AI technologies are developed and deployed in ways that are fair, safe, transparent, accountable, and respectful of human rights. Key principles include:

- Transparency: Can decisions made by AI be explained?

- Fairness: Does the AI avoid bias and discrimination?

- Privacy: Are data rights protected?

- Accountability: Who is responsible when AI makes a mistake?

- Autonomy: Does AI support or undermine human control?

These questions become increasingly complex as AI systems learn and evolve independently, sometimes developing emergent behaviors that even their creators cannot fully predict or control.

Stakeholders in AI Ethics: Who Makes the Rules?

1. Governments and Regulators

Government bodies have traditionally been the rule-makers in society, and AI is no exception. National and regional efforts are growing:

- The EU’s AI Act: The European Union has led with a regulatory framework that categorizes AI systems by risk, banning applications deemed to pose unacceptable risk and imposing strict standards on high-risk ones.

- China’s AI Governance: China focuses on state control and AI development within its national strategic interests, emphasizing security, social harmony, and censorship.

- The U.S. Approach: The U.S. has taken a more decentralized and industry-driven approach, with agencies like the FTC and NIST issuing guidelines rather than enforceable laws.

However, challenges remain in enforcement, international cooperation, and keeping regulations up to date with fast-moving technology.

2. Technology Companies

Tech giants like Google, Microsoft, Meta, and OpenAI are at the forefront of AI development. They often create their own ethical guidelines, internal review boards, and responsible AI teams. Notable examples include:

- Google’s AI Principles, published in 2018 after employee protests over its work with the U.S. military on Project Maven.

- OpenAI’s Charter, which pledges to ensure AGI (Artificial General Intelligence) benefits all of humanity.

- Microsoft’s Responsible AI Standard, focusing on accountability and human-centered design.

However, these companies often face conflicts of interest between ethical responsibility and profit motives. Self-regulation, while necessary, is not always sufficient.

3. International Organizations

Entities like the United Nations, OECD, and IEEE are working on international ethical frameworks to encourage global cooperation. For instance:

- The UNESCO AI Ethics Recommendation outlines global ethical standards.

- The OECD AI Principles were adopted by more than 40 countries, focusing on inclusive growth, human rights, transparency, and robustness.

But enforcement remains limited, especially in authoritarian regimes or highly competitive global economies where AI development is tied to national security.

4. Civil Society and Academia

Academics, ethicists, and human rights organizations play a crucial role in influencing AI ethics by:

- Conducting independent audits and research.

- Highlighting algorithmic bias (e.g., facial recognition inaccuracies).

- Lobbying for ethical policies and data rights.

Organizations like the Electronic Frontier Foundation (EFF) and Algorithmic Justice League advocate for transparent and accountable AI development, especially to protect marginalized communities.

5. The Public

As end-users and data sources, the public indirectly influences AI ethics. Their values, voting choices, and purchasing power affect regulatory pressure and company practices. However, many people are unaware of the ethical implications of AI or lack the literacy to engage meaningfully in debates.

Key Ethical Dilemmas in Future AI

a) Bias and Discrimination

AI trained on biased datasets can perpetuate racial, gender, or socioeconomic discrimination. In predictive policing, facial recognition, or hiring algorithms, this can reinforce systemic inequality.
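
One simple way auditors put a number on this kind of bias is to compare how often each group receives the favorable outcome. The sketch below is illustrative only: the group labels, the sample decisions, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts) are assumptions, not data from any system discussed in this article.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the person received the favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical hiring outcomes: (group, was_shortlisted)
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

ratio = disparate_impact_ratio(sample)
flagged = ratio < 0.8  # assumed cutoff; real audits use richer tests
print(f"ratio={ratio:.2f}, flagged={flagged}")
```

A low ratio does not prove discrimination on its own, but it signals that a system deserves closer human review.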

b) Privacy and Surveillance

AI systems used in surveillance, whether by governments or corporations, raise red flags about personal freedoms and mass data collection—often without consent.

c) Weaponization of AI

AI in autonomous weapons (killer robots) poses existential risks. Should machines be allowed to make life-and-death decisions? The lack of clear global treaties exacerbates the risk of an AI arms race.

d) Deepfakes and Misinformation

AI-generated content (deepfakes, fake news, manipulated videos) can disrupt democracies, damage reputations, and erode trust in digital media.

e) Job Displacement and Economic Inequality

As AI automates tasks across sectors, who bears responsibility for retraining workers or managing income inequality? Ethical deployment of AI must consider economic impacts.

Can Ethical AI Truly Be Achieved?

Achieving ethical AI is not about finding one set of rules but creating a flexible, evolving, and participatory governance model. It involves:

- Multi-stakeholder collaboration: Governments, companies, civil society, and the public must work together.

- Global cooperation: AI development is transnational, so its ethical oversight should be too.

- Built-in ethics: Ethics must be embedded in design, not tacked on later.

- Transparency and audits: Independent reviews can help ensure accountability.

- Education and awareness: A more informed public can demand better policies and corporate behavior.

The rise of artificial intelligence (AI) has revolutionized nearly every aspect of human life, from healthcare and finance to transportation, education, and even art, ushering us into an age where machines not only assist but also make decisions, solve problems, and influence the human condition. As AI systems become more autonomous and embedded in critical systems, the issue of AI ethics (essentially, the question of how we ensure that machines act in ways aligned with human values) has emerged as one of the most pressing debates of our time. The central question remains: who makes the rules for AI? The answer is complex, as multiple stakeholders share the responsibility.

Governments and regulators play a foundational role, with initiatives such as the European Union’s AI Act classifying systems based on their risk level and banning applications that threaten civil liberties. In contrast, countries like the United States have historically favored industry-led innovation over preemptive regulation, while China advances AI under tight state control, prioritizing surveillance, national strategy, and social governance. Technology companies like Google, Microsoft, Meta, and OpenAI also shape the ethical landscape significantly, developing in-house ethical frameworks and AI principles to guide product development, yet these are not immune to conflicts of interest when profits clash with principles. For instance, Google's infamous withdrawal from Project Maven highlighted the tension between employee ethics and defense contracts. Meanwhile, organizations like OpenAI adopt mission statements prioritizing humanity’s collective interest in artificial general intelligence (AGI), yet critics argue that these charters, while visionary, lack enforceability.

International bodies like the United Nations, OECD, and UNESCO are developing global standards for AI ethics, but the absence of binding treaties and the divergent values of participating nations often hinder meaningful cooperation. Civil society, including NGOs, academic researchers, whistleblowers, ethicists, and independent auditors, also contributes substantially, especially in exposing algorithmic bias and advocating for underrepresented communities. Public voices are rising in protest against facial recognition in public spaces, predictive policing tools that reinforce racial profiling, and the lack of transparency in social media algorithms.

Ethical concerns grow further in domains such as surveillance, where AI is used to track citizens en masse, often without their knowledge or consent; in the military, where lethal autonomous weapons pose an existential question about whether machines should have the power to kill; and in social manipulation, where deepfakes and algorithmically amplified misinformation threaten democratic processes and societal trust. Moreover, as AI replaces human labor in industries ranging from customer service to logistics and even creative writing, the ethical implications of job loss and economic disparity cannot be ignored.

Fairness, transparency, accountability, privacy, safety, and human oversight have become the core pillars of AI ethics. Yet embedding these into complex, self-learning systems is a daunting task. Machine learning models are often “black boxes,” making it hard to trace how they reach conclusions; even developers sometimes can’t explain their systems’ behaviors. Worse still, these systems often inherit and amplify societal biases encoded in the training data, leading to discrimination against minorities in healthcare, housing, hiring, and criminal justice. Ethical failures are not hypothetical; they are real and already here. For example, a healthcare algorithm in the U.S. was found to provide worse recommendations for Black patients than white patients with the same health conditions, because it used historical cost of care as a proxy for need, perpetuating systemic inequities.

So, who ensures such mistakes are prevented or corrected? The answer lies in layered responsibility. Governments must create clear, enforceable rules; companies must be transparent and proactive; civil society must remain vigilant; and international collaboration must promote a baseline of ethical standards across borders. Yet another essential piece of the puzzle is public literacy. Most people still don’t understand how AI works or how it affects their lives, making them vulnerable to manipulation and unable to contribute meaningfully to ethical discourse. Thus, investing in public education about AI is just as important as designing the systems themselves.

Achieving ethical AI is not a one-time effort but an ongoing, evolving mission that requires multi-stakeholder governance, inclusive design, cultural sensitivity, and technological humility. It is not just about creating intelligent machines but about understanding the kind of society we want to live in and ensuring that AI serves those goals rather than undermines them. In the end, the question “Who makes the rules?” is not about picking one ruler but about creating a collaborative, transparent, and participatory framework where no one entity has unchecked power. The ethical future of AI lies not in the hands of coders or lawmakers alone, but in the collective will of global society to balance innovation with integrity, efficiency with empathy, and progress with principles.

While the concept of AI ethics sounds straightforward—ensuring that artificial intelligence behaves in a way that aligns with human values and legal norms—in practice, implementing such ethics across global societies is deeply complex and often inconsistent due to cultural differences, geopolitical interests, technological gaps, and economic incentives that shape how rules are created, interpreted, or enforced. This complexity is further amplified by the global, borderless nature of AI systems, where an algorithm developed in Silicon Valley can be deployed in Nairobi, analyzed in Singapore, and abused in Moscow, raising the fundamental question: can ethical standards be universal, or must they remain regionally adaptable? Many argue that a universal baseline—emphasizing human rights, transparency, and non-maleficence—must be agreed upon internationally, similar to climate agreements or humanitarian laws, yet history has shown that nations often prioritize sovereignty over shared accountability, especially when emerging technologies offer a strategic edge in global competitiveness. This becomes particularly relevant when discussing AI's military applications, where states are reluctant to bind themselves to limitations that adversaries might ignore. For example, autonomous drones, facial recognition surveillance, and AI-enhanced cyber tools are areas where ethical restraint may be seen as weakness. In response, multilateral efforts by the United Nations, including calls for a global treaty banning lethal autonomous weapons systems, have faced resistance from major powers with strong defense-tech industries. Meanwhile, technology companies hold immense sway in the ethical application of AI because they not only build the systems but also control the data pipelines, computing power, and talent that fuel these models. Their influence often outpaces that of governments, especially in countries with weak regulatory mechanisms. 
Many firms tout AI ethics teams and publish responsible AI guidelines, but critics often call these efforts performative—designed more for public relations and investor reassurance than true accountability. For instance, several major tech firms have disbanded or defunded internal ethics teams after internal conflicts or whistleblower reports emerged, undermining public trust. Moreover, without legal compulsion, there’s little incentive for companies to invest in transparency, especially when revealing algorithmic operations might expose trade secrets or legal risks. This raises another thorny issue: explainability. AI systems, especially those using deep learning, operate as black boxes, with decision-making pathways so complex and non-linear that even their creators cannot fully explain how specific outputs are derived. In high-stakes environments like healthcare, criminal justice, and finance, this opacity makes it difficult to ensure fairness or detect harm until it’s too late. Worse still, many AI models are trained on historical data filled with human biases—racism, sexism, economic inequality—which the system learns and perpetuates unless explicitly corrected. A notable example includes hiring algorithms that downgraded resumes with female-coded language or predictive policing tools that sent more patrols to neighborhoods with higher arrest records, reinforcing patterns of over-policing in minority communities. Ethical AI demands rigorous auditing, bias testing, and correction mechanisms, yet few nations have enforced laws mandating these processes. The European Union’s AI Act aims to be the first comprehensive legal framework addressing such issues by requiring transparency, documentation, and human oversight, especially for high-risk AI systems. Still, it faces challenges related to scope, enforcement, and technological neutrality. 
In the absence of comprehensive global law, civil society organizations and academic institutions step in as watchdogs and thought leaders. Groups like the Algorithmic Justice League and researchers at MIT, Stanford, and the University of Toronto have helped uncover bias and advocate for ethical reforms. Whistleblowers have also played pivotal roles in alerting the public to ethical breaches—from privacy violations to manipulative design in social media algorithms. Yet, even these voices are often marginalized or punished within their institutions. Moving forward, it is essential that the public is not left out of the ethical equation. Citizens must be educated and empowered to understand how AI affects their rights, behaviors, and choices, from the ads they see online to the loans they receive, or even the news they consume. Digital literacy, therefore, is a foundational pillar for meaningful AI ethics, because informed public pressure is one of the strongest forces for accountability. Policies must not be made in closed rooms by technical elites; they must involve inclusive dialogue with marginalized communities, labor unions, ethicists, and non-experts who are often the subjects—not designers—of AI systems. At the same time, governance must be agile enough to keep pace with the technology. Unlike traditional policymaking cycles, which move slowly, AI evolves rapidly, with new capabilities—like large language models or autonomous agents—emerging faster than regulators can understand, let alone control. This demands experimental regulatory approaches like algorithmic sandboxes, public algorithm registries, or independent certification bodies, much like what exists in the medical or aviation industries. Transparency reporting, akin to nutrition labels for algorithms, may become a norm in the future, empowering users to see what data was used, who built the model, and what risks it poses. 
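
As a rough illustration of what such a "nutrition label" could contain, the sketch below defines a small record and renders it as plain text. Every field name and value here is hypothetical; it is not drawn from any existing standard or regulation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelLabel:
    """A hypothetical transparency label for a deployed model."""
    name: str
    developer: str
    intended_use: str
    training_data: List[str] = field(default_factory=list)
    known_risks: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the label as short, human-readable text."""
        lines = [
            f"Model: {self.name}",
            f"Built by: {self.developer}",
            f"Intended use: {self.intended_use}",
            "Training data: " + (", ".join(self.training_data) or "not disclosed"),
            "Known risks: " + (", ".join(self.known_risks) or "none reported"),
        ]
        return "\n".join(lines)


# Fictional example values, for illustration only
label = ModelLabel(
    name="loan-screening-demo",
    developer="Example Bank (fictional)",
    intended_use="First-pass review of loan applications",
    training_data=["2015-2020 application records"],
    known_risks=["May underperform for applicants with thin credit files"],
)
print(label.render())
```

The point of keeping the label this short is that a non-expert could read it in seconds, much like a food label.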
We must also embrace the idea of “ethics by design,” embedding values into the architecture of systems rather than tacking them on afterward. This involves diverse teams in development, robust user testing, red-teaming to explore malicious use cases, and scenario planning to anticipate societal impacts. Ultimately, AI ethics is not just a matter of rules or principles; it is about power—who holds it, who benefits from it, and who is harmed by it. If left unchecked, AI could reinforce existing inequalities, concentrate power in the hands of a few, and undermine democratic structures. But if steered wisely, it can enhance human potential, democratize access to knowledge and services, and solve problems at scales never before imagined. The choice lies not with one group alone but with all of us—governments, companies, civil society, academia, and everyday people—collectively shaping the future of AI not as a force beyond our control, but as a reflection of our collective values, priorities, and humanity itself.

Conclusion

AI ethics is no longer a theoretical debate; it is a pressing global concern. As AI systems permeate decision-making across sectors, establishing ethical norms becomes critical to safeguard human rights, democracy, and safety. No single entity can, or should, own the rule-making process. Governments must regulate, companies must self-police, civil society must act as a watchdog, and the public must stay informed.

The future of AI will be shaped not just by technological innovation, but by the moral compass we choose to follow.

Q&A Section

Q1: What is AI ethics and why is it important?

Ans: AI ethics is a set of principles that guide the responsible development and use of artificial intelligence. It's important to ensure fairness, transparency, accountability, and protection of human rights in systems that affect society.

Q2: Who decides what’s ethical in AI?

Ans: Multiple stakeholders decide: governments through laws, tech companies via internal policies, international organizations via global standards, and civil society by advocacy and public pressure.

Q3: Why can’t we rely solely on companies to self-regulate AI?

Ans: Because companies may prioritize profits over ethical considerations, and without external oversight, there is a risk of unethical or harmful practices going unchecked.

Q4: What role does the public play in shaping AI ethics?

Ans: The public can influence ethical AI through democratic participation, consumer choices, activism, and demanding transparency and accountability from governments and corporations.

Q5: What are the biggest ethical challenges with future AI?

Ans: Bias and discrimination, surveillance, job displacement, autonomous weapons, and the spread of misinformation via deepfakes are among the most pressing concerns.

Similar Articles

Find more relatable content in similar Articles

Digital DNA: The Ethics of Gen..

Digital DNA—the digitization a.. Read More

Protecting Kids in the Digital..

In an increasingly connected w.. Read More

Data Centers and the Planet: M..

As cloud computing becomes the.. Read More

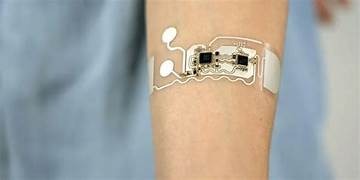

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.