AI Bias: Why It Happens and How to Prevent It

AI bias arises when artificial intelligence systems produce unfair or discriminatory outcomes, often due to flawed data, algorithm design, or human error. It poses significant ethical challenges, especially in critical areas like hiring, law enforcement, and healthcare. Bias can be reduced by using diverse datasets, ensuring algorithmic transparency, and applying ethical frameworks during development. Involving diverse teams, monitoring models regularly, and implementing government regulations are also key to preventing harmful bias.

✨ Raghav Jain

Introduction

Artificial Intelligence (AI) is rapidly becoming an integral part of our lives—from the recommendations we receive on Netflix and Amazon, to facial recognition software, hiring tools, loan approvals, and even legal sentencing. With the rise of machine learning and data-driven decision-making, there's an increasing reliance on algorithms that seem objective and impartial. But here lies a significant concern—AI bias.

AI bias refers to systematic and repeatable errors in AI systems that create unfair outcomes, such as privileging one group over another. These biases are not due to a machine’s intention (because machines don’t have intentions), but rather due to the data and design choices made by humans. Understanding AI bias, its roots, and how to counteract it is essential to ensure AI remains fair, transparent, and beneficial for everyone.

This article explores the causes of AI bias, its real-world consequences, and effective strategies for prevention. AI bias is a pervasive problem: it can skew systems toward certain outcomes or groups, often reflecting and amplifying existing societal inequalities. It can arise at every stage of the AI development lifecycle, from the data used to train models, to the design choices made by developers, to the broader societal context in which systems are deployed. Because biased systems affect critical domains such as criminal justice, healthcare, finance, education, and employment, they can produce discriminatory outcomes and reinforce societal disparities. A clear understanding of where AI bias comes from, combined with robust preventative measures, is therefore essential for developing fair, equitable, and trustworthy AI.

One of the primary sources of AI bias lies within the data used to train these systems. Machine learning algorithms learn patterns and relationships from the data they are fed, and if this data is skewed, incomplete, or reflects existing biases, the resulting AI model is likely to inherit and perpetuate these biases. Historical bias, for instance, occurs when training data reflects past societal prejudices or discriminatory practices. For example, if a hiring algorithm is trained on historical data where certain demographic groups were underrepresented in specific roles due to discriminatory practices, the algorithm may learn to unfairly disadvantage candidates from these groups in the future. Representation bias arises when certain subgroups within the population are underrepresented or entirely absent in the training data. This can lead to AI models that perform poorly or unfairly on these groups simply because the models have not seen enough examples of their characteristics and patterns. Measurement bias occurs when the data used to train the model does not accurately reflect the underlying phenomenon being measured. This can happen due to flawed data collection methods, biased sensors, or the use of proxies that do not accurately represent the intended variable. For instance, using arrest rates as a proxy for crime rates can introduce bias, as certain communities may be disproportionately targeted by law enforcement. Sampling bias arises when the training data is not a random or representative sample of the population of interest, leading the model to learn skewed relationships. This can occur due to convenience sampling, self-selection bias, or other non-random data collection methods. Therefore, the quality, diversity, and representativeness of training data are crucial determinants of the fairness and equity of AI systems.
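Representation bias can often be spotted before any training happens by comparing each group's share of the dataset with its share of the population the system is meant to serve. The sketch below assumes every record carries a group label and that reference shares (for example, from census figures) are known; the function name `representation_gaps` and the 0.5 `tolerance` cut-off are illustrative choices, not a standard.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.5):
    """Flag groups whose share of the dataset is below `tolerance` times
    their share of the reference population.

    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total  # 0.0 if the group is absent
        if observed < tolerance * expected:
            flagged[group] = (observed, expected)
    return flagged


# Hypothetical training set: 90 records from group "A", 10 from group "B",
# while the (assumed) target population is split evenly.
training_groups = ["A"] * 90 + ["B"] * 10
population_shares = {"A": 0.5, "B": 0.5}

print(representation_gaps(training_groups, population_shares))
# {'B': (0.1, 0.5)}
```

A flagged group is a signal to collect more data or to check performance for that group separately, not proof that the final model is unfair.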

Beyond the data itself, biases can also be introduced during the model development process. Algorithm bias can occur when the design of the AI algorithm itself inherently favors certain outcomes or groups. For example, certain algorithmic choices or architectural designs might inadvertently optimize for performance on the majority group while underperforming on minority groups. Evaluation bias arises when the metrics used to evaluate the performance of the AI model are not equally applicable or fair across different groups. If a model performs well on average but exhibits significant disparities in accuracy or error rates across different demographic groups, relying solely on overall performance metrics can mask these biases. Aggregation bias occurs when a single model is applied to diverse subgroups within the population without accounting for their inherent differences. This can lead to suboptimal or unfair outcomes for certain subgroups whose specific characteristics are not adequately captured by the aggregated model. Interpretation bias can occur when the results of an AI model are interpreted in a way that reinforces existing stereotypes or prejudices. Even if a model is technically fair, biased interpretation of its outputs can lead to discriminatory decisions. Developer bias, stemming from the conscious or unconscious beliefs and assumptions of the AI developers, can also seep into the design choices, feature engineering, and evaluation processes. The lack of diversity within AI development teams can exacerbate this issue, as different perspectives and experiences might not be adequately considered. Thus, careful consideration of algorithmic design, evaluation metrics, model application, and the diversity of the development team are essential to mitigate biases introduced during the model building phase.
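Evaluation bias is easiest to see with numbers: a single overall accuracy figure can look healthy while one group fares much worse. The minimal sketch below disaggregates accuracy by group; the data are hypothetical and `accuracy_by_group` is an illustrative helper, not a library function.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return (overall_accuracy, {group: accuracy}) for parallel lists."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical results: 90 majority-group cases (85 correct)
# and 10 minority-group cases (5 correct).
y_true = [1] * 100
y_pred = [1] * 85 + [0] * 5 + [1] * 5 + [0] * 5
groups = ["majority"] * 90 + ["minority"] * 10

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(round(overall, 3), {g: round(a, 3) for g, a in per_group.items()})
# 0.9 {'majority': 0.944, 'minority': 0.5}
```

The overall figure of 90% hides the fact that the minority group gets a coin-flip level of accuracy, which is exactly the disparity that averaging masks.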

Furthermore, the broader societal context in which AI systems are deployed plays a significant role in the emergence and perpetuation of AI bias. Societal biases, ingrained in cultural norms, historical injustices, and systemic inequalities, can manifest in the data used to train AI models and influence the design and interpretation of these systems. Feedback loops can also amplify existing biases. For example, a biased AI system used in hiring might lead to fewer applications from underrepresented groups, which in turn reinforces the bias in the training data for future iterations of the model. The deployment environment and the way in which AI systems interact with the real world can also introduce or exacerbate biases. If an AI system is deployed in a context where certain groups are already disadvantaged, its decisions, even if seemingly neutral, can perpetuate or worsen these inequalities. The lack of transparency and interpretability in some AI models, particularly complex deep learning models, can make it difficult to identify and understand the sources of bias, hindering efforts to mitigate them. The "black box" nature of these models can obscure the decision-making process, making it challenging to pinpoint why certain biased outcomes are occurring. Therefore, addressing AI bias requires not only technical solutions but also a critical examination of the societal context and the ethical implications of AI deployment.

Preventing AI bias requires a multi-faceted and proactive approach throughout the entire AI lifecycle. Ensuring data quality and diversity is paramount. This involves careful data collection, cleaning, and augmentation techniques to create representative and balanced datasets. Addressing missing data and outliers, and actively seeking out and incorporating data from underrepresented groups, are crucial steps. Implementing fairness metrics and constraints during model development can help to build models that are explicitly designed to minimize disparities across different groups. Techniques that can be employed include adversarial debiasing, which trains the model alongside an adversary that tries to predict the sensitive attribute from the model's outputs and penalizes the model whenever the adversary succeeds, and counterfactual fairness, which requires that a prediction stay the same in a counterfactual world where an individual's sensitive attribute were different. Ensuring transparency and interpretability of AI models, through techniques like explainable AI (XAI), can help to identify and understand the sources of bias in model decisions. Regular auditing and monitoring of AI systems after deployment are essential to detect and address any emerging biases or unintended consequences. Establishing clear ethical guidelines and regulations for AI development and deployment, along with fostering interdisciplinary collaboration between AI researchers, social scientists, ethicists, and policymakers, are crucial for promoting responsible AI innovation. Furthermore, promoting diversity and inclusion within AI development teams can bring a wider range of perspectives and help to identify and mitigate potential biases. Educating developers and stakeholders about the risks and implications of AI bias is also essential for fostering a culture of fairness and accountability in AI development.
Ultimately, preventing AI bias requires a continuous and collaborative effort to ensure that AI systems are developed and deployed in a way that benefits all members of society and contributes to a more equitable future.
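One well-known way to rebalance data without collecting new records is reweighing, a preprocessing technique described by Kamiran and Calders. Each training example gets the weight P(group) × P(label) / P(group, label), so that in the weighted data group membership and outcome look statistically independent. The sketch below is a minimal pure-Python version; the data and the helper `weighted_positive_rate` are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-sample weights w = P(group) * P(label) / P(group, label).

    After weighting, each group has the same weighted positive rate as the
    dataset as a whole (assuming every group has at least one example of
    each label), so group and label appear independent.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Counts form of the formula: (n_g / n) * (n_y / n) / (n_gy / n)
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of a group's total weight that carries a positive label."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


# Hypothetical data: group A has 4 of 6 positive labels, group B has 1 of 4.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))
# A 0.5
# B 0.5
```

The weights leave the records themselves untouched; many scikit-learn estimators can consume them through the `sample_weight` argument of `fit`.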

What is AI Bias?

AI bias occurs when an AI system reflects or amplifies human prejudices. These biases can be embedded in the training data, in the algorithmic logic, or in the human decisions involved in model creation. The result? AI systems that may discriminate based on gender, race, age, or other demographic and socio-economic factors.

For example:

- Facial recognition software that misidentifies Black faces more often than white ones.

- Hiring algorithms that downgrade resumes with female names.

- Healthcare prediction tools that under-prioritize Black patients' health needs.

These biases are dangerous because they can scale discrimination across millions of users quickly and invisibly.

Why Does AI Bias Happen?

AI bias doesn’t appear magically. It happens due to a variety of reasons—many of which are rooted in human error, oversight, or structural inequality. Let’s break down the primary causes.

1. Biased Training Data

AI learns by analyzing large datasets. If the data contains historical or social biases, the AI will absorb and replicate them.

Example:

If historical hiring data reflects gender bias (favoring men over women), then the AI trained on this data will continue to do the same.
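One way to check a trained screening model for this kind of inherited bias is to compare selection rates across groups. The sketch below computes the ratio of the lowest group selection rate to the highest; the 0.8 threshold follows the "four-fifths rule" that US employment guidelines use as a rule of thumb for flagging possible adverse impact (it signals a need to investigate, not proof of discrimination). The data and function names are hypothetical.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = selected) for each group."""
    totals, selected = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(decision)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any positive decisions")
    return min(rates.values()) / highest


# Hypothetical screening outcomes: 60 of 100 men and 30 of 100 women advanced.
decisions = [1] * 60 + [0] * 40 + [1] * 30 + [0] * 70
groups = ["men"] * 100 + ["women"] * 100

ratio = disparate_impact_ratio(decisions, groups)
print(selection_rates(decisions, groups), round(ratio, 2))
# {'men': 0.6, 'women': 0.3} 0.5
if ratio < 0.8:
    print("Below the four-fifths rule of thumb: investigate further")
```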

2. Lack of Diversity in Development Teams

Homogeneous teams are less likely to detect or consider issues of bias that affect underrepresented groups. A team made up entirely of individuals from the same background might overlook problems affecting other communities simply because they aren’t part of that experience.

3. Skewed or Incomplete Data

Sometimes the data used to train an AI system is incomplete or not representative of the population it's supposed to serve. This causes the AI to perform poorly or unfairly for certain groups.

Example:

If a voice recognition AI is trained mostly on male voices, it might fail to understand female voices accurately.

4. Labeling Errors and Subjective Judgments

When data is labeled for AI training, human annotators add tags or categories. If these people carry unconscious biases, those biases can end up encoded in the labels themselves.

Example:

A dataset labeling images of criminals might include racial stereotypes, skewing the AI’s future assessments.
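A common way to detect subjective labeling is to have two annotators label the same items and measure how much they agree beyond chance, for example with Cohen's kappa. Low agreement suggests the task is under-specified or open to personal judgment; high agreement does not rule out bias that the annotators share. The labels below are hypothetical, and this is a minimal sketch rather than a full annotation-quality pipeline.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same ten items.
annotator_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "yes", "no"]
annotator_2 = ["yes", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "no"]
print(round(cohens_kappa(annotator_1, annotator_2), 2))
# 0.6
```

Computing the same score separately for items depicting different demographic groups can reveal whether judgments become less consistent for some groups.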

5. Feedback Loops

In systems like predictive policing or search engine recommendations, the AI's decisions influence what data is collected in the future. This can create a feedback loop that reinforces existing bias.
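The mechanism can be illustrated with a deliberately simplified, hypothetical toy model: a single patrol is always sent to the district with the most recorded incidents, and incidents are only recorded where the patrol goes. Real systems are probabilistic and far more complex; this sketch only shows why data collected through the system's own decisions can lock in an initial imbalance.

```python
def simulate_patrols(recorded, true_rates, rounds):
    """Toy feedback loop: each round the single patrol goes to the district
    with the most recorded incidents, and only incidents in the patrolled
    district are observed and added to the record."""
    recorded = list(recorded)  # copy so the caller's history is unchanged
    for _ in range(rounds):
        target = max(range(len(recorded)), key=lambda d: recorded[d])
        recorded[target] += true_rates[target]
    return recorded


# Two districts with identical true incident rates; district 0 starts
# with just one extra recorded incident in the historical data.
print(simulate_patrols([11, 10], true_rates=[5, 5], rounds=10))
# [61, 10]
```

Although both districts have the same underlying rate, the records end up suggesting that district 0 has six times as many incidents, and a model retrained on those records would "confirm" the original skew.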

Examples of AI Bias in the Real World

1. Amazon’s Hiring Tool

Amazon once developed an AI recruitment tool to shortlist resumes. The tool learned from past hiring decisions, which favored male candidates. Consequently, it began penalizing resumes that included the word "women’s," such as "women’s chess club captain."

2. COMPAS Algorithm in Legal Sentencing

The COMPAS algorithm has been used in U.S. courts to assess the likelihood that a defendant will re-offend. A 2016 ProPublica investigation found that Black defendants who did not re-offend were nearly twice as likely as white defendants who did not re-offend to be misclassified as higher risk.
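ProPublica's analysis centered on error rates rather than overall accuracy: among people who did not reoffend, how often was each group labeled high risk? The minimal sketch below computes that false positive rate per group. The data are hypothetical and are not drawn from COMPAS.

```python
def false_positive_rates(y_true, y_pred, groups):
    """Per group: among people who did not reoffend (y_true == 0),
    the share that was labeled high risk (y_pred == 1)."""
    negatives, false_positives = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] = negatives.get(group, 0) + 1
            false_positives[group] = false_positives.get(group, 0) + int(pred == 1)
    return {g: false_positives[g] / negatives[g] for g in negatives}


# Hypothetical outcomes: per group, 10 people who did not reoffend
# followed by 5 who did (the latter are ignored by this metric).
y_true = [0] * 10 + [1] * 5 + [0] * 10 + [1] * 5
y_pred = [1] * 4 + [0] * 6 + [1] * 5 + [1] * 2 + [0] * 8 + [1] * 5
groups = ["group_x"] * 15 + ["group_y"] * 15
print(false_positive_rates(y_true, y_pred, groups))
# {'group_x': 0.4, 'group_y': 0.2}
```

Here people in `group_x` who never reoffended are twice as likely to be labeled high risk, even though the model treats every actual reoffender identically.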

3. Apple Credit Card

In 2019, users reported that the Apple Card, issued by Goldman Sachs, gave significantly lower credit limits to women than to men, even when couples shared their finances. Apple blamed the algorithm, but the biased output pointed toward deeper systemic issues.

Consequences of AI Bias

The impact of AI bias goes beyond technical glitches—it can cause real harm.

1. Discrimination and Inequality

AI can automate discrimination in hiring, lending, law enforcement, and healthcare. This could worsen systemic inequalities, especially if AI is seen as neutral or unquestionable.

2. Loss of Trust

As biased outcomes become more visible, public trust in AI systems decreases. People are less likely to use or support technology that they feel is unfair or unsafe.

3. Legal and Ethical Ramifications

Companies can face lawsuits and regulatory penalties for discriminatory AI. Beyond legality, there are ethical responsibilities that businesses and developers must uphold.

How to Prevent AI Bias

While completely eliminating bias may not be possible, there are several powerful strategies to reduce and manage it.

1. Diverse and Inclusive Development Teams

Including people from different backgrounds—gender, race, age, culture—helps identify blind spots early. Diversity promotes critical thinking and builds empathy into design.

2. Bias Audits and Fairness Testing

Just like software is tested for bugs, AI systems should be tested for bias. Third-party audits and continuous fairness evaluations can help detect problems before deployment.
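Fairness checks can be written like ordinary automated tests. The sketch below is a simple "flip test": it swaps only a sensitive attribute in each record and reports any record whose prediction changes. This is a naive counterfactual check, not the full causal definition of counterfactual fairness, and it cannot catch bias that flows through proxy features. The model, field names, and data are hypothetical.

```python
def flip_test(model, records, attribute, values):
    """Return the records whose prediction changes when only `attribute`
    is swapped between the two values in `values`."""
    a, b = values
    changed = []
    for record in records:
        if record.get(attribute) not in (a, b):
            continue
        flipped = dict(record)  # copy; everything else stays identical
        flipped[attribute] = b if record[attribute] == a else a
        if model(record) != model(flipped):
            changed.append(record)
    return changed


# A deliberately biased, hypothetical screening model used to exercise the test.
def biased_model(applicant):
    threshold = 60 if applicant["gender"] == "male" else 70
    return applicant["score"] >= threshold


applicants = [
    {"gender": "female", "score": 65},
    {"gender": "male", "score": 65},
    {"gender": "female", "score": 80},
]
failures = flip_test(biased_model, applicants, "gender", ("female", "male"))
print(len(failures))
# 2
```

In a continuous-integration setup, a check like `assert not failures` would stop a biased model version before deployment.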

3. Improve Data Quality

Using balanced, representative, and diverse data is key. Datasets should also be audited so that biased or skewed records can be corrected or removed.

4. Transparency and Explainability

Build AI models that can explain how they reached a decision. This increases accountability and allows users to challenge or understand results.
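For a linear model, each feature's contribution to a decision is exactly its weight times its value, so a decision can be explained directly. The hypothetical credit-scoring sketch below breaks one score into ranked contributions. For complex models, explainability tools such as SHAP or LIME estimate similar per-feature attributions approximately; the weights, feature names, and applicant here are illustrative only.

```python
def explain_linear_score(weights, bias, applicant):
    """Split a linear model's score into per-feature contributions
    (weight * value), ranked by absolute impact."""
    contributions = {name: w * applicant[name] for name, w in weights.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and one applicant (units are illustrative).
weights = {"income": 0.5, "debt": -1.0, "years_at_address": 0.25}
applicant = {"income": 4, "debt": 3, "years_at_address": 4}

score, ranked = explain_linear_score(weights, 1.0, applicant)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
# 1.0
# debt: -3.00
# income: +2.00
# years_at_address: +1.00
```

An explanation like this lets an applicant see which factor drove the outcome and challenge it if, for example, a feature turns out to be a proxy for a protected characteristic.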

5. Ethical AI Guidelines and Frameworks

Many organizations and governments are working to create ethical frameworks for AI. Following principles like fairness, transparency, accountability, and human oversight helps ensure that AI is built responsibly.

6. Human-in-the-Loop Systems

AI should not replace human judgment entirely. Keeping humans involved in decision-making—especially for critical areas like healthcare, criminal justice, or finance—can act as a safeguard.
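One common human-in-the-loop pattern is to automate only confident predictions and route borderline cases to a person. The minimal sketch below assumes a model that outputs a probability; the 0.5 threshold and 0.15 review band are hypothetical parameters that an organization would tune for its own risk tolerance.

```python
def route_decision(probability, threshold=0.5, review_band=0.15):
    """Return 'approve', 'deny', or 'human_review' for a model's
    predicted probability. Predictions within `review_band` of the
    decision threshold are treated as too uncertain to automate."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if abs(probability - threshold) < review_band:
        return "human_review"
    return "approve" if probability >= threshold else "deny"


for p in (0.92, 0.58, 0.41, 0.10):
    print(p, route_decision(p))
```

In high-stakes domains, a stricter variant might send every adverse decision, not just uncertain ones, to a human reviewer.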

What Governments and Institutions Can Do

Governments and regulators play a crucial role in reducing AI bias.

1. Enact Legislation on AI Fairness

Laws like the European Union’s AI Act are pushing for responsible AI. Similar policies worldwide can enforce standards for transparency, accountability, and non-discrimination.

2. Support Research and Education

Investing in AI ethics education ensures that future developers understand the social impacts of their work. More academic research into fairness algorithms can help advance the field.

3. Require Algorithmic Impact Assessments

Before AI systems are deployed—especially in public services—organizations should be required to conduct impact assessments to gauge possible biases.

Future Outlook: Is Bias-Free AI Possible?

The dream of completely bias-free AI may be unrealistic because bias exists in society, and AI learns from human behavior. However, by acknowledging and actively working against these biases, we can build fairer, more inclusive, and more responsible systems.

With growing awareness, better tools for fairness detection, and collaboration between technologists, ethicists, and policymakers, the future holds promise for ethical AI development.

Conclusion

AI bias is not just a technical issue—it is a societal challenge that reflects our values, our data, and our decisions. The very tools meant to empower us can unintentionally reinforce discrimination if built carelessly. But the good news is: we have the power to change this.

Preventing AI bias starts with awareness, continues through inclusive design and transparent practices, and is sustained by ongoing education and regulation. If we want AI that truly benefits everyone, we must build it responsibly—from the data we feed it to the diverse minds that create it.

Let us move toward a future where AI enhances equity instead of compromising it.

Q&A Section

Q1: What is AI bias and why is it a concern?

Ans: AI bias refers to systematic and unfair discrimination in artificial intelligence systems. It’s a concern because biased algorithms can lead to unfair treatment of individuals based on gender, race, age, or other factors.

Q2: How does AI bias occur during data collection?

Ans: AI bias often starts with biased or unrepresentative data. If the training data reflects historical inequalities or lacks diversity, the AI system learns and replicates those biases.

Q3: What role does human involvement play in AI bias?

Ans: Humans design, train, and test AI systems. Their assumptions, errors, or biases can unintentionally influence how the AI behaves, contributing to biased decision-making.

Q4: Can algorithms themselves be biased?

Ans: Yes, if algorithms are designed or tuned improperly, they can prioritize certain outcomes over others, reinforcing stereotypes or excluding specific groups.

Q5: What are real-world examples of AI bias?

Ans: Examples include facial recognition systems that misidentify minorities, hiring algorithms that favor male candidates, and credit scoring systems that disadvantage certain demographics.

Q6: How can diverse datasets help reduce AI bias?

Ans: Using diverse and inclusive datasets ensures that AI systems are trained on a wide range of scenarios and individuals, leading to more balanced and fair outcomes.

Q7: What is algorithmic transparency and how does it help?

Ans: Algorithmic transparency means making AI decisions and processes understandable. It helps identify, audit, and correct bias, allowing users and developers to trust the system.

Q8: How can developers prevent bias during model training?

Ans: Developers can guard against bias by using fairness-aware algorithms, balancing datasets, conducting bias testing, and following ethical guidelines throughout the AI development process.

Q9: What role do regulations play in managing AI bias?

Ans: Regulations and ethical frameworks can enforce accountability, require fairness audits, and protect individuals from discrimination caused by AI-driven systems.

Q10: Why is ongoing monitoring important for preventing AI bias?

Ans: AI systems evolve with new data. Continuous monitoring ensures that emerging biases are detected early and corrected, maintaining fairness over time.
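Continuous monitoring can be as simple as recomputing a fairness metric on each new batch of decisions and raising an alert when it crosses a threshold. A minimal sketch, assuming decisions are logged with a group label and grouped into weekly windows; the metric (lowest group selection rate divided by the highest), the 0.8 threshold, and the helper names are illustrative assumptions.

```python
def monitor_selection_gap(windows, min_ratio=0.8):
    """Given a list of time windows, each a list of (decision, group) pairs,
    return the indices of windows where the lowest group selection rate is
    below `min_ratio` times the highest."""
    alerts = []
    for index, window in enumerate(windows):
        totals, selected = {}, {}
        for decision, group in window:
            totals[group] = totals.get(group, 0) + 1
            selected[group] = selected.get(group, 0) + int(decision)
        rates = [selected[g] / totals[g] for g in totals]
        if rates and max(rates) > 0 and min(rates) / max(rates) < min_ratio:
            alerts.append(index)
    return alerts


def make_window(selected_a, selected_b, n=10):
    """Hypothetical helper: n decisions per group, with the given number selected."""
    return ([(1, "A")] * selected_a + [(0, "A")] * (n - selected_a)
            + [(1, "B")] * selected_b + [(0, "B")] * (n - selected_b))


# Three hypothetical weeks; the gap between groups widens in the last one.
weekly = [make_window(5, 5), make_window(6, 5), make_window(6, 3)]
print(monitor_selection_gap(weekly))
# [2]
```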

Similar Articles

Find more relatable content in similar Articles

Protecting Kids in the Digital..

In an increasingly connected w.. Read More

Data Centers and the Planet: M..

As cloud computing becomes the.. Read More

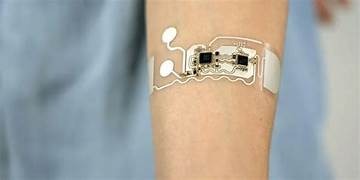

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

Digital DNA: The Ethics of Gen..

Digital DNA—the digitization a.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.