Ethical Dilemmas in Robotics: Can Machines Make Moral Decisions?

Ethical dilemmas in robotics explore whether machines can make moral decisions, especially in fields like healthcare, defense, and autonomous transport. While robots can follow programmed ethical guidelines, they lack genuine understanding and consciousness. Challenges arise from biased data, unclear accountability, and complex situations like the Trolley Problem. Responsibility for robot actions lies with their creators, not the machines themselves. Laws, interdisciplinary collaboration, and et

Raghav Jain

Introduction

As intelligent robots become increasingly integrated into our daily lives—driving our cars, assisting in surgeries, serving in the military, and helping in elder care—new ethical questions emerge that humanity has never faced before. These questions are not merely technical or legal; they are deeply moral. When a robot must make a life-or-death decision, who programs its values? Can a machine truly understand what is right or wrong? And more importantly—can robots make moral decisions?

The intersection of ethics and robotics is no longer theoretical. With the rapid progress in artificial intelligence (AI), machine learning, and autonomous systems, machines are being designed to perform tasks that involve moral reasoning. However, coding ethics into a machine is far more complex than programming mathematical functions or mechanical movements.

This article explores the ethical dilemmas in robotics, investigates whether machines can make moral decisions, and analyzes the philosophical, technological, and social implications of delegating ethics to artificial minds.

The rapid advancement of robotics and artificial intelligence has ushered in an era where machines are no longer confined to performing simple, repetitive tasks, but are increasingly capable of making complex decisions in dynamic and unpredictable environments. This raises profound ethical dilemmas about the very nature of morality and whether it can be encoded into machines. The core of the debate is whether machines, which operate on algorithms and code, can truly possess the capacity for moral judgment, a concept traditionally associated with human consciousness, empathy, and a complex understanding of social context. Traditional ethical frameworks, developed over centuries by philosophers and theologians, often rely on concepts like free will, intentionality, and the ability to understand the consequences of one's actions. These attributes are, at best, imperfectly replicated in current AI systems.

One of the primary challenges in endowing robots with moral decision-making capabilities is the difficulty of defining and formalizing ethical principles in a way that can be translated into machine-readable code. Human morality is nuanced and context-dependent, and it often involves balancing competing values and principles, a process that is far from straightforward and often leads to disagreement even among humans. Attempts to program ethical behavior into robots usually take one of two forms: top-down approaches, where explicit ethical rules are hardcoded into the system, or bottom-up approaches, where machines learn ethical behavior from large datasets of human actions and moral judgments.

Top-down approaches, while seemingly straightforward, struggle to account for the inherent ambiguity and complexity of real-world ethical dilemmas, often leading to rigid and inflexible behavior that fails to adapt to novel situations. For example, a robot programmed with a strict "do no harm" rule might be unable to perform a necessary medical procedure that involves causing temporary pain, or a self-driving car might be paralyzed by conflicting priorities in an unavoidable accident scenario. Bottom-up approaches, on the other hand, rely on machine learning algorithms to extract patterns and correlations from data, but these algorithms are susceptible to biases present in the data, potentially leading to robots that perpetuate or even amplify existing societal prejudices. These approaches also often lack transparency, making it difficult to understand why a robot made a particular decision, which can erode trust and accountability.

The ethical dilemmas in robotics are particularly acute where machines are required to make decisions with life-or-death consequences, such as in autonomous vehicles, surgical robots, or military applications. The "trolley problem," a classic ethical thought experiment, is often used to illustrate the challenges of programming moral choices into machines. In this scenario, a runaway trolley is heading towards a group of people, and a decision must be made whether to divert it onto another track, potentially saving those people but sacrificing others. While humans may struggle with this decision, they possess an intuitive understanding of the moral weight of their actions and can consider factors such as intentions and potential consequences. For a machine, however, this decision must be reduced to a set of logical rules and calculations, raising questions about which factors should be prioritized and how to weigh competing values such as the preservation of life, the minimization of harm, and the respect for individual autonomy.

The development of autonomous weapons systems (AWS) raises particularly alarming ethical concerns. These are robots capable of making decisions about who to target and kill without human intervention. The prospect of machines making life-or-death decisions on the battlefield raises profound questions about accountability, responsibility, and the very nature of warfare. Critics argue that delegating such decisions to machines crosses a fundamental moral line, as it removes the human element of compassion, judgment, and the ability to understand the context of a situation. Furthermore, errors, malfunctions, or unintended consequences in autonomous weapons systems could lead to catastrophic outcomes with devastating humanitarian consequences.

Beyond these life-or-death scenarios, ethical dilemmas in robotics also arise in more mundane but equally important contexts, such as healthcare, where robots are used to assist with patient care, medication delivery, and even companionship. Questions about privacy, informed consent, and the potential for emotional manipulation become increasingly relevant as robots become more integrated into our daily lives. For example, a care robot designed to provide companionship to elderly individuals might develop a strong bond with its charge, raising concerns about the robot's influence and the potential for it to exploit vulnerabilities. Similarly, the use of robots in education and therapy raises questions about the potential impact on human development and the nature of human relationships.

Addressing the ethical dilemmas in robotics requires a multidisciplinary approach involving collaboration between engineers, computer scientists, ethicists, philosophers, policymakers, and the public. It is crucial to develop ethical frameworks, guidelines, and regulations that govern the design, development, and deployment of robots, ensuring that they are used in a way that aligns with human values and promotes the well-being of society. This process should involve open and inclusive dialogue, taking into account diverse perspectives and cultural contexts, to ensure that the ethical principles embedded in robots reflect a broad consensus. Ongoing research is also needed to explore new approaches to machine ethics, such as the development of more sophisticated AI models that can better understand and reason about moral concepts, and the creation of mechanisms for ensuring transparency, accountability, and explainability in robotic decision-making.

Ultimately, the question of whether machines can make moral decisions is not simply a technological challenge, but a fundamental philosophical question that goes to the heart of what it means to be human. As we continue to develop increasingly intelligent and autonomous robots, we must grapple with the ethical implications of our creations and strive to ensure that these machines serve humanity's best interests, while upholding the values and principles that we hold most dear. The future of robotics depends not only on our ability to create increasingly sophisticated machines, but also on our ability to imbue them with a sense of ethical responsibility, a task that will require careful thought, ongoing dialogue, and a commitment to shaping a future where humans and robots can coexist in a just and harmonious society.

Understanding Ethics in the Context of Robotics

Before delving into dilemmas, it’s important to understand what we mean by ethics. In simple terms, ethics is the study of what is morally right or wrong. In humans, moral decisions are influenced by:

- Personal values and experiences

- Cultural norms

- Emotional intelligence

- Religious or philosophical beliefs

In contrast, robots lack consciousness, empathy, and subjective experience. They operate based on algorithms, rules, and datasets. So when we talk about “ethics in robotics,” we’re referring to two main concepts:

- Ethical Design of Robots – how humans create, program, and deploy robots responsibly.

- Machine Ethics – whether and how robots themselves can act ethically.

Both raise challenging questions about accountability, responsibility, and trust in an increasingly automated world.

Common Ethical Dilemmas in Robotics

As robots are given more autonomy, a variety of real-world ethical dilemmas arise across different industries:

1. Autonomous Vehicles and the Trolley Problem

Autonomous vehicles may have to make split-second decisions in life-threatening situations. Consider a variant of the classic trolley problem: Should a self-driving car swerve to avoid hitting five pedestrians if it means killing its passenger?

Robots cannot “feel guilt” or “empathy.” Their decision-making is based on predefined rules or statistical models. But who decides those rules? Car manufacturers? Governments? Insurance companies?

2. Military Drones and Lethal Force

Autonomous drones can identify and attack targets with minimal human input. While this can reduce risks to soldiers, it raises major concerns:

- Can a machine decide when to kill?

- How does it differentiate between combatants and civilians?

- Who is responsible if an AI-controlled weapon causes unnecessary deaths?

This has led to debates around banning “killer robots” and demanding international regulation.

3. Healthcare and Medical Decisions

Robots are increasingly involved in medical diagnoses and surgeries. What happens if a healthcare robot misdiagnoses a patient, or prioritizes one life over another due to an algorithmic bias? Should robots decide how much care a terminally ill patient receives?

Ethical questions in healthcare include:

- Patient consent for robotic treatment

- Trust and bias in AI medical systems

- Accountability for robotic errors

4. Privacy Violations by Service Robots

Robots equipped with cameras, microphones, and sensors collect vast amounts of personal data. For example, home assistant robots or eldercare bots may capture private moments. This raises questions about:

- Consent and data usage

- Surveillance and monitoring

- Data security and breaches

5. Job Displacement and Economic Justice

While not a direct moral decision by a machine, the mass automation of jobs creates ethical issues around wealth distribution, unemployment, and social inequality. Do companies have a moral obligation to retrain displaced workers? Should governments provide universal basic income?

Can Machines Truly Understand Morality?

The idea of machine morality is a controversial and philosophical one. There are several reasons why robots may never fully grasp ethics in the human sense:

1. Lack of Consciousness

Robots don't possess consciousness, emotions, or intentions. Morality often involves empathy, remorse, and compassion—traits machines simply do not have.

2. Contextual Complexity

Moral decisions often depend on context, nuance, and cultural understanding. What is ethical in one society may not be in another. Encoding such flexible reasoning into rigid systems is extremely challenging.

3. Bias in Programming and Data

AI systems learn from human-created data. If that data includes biases (racial, gender, cultural), the robot's decisions will reflect them. A robot’s ethics are only as unbiased as the people who build and train it.
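One way to make this concern concrete is to compare how often a system gives a favorable outcome to different groups. The sketch below is only an illustration: the group labels, the records, and the function names are invented for the example, and the gap in positive rates (often called a demographic parity gap) is just one of several possible fairness measures.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """records: iterable of (group, outcome) pairs, where outcome is 1 or 0."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest positive rate across groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions made by a system: (group, 1 = favorable outcome).
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

print(positive_rate_by_group(decisions))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(decisions))              # 0.5
```

A large gap does not prove that the system is unfair, but it is a signal that the training data or the model deserves a closer look.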

4. The Problem of Unpredictability

Many modern robots rely on complex neural networks or probabilistic models, which can make their decision-making unpredictable or opaque. This lack of transparency is a major barrier to ethical trust.

Approaches to Teaching Ethics to Robots

Despite the challenges, researchers are exploring ways to embed ethics into intelligent machines. Some of the major approaches include:

1. Rule-Based Systems (Deontology)

These are based on fixed rules or laws, like Asimov’s famous “Three Laws of Robotics.” The robot follows clear “if-then” statements. However, rule-based systems often fail in complex scenarios where rules conflict.
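A minimal sketch of the rule-based idea, and of how it can deadlock, might look like the following. The two rules, the action fields, and the scenario are hypothetical and only loosely modeled on Asimov's laws; a real system would need far richer representations.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    violated_by: Callable[[dict], bool]  # True when the action breaks this rule


# Hypothetical rules, listed in priority order.
RULES = [
    Rule("do_not_harm_human", lambda action: action["harms_human"]),
    Rule("obey_operator", lambda action: not action["ordered_by_operator"]),
]


def first_violation(action: dict) -> Optional[str]:
    """Return the name of the highest-priority rule the action breaks, or None."""
    for rule in RULES:
        if rule.violated_by(action):
            return rule.name
    return None


def permitted(actions: list) -> list:
    """Names of the actions that break no rule."""
    return [a["name"] for a in actions if first_violation(a) is None]


# An operator orders a painful but necessary injection.
candidates = [
    {"name": "give_injection", "harms_human": True, "ordered_by_operator": True},
    {"name": "refuse", "harms_human": False, "ordered_by_operator": False},
]

print(permitted(candidates))  # [] : every option breaks some rule
```

Because the injection causes temporary pain and refusing disobeys the operator, no action is permitted. The rules alone give the robot no way forward, which is the kind of conflict described above.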

2. Consequentialist Models (Utilitarianism)

Here, the robot evaluates the consequences of each action and chooses the one that results in the greatest overall good. But calculating “the greatest good” is highly subjective and may involve sacrificing individuals for the majority.
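As a rough illustration, a consequentialist chooser can be sketched as an expected-utility calculation. The actions, probabilities, and utility values below are invented for the example; choosing those numbers is exactly the subjective step the paragraph above warns about.

```python
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(probability * utility for probability, utility in outcomes)


def choose_action(options):
    """Return the name of the action with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name]))


# Hypothetical options for a vehicle; utilities are negative because they model harm.
options = {
    "brake_hard": [(0.9, -1.0), (0.1, -10.0)],  # expected utility about -1.9
    "swerve": [(0.5, 0.0), (0.5, -5.0)],        # expected utility -2.5
}

print(choose_action(options))  # brake_hard
```

If the harm assigned to the worst braking outcome were changed from -10.0 to -20.0, the same code would choose to swerve, so the "ethical" result depends entirely on who sets the numbers.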

3. Machine Learning and Value Alignment

In this model, robots learn ethics by observing human behavior. The goal is to align a robot’s values with human values. However, human morality is not always consistent or logical, making this alignment tricky.

4. Hybrid Models

Some researchers suggest combining multiple ethical frameworks to create a more balanced system. For example, a robot could follow rules unless a utilitarian exception is required.
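One possible reading of "follow rules unless a utilitarian exception is required" is sketched below. The threshold value, the action fields, and the fallback when every action breaks a rule are assumptions made for illustration, not an established method.

```python
EXCEPTION_THRESHOLD = 5.0  # hypothetical: how much better a rule-breaking action must be


def hybrid_choice(actions):
    """actions: list of dicts with 'name', 'violates_rule' (bool), and 'utility' (float)."""
    best_overall = max(actions, key=lambda a: a["utility"])
    allowed = [a for a in actions if not a["violates_rule"]]
    if not allowed:
        # Assumption: if every action breaks a rule, fall back to utility alone.
        return best_overall["name"]
    best_allowed = max(allowed, key=lambda a: a["utility"])
    # Break a rule only when the gain in utility is large enough.
    if best_overall["utility"] - best_allowed["utility"] > EXCEPTION_THRESHOLD:
        return best_overall["name"]
    return best_allowed["name"]


# A painful procedure breaks "do no harm" but is much better for the patient.
medical = [
    {"name": "wait", "violates_rule": False, "utility": -8.0},
    {"name": "painful_procedure", "violates_rule": True, "utility": 4.0},
]
# Here the rule-breaking action is only slightly better, so the rule holds.
minor = [
    {"name": "ask_first", "violates_rule": False, "utility": 2.0},
    {"name": "act_without_consent", "violates_rule": True, "utility": 3.0},
]

print(hybrid_choice(medical))  # painful_procedure
print(hybrid_choice(minor))    # ask_first
```

The threshold makes the trade-off explicit, but it also moves the hard ethical question into a single number that someone still has to choose.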

The Role of Human Oversight

Given the current limitations of machine morality, many experts emphasize the importance of human oversight. Even in highly autonomous systems, a human-in-the-loop approach is often recommended. This ensures that:

- Final decisions are approved by a human

- Accountability remains with the human operator or designer

- Robots are tools, not moral agents

Transparency in AI decision-making, known as explainable AI, is also crucial. Developers should ensure that robotic systems can justify their actions in understandable terms.
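A human-in-the-loop gate can be sketched in a few lines. In this illustration the risk score, the threshold, and the `ask_human` callback are all hypothetical. It also assumes that only actions above the risk threshold need approval, which is a looser policy than requiring a human to approve every decision.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    action: str
    risk: float      # 0.0 (harmless) to 1.0 (severe), as estimated by the system
    rationale: str   # plain-language explanation shown to the human reviewer


RISK_THRESHOLD = 0.3  # hypothetical cut-off for requiring human approval


def decide(proposal, ask_human):
    """Return (status, decided_by). ask_human is a callable that returns True or False."""
    if proposal.risk < RISK_THRESHOLD:
        return ("executed", "system")
    approved = ask_human(proposal)
    return ("executed" if approved else "rejected", "human")


def cautious_reviewer(proposal):
    """Stand-in for a real person: prints the rationale and declines."""
    print(f"Review requested for {proposal.action}: {proposal.rationale}")
    return False


low = Proposal("dim_lights", 0.05, "Patient is asleep.")
high = Proposal("administer_sedative", 0.8, "Patient shows signs of agitation.")

print(decide(low, cautious_reviewer))   # ('executed', 'system')
print(decide(high, cautious_reviewer))  # ('rejected', 'human')
```

Recording who made each decision keeps accountability traceable, and passing the rationale to the reviewer is a small step toward the explainability described above.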

Legal and Policy Implications

Ethical dilemmas in robotics also have legal consequences. As intelligent robots become more involved in decision-making, legal systems must adapt to answer questions such as:

- Who is liable if a robot makes a harmful decision?

- Should robots have rights or legal status?

- How should data collected by robots be regulated?

Jurisdictions such as the European Union are developing AI regulations to enforce transparency, fairness, and accountability. However, global cooperation will be essential, especially for areas like military robotics.

Religious and Philosophical Perspectives

Different cultures and religions may interpret robot ethics differently. For example:

- Some Eastern philosophies may see robots as part of the natural order.

- Western thinkers may emphasize human exceptionalism, arguing that machines can never truly be moral beings.

- Religious scholars may question whether giving moral authority to robots diminishes human responsibility or spiritual value.

These perspectives influence public trust and the willingness to adopt robotic technologies.

Real-Life Examples of Ethical Robotics Challenges

- Self-driving car accidents: Tesla's Autopilot system has been involved in multiple fatal crashes, raising questions about system responsibility.

- Robot caregivers in Japan: These bots are helping elderly people, but some argue that replacing human touch with machines could be ethically harmful.

- AI judges: In some countries, algorithms assist in legal sentencing. This raises concerns about fairness, bias, and lack of human empathy.

The Future: Ethical Frameworks for a Robotic World

As we move toward a future where robots are common in society, we must:

- Develop international ethical standards for robotic design and deployment.

- Ensure diverse and inclusive teams are involved in AI development to avoid bias.

- Encourage public discourse and education about machine ethics.

- Invest in interdisciplinary research combining technology, philosophy, law, and psychology.

Organizations such as the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems are already working on guiding principles for ethical robotics.

Conclusion

The rise of intelligent robots has brought undeniable progress across many sectors, but it has also opened a Pandora’s box of ethical dilemmas. From the battlefield to the hospital, from your home to the courtroom, robots are being trusted with decisions that deeply affect human lives.

So, can machines make moral decisions? The answer is not a simple yes or no. While machines can be programmed to follow ethical rules or mimic moral behavior, true morality requires understanding, empathy, and accountability—qualities that are uniquely human.

Therefore, instead of asking machines to become moral beings, we must focus on building ethically responsible technologies guided by human values. It’s up to us to ensure that the robots of the future serve humanity with fairness, transparency, and care—because ethical machines begin with ethical humans.

Q&A Section

Q1: What are ethical dilemmas in robotics?

Ans: Ethical dilemmas in robotics arise when machines face situations that require moral judgment, such as choosing between two harmful outcomes or deciding how to prioritize human safety.

Q2: Can robots truly understand right from wrong?

Ans: Robots cannot inherently understand right from wrong; they operate on pre-programmed instructions and data, and lack the consciousness, emotions, and moral reasoning that humans have.

Q3: How are moral decisions programmed into robots?

Ans: Moral decisions are programmed using algorithms, ethical frameworks, and decision-making models designed by human developers, often based on utilitarian or rule-based ethics.

Q4: What is the Trolley Problem and how does it relate to robotics?

Ans: The Trolley Problem is a philosophical dilemma used to examine moral decision-making, often applied to robots like self-driving cars needing to choose between two harmful outcomes.

Q5: Are robots used in fields where ethical decisions are critical?

Ans: Yes, robots are used in healthcare, the military, and autonomous transport, where ethical decisions such as prioritizing lives or delivering care are often critical and complex.

Q6: Who is responsible for a robot's unethical action?

Ans: Responsibility typically lies with the human creators, programmers, or organizations deploying the robot, as machines follow the logic and data they are given.

Q7: Can AI and robots be biased in decision-making?

Ans: Yes, if the data or algorithms used to train them are biased, AI and robots can replicate and even amplify those biases in their decisions.

Q8: What role do laws and regulations play in robot ethics?

Ans: Laws and regulations guide the ethical use of robots, ensuring safety, accountability, and fairness in how they are developed, programmed, and deployed.

Q9: How do ethicists and engineers collaborate in robotics?

Ans: Ethicists and engineers work together to design systems that consider moral outcomes, evaluate possible risks, and create guidelines for ethical behavior in machines.

Q10: What is the future of ethical decision-making in robotics?

Ans: The future may involve more advanced AI capable of ethical reasoning, but human oversight will remain essential to ensure robots act responsibly and align with societal values.

Similar Articles

Find more relatable content in similar Articles

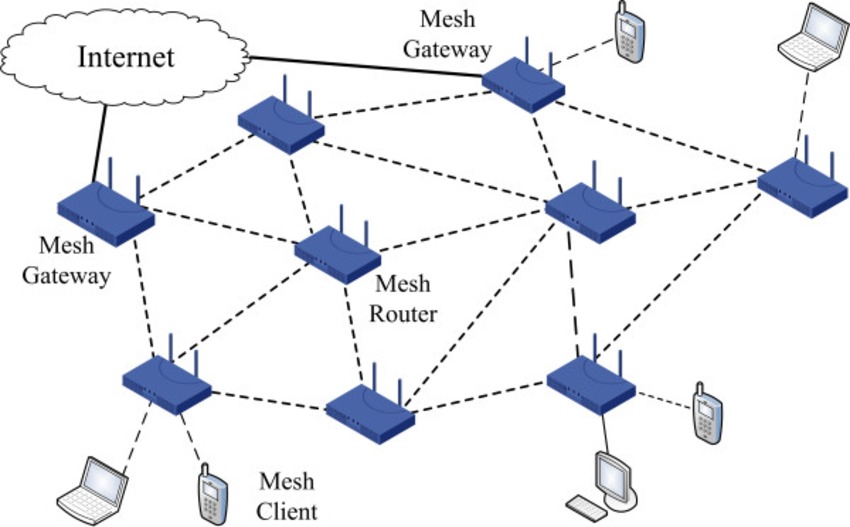

Why Mesh Networks Might Replac..

Mesh networks are transforming.. Read More

Foldable Screens in 2025: Are ..

Foldable screens have finally .. Read More

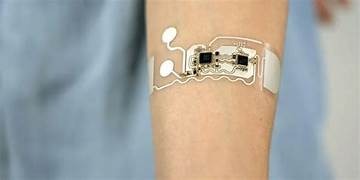

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

How Blockchain Is Reinventing ..

Blockchain is transforming cyb.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.