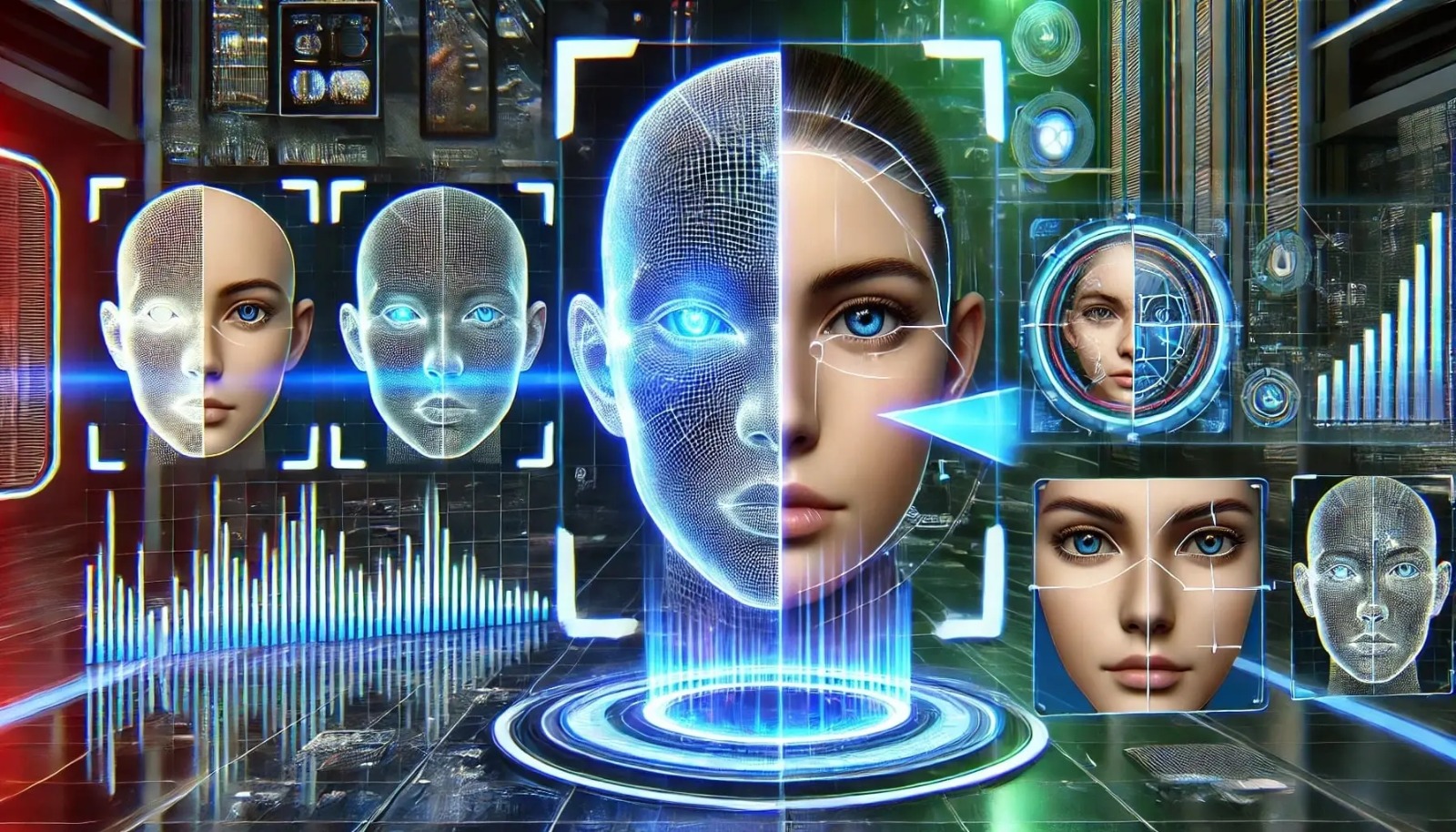

Deepfake detection vs creation arms race: tools, datasets, counterstrategies.

“Exploring the escalating arms race between AI-generated deepfakes and advanced detection technologies, this article examines the evolution of creation tools, datasets driving innovation, detection strategies, and emerging countermeasures, highlighting the societal, legal, and ethical implications of synthetic media while providing insight into how governments, researchers, and the public can navigate and mitigate the risks of hyper-realistic manipulated content in the digital era.”

✨ Raghav Jain

Introduction

In the last decade, artificial intelligence has made enormous strides in the field of synthetic media, particularly deepfakes. A “deepfake” refers to media—videos, audio, or images—that has been manipulated or entirely generated by AI to mimic real people. What began as an experimental use of generative adversarial networks (GANs) has now matured into a powerful, often controversial technology. Deepfakes can make celebrities appear in fake videos, clone voices for scams, or even generate entirely new digital humans.

As deepfake creation technologies advance, so too do the tools and strategies designed to detect them. This has led to a continuous “arms race” between creators and detectors. On one side, researchers, criminals, and artists are building increasingly realistic deepfake generation models. On the other, governments, tech companies, and academic institutions are developing detection algorithms, benchmark datasets, and counterstrategies.

This article explores the dynamics of this arms race, examining how deepfakes are created, how detection methods evolve, what datasets drive progress, and what counterstrategies are being deployed in the ongoing battle to safeguard truth in the digital age.

The Rise of Deepfake Creation Technologies

1. Generative Adversarial Networks (GANs)

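At the heart of many deepfake systems is the GAN, introduced in 2014 by Ian Goodfellow and colleagues. GANs pit two neural networks against each other: a generator that creates fake samples, and a discriminator that tries to tell real from fake. As training alternates between the two, the generator improves until its output can be nearly indistinguishable from authentic media. Classic face-swap tools such as DeepFaceLab and FaceSwap, however, are built mainly on autoencoders that share one encoder across two identities, with adversarial losses as an optional add-on.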
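For readers who want the precise objective: in the 2014 formulation, the discriminator $D$ and generator $G$ play a minimax game over a single value function, where $D(x)$ is the discriminator's estimated probability that $x$ is real and $G(z)$ is the sample generated from random noise $z$:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator raises $V$ by scoring real samples near 1 and generated ones near 0; the generator lowers it by pushing $D(G(z))$ toward 1. In practice the generator is usually trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training.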

2. Beyond GANs: Transformers and Diffusion Models

Modern deepfake creation has moved beyond GANs to transformer-based models and diffusion architectures. Text-to-image and text-to-video models (e.g., OpenAI’s Sora, Stability AI’s Stable Diffusion, and Meta’s Make-A-Video) now let users create highly convincing synthetic content with minimal expertise.
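Diffusion models learn to reverse a gradual noising process. The sketch below shows only the forward (noising) half in the style of DDPM-type models, not the internals of Sora, Stable Diffusion, or Make-A-Video; the linear schedule and its endpoints are common textbook defaults assumed here, and the 8x8 array is a stand-in for an image.

```python
import numpy as np

def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule (assumed defaults)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0: np.ndarray, t: int, alpha_bars: np.ndarray,
              rng: np.random.Generator) -> tuple[np.ndarray, np.ndarray]:
    """Sample x_t from q(x_t | x_0) in closed form; also return the noise,
    which is the target a denoising network is trained to predict."""
    a_bar = alpha_bars[t]
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise
    return x_t, noise

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))   # hypothetical stand-in for an image scaled to [-1, 1]
alpha_bars = linear_alpha_bars(1000)
slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)      # early step: mostly image
almost_pure_noise, _ = add_noise(image, 999, alpha_bars, rng)  # last step: mostly noise
```

A trained network would learn to predict the added noise from the noisy sample; generation then runs that prediction in reverse, step by step, starting from pure noise.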

3. Tools for Deepfake Creation

- FaceSwap and DeepFaceLab remain widely used open-source tools.

- FakeApp popularized consumer-level face-swapping in video.

- Voice cloning tools such as Descript’s Overdub and ElevenLabs generate speech that mimics specific human voices with frightening accuracy.

- AI avatar platforms like Synthesia and Rephrase.ai offer synthetic presenters for commercial work such as advertising and training videos.

While these tools have legitimate applications in entertainment, accessibility, and education, they also fuel malicious uses like misinformation campaigns, political manipulation, and financial fraud.

Deepfake Detection Technologies

As deepfake generation advances, so must detection techniques. Researchers employ a range of methods:

1. Visual Artifacts Analysis

Early deepfake detection relied on spotting obvious flaws: unnatural blinking, mismatched lighting, or blurry edges around faces. As generators improved, these easy cues became unreliable on their own.
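Blink-based checks typically start from facial landmarks. A minimal sketch, assuming six landmarks per eye are already supplied by some face-landmark detector (not shown), computes the eye aspect ratio (EAR), which drops sharply when the eye closes, and counts blinks as short runs of low EAR. The 0.2 threshold and two-frame minimum are illustrative assumptions, not tuned values.

```python
import math

Point = tuple[float, float]

def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR from six eye landmarks ordered p1..p6: p1/p4 are the corners,
    p2/p3 lie on the upper lid and p6/p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series: list[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames whose EAR is below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:   # a blink still in progress when the clip ends
        blinks += 1
    return blinks

def blinks_per_minute(ear_series: list[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip; implausibly low rates were an early red flag."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes

# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3))    # 0.667
print(round(eye_aspect_ratio(closed_eye), 3))  # 0.067
```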

2. Physiological Signal Detection

Because deepfakes often struggle to replicate subtle human biological signals, detectors analyze aspects such as:

- Eye movements and blinking patterns.

- Micro-expressions and facial muscle dynamics.

- Blood flow signatures detectable through slight skin color changes (remote photoplethysmography).
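Remote photoplethysmography can be sketched as a frequency search: average the green channel over a skin region in every frame, then look for a dominant rhythm in the human heart-rate band. This assumes face tracking and skin-region extraction happen upstream; the traces below are synthetic, and the 0.7 to 4 Hz band is a common but assumed choice.

```python
import numpy as np

def _power_spectrum(trace: np.ndarray, fps: float) -> tuple[np.ndarray, np.ndarray]:
    """Return (frequencies in Hz, power) for a mean-removed 1-D signal."""
    centered = trace - trace.mean()                      # drop the constant skin-tone level
    power = np.abs(np.fft.rfft(centered)) ** 2
    freqs = np.fft.rfftfreq(centered.size, d=1.0 / fps)
    return freqs, power

def estimate_pulse_bpm(green_means: np.ndarray, fps: float,
                       low_hz: float = 0.7, high_hz: float = 4.0) -> float:
    """Dominant frequency inside a plausible heart-rate band (about 42 to 240 bpm), in beats per minute."""
    freqs, power = _power_spectrum(green_means, fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(freqs[band][np.argmax(power[band])] * 60.0)

def pulse_band_ratio(green_means: np.ndarray, fps: float,
                     low_hz: float = 0.7, high_hz: float = 4.0) -> float:
    """Share of non-DC power that falls in the heart-rate band; a low ratio means no clear pulse."""
    freqs, power = _power_spectrum(green_means, fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(power[band].sum() / power[1:].sum())

# Hypothetical synthetic 20-second traces at 30 fps (not real video data).
fps, seconds = 30.0, 20
t = np.arange(int(fps * seconds)) / fps
rng = np.random.default_rng(0)
with_pulse = 0.5 + 1.0 * np.sin(2 * np.pi * 1.2 * t) + 0.2 * rng.standard_normal(t.size)  # 1.2 Hz = 72 bpm
no_pulse = 0.5 + rng.standard_normal(t.size)                                              # noise only
```

A real detector would compare pulse signals across several face regions and over time rather than trust a single number, since compression and lighting changes also corrupt these subtle color traces.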

3. Frequency and Noise Analysis

AI-generated images often leave telltale traces in the frequency domain. Fourier analysis can expose unnatural noise patterns, such as periodic artifacts left by upsampling layers, that are invisible to the naked eye.
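A simple spectral feature illustrates the idea. The sketch below, a heuristic rather than any specific published detector, measures what share of an image's Fourier power lies far from the spectrum center; the checkerboard is a toy stand-in for upsampling artifacts, and the 0.25 cutoff is an arbitrary assumption.

```python
import numpy as np

def high_frequency_ratio(image: np.ndarray, cutoff: float = 0.25) -> float:
    """Fraction of spectral power farther than `cutoff` from the spectrum center.
    Radii are normalized so 1.0 is the Nyquist frequency along each axis."""
    img = image.astype(float) - image.mean()
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w]
    radius = np.hypot((yy - h // 2) / (h / 2), (xx - w // 2) / (w / 2))
    total = power.sum()
    return float(power[radius > cutoff].sum() / total) if total > 0 else 0.0

# Hypothetical 64x64 test images: smooth content, then the same content plus a
# checkerboard that mimics the grid artifacts some upsampling layers leave behind.
n = 64
y, x = np.mgrid[0:n, 0:n]
smooth = np.sin(2 * np.pi * x / n) * np.sin(2 * np.pi * y / n)
checkerboard = np.where((x + y) % 2 == 0, 1.0, -1.0)
with_artifacts = smooth + 0.2 * checkerboard

print(f"{high_frequency_ratio(smooth):.3f}")          # 0.000
print(f"{high_frequency_ratio(with_artifacts):.3f}")  # 0.138
```

In practice, features like this feed a trained classifier, and lossy compression such as JPEG also reshapes high frequencies, which complicates real-world use.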

4. Deep Learning Detection Models

Machine learning models trained on large datasets of real and fake videos have become the primary detection method. Convolutional neural networks (CNNs), recurrent networks, and transformer-based detectors learn complex patterns that distinguish genuine from synthetic media.
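Many learned detectors score individual face crops, so a video-level verdict needs an aggregation step. Assuming some per-frame classifier (not shown) has already produced fake probabilities, this sketch compares a plain mean with averaging only the most suspicious frames; the top-k option is one possible design choice, not a standard.

```python
from statistics import mean

def video_fake_score(frame_probs: list[float], top_k_fraction: float = 1.0) -> float:
    """Aggregate per-frame fake probabilities (0 = real, 1 = fake) into one video score.
    top_k_fraction=1.0 is a plain mean; smaller values average only the most suspicious frames."""
    if not frame_probs:
        raise ValueError("need at least one frame score")
    if not 0.0 < top_k_fraction <= 1.0:
        raise ValueError("top_k_fraction must be in (0, 1]")
    ranked = sorted(frame_probs, reverse=True)
    k = max(1, round(len(ranked) * top_k_fraction))
    return mean(ranked[:k])

# Hypothetical frame scores: the manipulation only shows up in two of ten frames.
frame_probs = [0.1] * 8 + [0.9, 0.95]
print(round(video_fake_score(frame_probs), 3))       # 0.265
print(round(video_fake_score(frame_probs, 0.2), 3))  # 0.925
```

Averaging only the top frames catches manipulations confined to a short segment, at the cost of more false alarms when a few genuine frames happen to score high.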

5. Blockchain and Watermarking

A proactive approach embeds digital watermarks or blockchain-based authenticity records at the moment of media creation. Strictly speaking this verifies rather than detects: the “digital provenance” approach helps confirm whether a video or image is unchanged since it was recorded.
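To show what “blockchain-based” provenance means mechanically, here is a toy hash chain under heavy simplifying assumptions: a single in-memory list stands in for a distributed ledger, there is no consensus protocol, and the device ID is hypothetical. Each entry commits to a SHA-256 fingerprint of the media and to the previous entry, so rewriting history breaks the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def _entry_digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same fields always hash the same way.
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

class ProvenanceLedger:
    """Toy append-only hash chain: each entry commits to a media fingerprint and to the entry before it."""

    FIELDS = ("index", "media_hash", "creator", "prev_hash")

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def register(self, media: bytes, creator: str) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {"index": len(self.entries), "media_hash": sha256_hex(media),
                "creator": creator, "prev_hash": prev}
        entry = dict(body, entry_hash=_entry_digest(body))
        self.entries.append(entry)
        return entry

    def is_registered(self, media: bytes) -> bool:
        digest = sha256_hex(media)
        return any(e["media_hash"] == digest for e in self.entries)

    def chain_is_intact(self) -> bool:
        prev = GENESIS
        for e in self.entries:
            body = {k: e[k] for k in self.FIELDS}
            if e["prev_hash"] != prev or e["entry_hash"] != _entry_digest(body):
                return False
            prev = e["entry_hash"]
        return True

ledger = ProvenanceLedger()
clip = b"raw bytes of an original video file"        # stand-in for real media
ledger.register(clip, creator="newsroom-camera-01")  # hypothetical device ID
print(ledger.is_registered(clip))          # True
print(ledger.is_registered(clip + b"!"))   # False: any byte change alters the hash
```

Exact hashes are brittle: even a benign re-encode changes the fingerprint, and a registered file proves only that it existed at registration time, not that it shows real events.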

The Role of Datasets in the Arms Race

The success of both creation and detection models depends on datasets. In general, the larger and more diverse a dataset, the better a model can generalize.

1. Key Deepfake Datasets for Detection

- FaceForensics++: One of the earliest and most widely used benchmarks, built from 1,000 YouTube videos manipulated with four methods (Deepfakes, Face2Face, FaceSwap, and NeuralTextures).

- DFDC (Deepfake Detection Challenge) Dataset: Released by Facebook and partners, with over 100,000 clips recorded with paid, consenting actors.

- Celeb-DF: Features high-quality celebrity face-swapped videos.

- DeeperForensics-1.0: Contains videos recorded under varied conditions and perturbed with real-world distortions such as compression, blur, and noise.
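Benchmarks need a scoring rule as well as data. The public DFDC competition ranked detectors by binary log loss; a minimal implementation (the clipping constant is an assumption) shows why that metric rewards calibrated, cautious scores: a confident wrong answer costs far more than an unsure one.

```python
import math

def binary_log_loss(labels: list[int], probs: list[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy with label 1 = fake, 0 = real.
    Probabilities are clipped to [eps, 1 - eps] so log(0) never occurs."""
    if not labels or len(labels) != len(probs):
        raise ValueError("labels and probs must be non-empty and equally long")
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)

labels = [1, 0]                                       # one fake clip, one real clip
print(round(binary_log_loss(labels, [0.5, 0.5]), 3))  # 0.693  (always unsure)
print(round(binary_log_loss(labels, [0.9, 0.1]), 3))  # 0.105  (confident and right)
print(round(binary_log_loss(labels, [0.1, 0.9]), 3))  # 2.303  (confident and wrong)
```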

2. Challenges with Datasets

- Bias: Many datasets rely heavily on celebrity or public-figure footage, which may not reflect the diversity of real-world subjects and limits generalization.

- Obsolescence: Datasets quickly become outdated as generation methods improve.

- Ethical concerns: Consent and privacy issues emerge when compiling large-scale face datasets.
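One practical way to surface dataset bias is to break evaluation results down by subgroup. The sketch assumes each test item carries a group annotation (which itself raises the consent issues above); group names and records are hypothetical.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples.
    Returns {group: fraction of misclassified items}."""
    errors: dict = defaultdict(int)
    totals: dict = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {g: errors[g] / totals[g] for g in totals}

# Hypothetical evaluation records; "group_a"/"group_b" stand for any annotated attribute.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
rates = error_rate_by_group(records)
print(rates)                                      # {'group_a': 0.0, 'group_b': 0.5}
print(max(rates.values()) - min(rates.values()))  # 0.5
```

A large gap between groups suggests the training data under-represents some subjects, even when overall accuracy looks good.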

The creation-detection arms race means datasets must constantly evolve to stay relevant.

Counterstrategies in the Arms Race

Beyond detection tools and datasets, broader counterstrategies are emerging:

1. Multimodal Detection

Instead of relying on a single signal (such as visual artifacts), detectors combine several modalities (visual, audio, and metadata) to improve accuracy. For instance, mismatches between speech and lip movement can expose fakes.
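Lip-sync checking can be approximated by correlating how wide the mouth is open with how loud the audio is, frame by frame. This sketch assumes both per-frame series were extracted upstream (for example, from facial landmarks and audio RMS) at a shared frame rate; it is a crude stand-in for learned audio-visual synchronization models, and all data below is synthetic.

```python
import numpy as np

def best_lagged_correlation(mouth_open: np.ndarray, audio_energy: np.ndarray,
                            max_lag: int = 5) -> tuple[float, int]:
    """Highest Pearson correlation between per-frame mouth opening and audio energy
    over offsets of -max_lag..max_lag frames. A positive lag means the mouth trails the audio."""
    n = min(len(mouth_open), len(audio_energy))
    best_corr, best_lag = -1.0, 0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_open[lag:n], audio_energy[:n - lag]
        else:
            a, b = mouth_open[:n + lag], audio_energy[-lag:n]
        if len(a) < 3 or a.std() == 0 or b.std() == 0:
            continue  # correlation is undefined for constant or too-short windows
        corr = float(np.corrcoef(a, b)[0, 1])
        if corr > best_corr:
            best_corr, best_lag = corr, lag
    return best_corr, best_lag

rng = np.random.default_rng(1)
audio = rng.random(200)                                  # hypothetical per-frame loudness
synced_mouth = np.concatenate([np.zeros(2), audio[:-2]]) + 0.05 * rng.standard_normal(200)
unrelated_mouth = rng.random(200)                        # mouth motion that ignores the audio
```

Genuine talking-head footage should show a strong correlation at a small offset, while a dubbed or swapped mouth often does not.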

2. Real-Time Detection

With live-streaming platforms under threat, researchers are developing real-time detection systems that aim to flag manipulated content within seconds of broadcast.
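Live detection has to decide from a stream of noisy per-frame scores. A minimal sketch, assuming a per-frame detector already exists, smooths scores with an exponential moving average and only raises a flag after several consecutive high frames; alpha, threshold, and patience are illustrative assumptions that trade reaction time against false alarms.

```python
from typing import Optional

class StreamingFlagger:
    """Smooth per-frame fake scores with an exponential moving average (EMA) and raise a
    flag once the smoothed score has stayed above `threshold` for `patience` frames."""

    def __init__(self, alpha: float = 0.3, threshold: float = 0.7, patience: int = 3) -> None:
        self.alpha = alpha          # weight of the newest score; higher reacts faster
        self.threshold = threshold
        self.patience = patience
        self.smoothed: Optional[float] = None
        self.streak = 0

    def update(self, score: float) -> bool:
        if self.smoothed is None:
            self.smoothed = score
        else:
            self.smoothed = self.alpha * score + (1 - self.alpha) * self.smoothed
        self.streak = self.streak + 1 if self.smoothed > self.threshold else 0
        return self.streak >= self.patience

# Hypothetical detector output: manipulation starts at frame 10.
steady = [0.1] * 10 + [0.95] * 10
flagger = StreamingFlagger()
first_flag = next(i for i, s in enumerate(steady) if flagger.update(s))
print(first_flag)  # 15

# A single high-scoring frame (for example, motion blur) should not trigger a flag.
spike = [0.1] * 10 + [0.99] + [0.1] * 10
spike_flagger = StreamingFlagger()
print(any(spike_flagger.update(s) for s in spike))  # False
```

In this synthetic stream the flag fires on the sixth high-scoring frame, while the isolated spike never triggers it.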

3. Legal and Policy Measures

Governments are introducing laws to curb malicious deepfakes:

- China requires AI-generated media to carry conspicuous labels, such as visible watermarks, under its deep synthesis rules.

- The EU’s AI Act includes transparency obligations requiring AI-generated or manipulated media (deepfakes) to be disclosed as such.

- In the U.S., various state laws criminalize malicious uses of deepfakes, particularly in political contexts.

4. Digital Provenance Frameworks

The Coalition for Content Provenance and Authenticity (C2PA), a consortium whose members include Adobe, Microsoft, and the BBC, publishes an open technical standard for attaching cryptographically signed metadata to media so that its origin and edit history can be verified.
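The core idea behind provenance standards like C2PA is binding claims about a file to a hash of its content and signing the bundle. The sketch below is not the C2PA format: field names and claims are hypothetical, and a shared-secret HMAC stands in for the certificate-based public-key signatures the real specification uses, so here only a holder of the key can verify.

```python
import hashlib
import hmac
import json

def _canonical(payload: dict) -> bytes:
    # Sorted-key JSON so signing and verifying see identical bytes.
    return json.dumps(payload, sort_keys=True).encode("utf-8")

def make_manifest(media: bytes, claims: dict, key: bytes) -> dict:
    """Bind provenance claims to the media's SHA-256 hash and sign the bundle (HMAC stand-in)."""
    payload = {"content_sha256": hashlib.sha256(media).hexdigest(), "claims": claims}
    signature = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}

def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the signature is valid AND the media still matches the signed hash."""
    expected = hmac.new(key, _canonical(manifest["payload"]), hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = manifest["payload"]["content_sha256"] == hashlib.sha256(media).hexdigest()
    return signature_ok and content_ok

key = b"demo-secret-key"                 # hypothetical shared key
photo = b"original image bytes"          # stand-in for a real file
manifest = make_manifest(photo, {"device": "camera-01", "edits": ["crop"]}, key)  # hypothetical claims

print(verify_manifest(photo, manifest, key))              # True
print(verify_manifest(photo + b"edited", manifest, key))  # False: content changed
```

Public-key signatures matter in the real standard because they let anyone verify a manifest without being able to forge one.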

5. Public Awareness and Media Literacy

Educating users to critically evaluate digital content is an essential counterstrategy. Even the most sophisticated detectors cannot replace human skepticism.

The Ongoing Arms Race: Who’s Winning?

The deepfake battle remains a cat-and-mouse game:

- Creators innovate with better generative models, moving from GANs to diffusion, transformer, and multimodal models.

- Detectors respond with improved algorithms—CNNs, transformers, and multimodal detection frameworks.

- Creators adapt again, training models specifically to fool detection systems.

This iterative cycle mirrors historical cybersecurity struggles (e.g., malware vs antivirus). Neither side achieves permanent dominance; rather, both evolve in tandem.
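The “creators adapt” step can be made concrete with the fast gradient sign method (FGSM), a standard adversarial-example technique. The detector here is a toy logistic-regression model with hypothetical weights, chosen because its gradient is simply the weight vector; attacks on real deep detectors obtain the gradient by backpropagation, and defenders often respond by training on such perturbed examples.

```python
import numpy as np

def fake_probability(x: np.ndarray, w: np.ndarray, b: float) -> float:
    """Toy linear detector: sigmoid(w . x + b) is its probability that input x is fake."""
    return float(1.0 / (1.0 + np.exp(-(x @ w + b))))

def fgsm_evade(x: np.ndarray, w: np.ndarray, epsilon: float) -> np.ndarray:
    """One fast-gradient-sign step that lowers the detector's logit.
    For this linear model the gradient of the logit with respect to x is just w,
    so each feature moves by exactly epsilon against the sign of its weight."""
    return x - epsilon * np.sign(w)

w = np.array([1.0, -0.5] * 32)   # hypothetical detector weights (64 features)
b = 0.0
x_fake = 0.05 * w                # a fake sample the detector correctly scores as fake
x_adv = fgsm_evade(x_fake, w, epsilon=0.1)

print(round(fake_probability(x_fake, w, b), 2))  # 0.88
print(round(fake_probability(x_adv, w, b), 2))   # 0.06
```

A small, bounded change to every feature flips the toy detector's verdict, which is why robustness to such perturbations has become part of detector evaluation.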

Deepfake detection versus creation has become one of the most intense technological arms races of the 21st century. AI systems that generate hyper-realistic synthetic media are met by equally sophisticated countermeasures designed to unmask them, and the struggle reflects a broader tension between innovation and misuse in the digital era. Deepfakes, built on generative models such as generative adversarial networks (GANs), can convincingly swap faces, clone voices, and fabricate scenes that appear authentic to the human eye. As the technology has matured, it has expanded to transformer-based and diffusion models that generate images, audio, and video with unprecedented realism. Tools such as DeepFaceLab, FaceSwap, and FakeApp, along with polished commercial platforms like Synthesia, ElevenLabs, and Rephrase.ai, serve dual purposes: legitimate uses in entertainment, marketing, and accessibility, and darker applications in misinformation campaigns, non-consensual explicit media, financial scams, and political propaganda. Detection, meanwhile, has grown from simple artifact spotting into far more advanced approaches. Early detectors relied on obvious flaws such as unnatural blinking, poor lip synchronization, or mismatched lighting, but these cues faded as generation improved. Researchers then turned to physiological signals (irregular eye movements, facial micro-expressions, and blood-flow changes visible as subtle skin-color fluctuations), to frequency-domain analysis of irregular pixel noise, and to deep learning classifiers trained to pick up hidden inconsistencies.
Today's state-of-the-art detectors use convolutional neural networks, recurrent layers, and transformers trained on large datasets of real and manipulated content. That progress is continually challenged by generation models tuned specifically to bypass detectors, which keeps the cat-and-mouse game alive. Datasets fuel both sides. FaceForensics++, the Deepfake Detection Challenge (DFDC) dataset released by Facebook and partners, Celeb-DF, and DeeperForensics-1.0 have supplied the large-scale, high-quality manipulated media needed to train detection algorithms. None of them is a permanent solution: each becomes outdated as new generation methods appear, many lean heavily on celebrity footage that does not reflect the diversity of real-world subjects, and all raise ethical questions about consent and privacy when people’s likenesses are used. Counterstrategies supplement detection. Multimodal approaches combine audio, visual, and metadata signals to find inconsistencies; real-time systems target live broadcasts, where manipulated media can go viral instantly; and digital provenance frameworks, such as blockchain-based certificates or watermarking, confirm authenticity at the moment of creation. One example is the Coalition for Content Provenance and Authenticity (C2PA), backed by Adobe, Microsoft, and the BBC among others, which specifies how to embed secure, signed metadata in digital files so that end users can check whether a video or image has been altered.
At the policy level, governments have begun responding with laws and regulations. China requires conspicuous labels on AI-generated media, the European Union’s AI Act imposes transparency obligations on AI-generated content, and several U.S. states have banned malicious deepfakes in political and pornographic contexts. Each of these measures tries to create accountability alongside technological safeguards. The arms race itself remains hard to escape: as detectors grow stronger, creators find new ways to evade them, just as malware evolves to bypass antivirus software, and the balance shifts back and forth with no clear endpoint. Some experts argue that creation will always stay slightly ahead, because generators improve continuously while detection lags until new datasets and algorithms catch up. Others believe that a layered defense combining AI-based detection, legal frameworks, media literacy, and provenance tracking can tilt the balance toward mitigation, even if total prevention is impossible. Public awareness may be the most underrated of these layers. Users who question digital media, verify sources, and apply skepticism are less vulnerable to deception, and without that human layer even the best detectors may fail in real-world conditions. The broader implications are societal, not merely technical. Deepfakes challenge our notions of truth, memory, and institutional trust, and left unchecked they could contribute to an era of “information nihilism” in which people believe nothing they see or hear online. At the same time, synthetic media holds real promise for film, education, accessibility for people with disabilities, and the creative industries when used responsibly.
The deepfake arms race, then, is not simply a struggle between good and bad actors. It is a test of our ability to integrate powerful AI into society while minimizing its harms. Iterative advances on both the creation and detection fronts will likely continue, and the “winner” may be decided less by who has the better algorithms than by whether society can combine technology, governance, and human critical thinking into a resilient ecosystem.

The arms race between deepfake creation and detection is one of the most significant technological challenges of the digital era. Advances in AI enable hyper-realistic synthetic media while demanding ever more sophisticated methods to detect and mitigate manipulation, and this cycle of innovation and counter-innovation has far-reaching implications for society, security, and trust in information. Much of the technology traces back to generative adversarial networks (GANs), introduced by Ian Goodfellow and colleagues in 2014. A GAN pits two neural networks against each other: a generator that creates synthetic content such as images, video, or audio, and a discriminator that judges whether that content is authentic, with each round of training improving the generator’s ability to produce convincing media. Over time the field has progressed from simple face swapping to transformations that replicate intricate facial expressions, vocal inflections, and gestures. More recent models use transformer architectures and diffusion-based approaches, enabling multimodal generation and even text-to-video synthesis of sequences never captured on camera, which makes the output increasingly difficult to distinguish from genuine footage.
The accessibility of these tools, from freely available projects like DeepFaceLab, FaceSwap, and FakeApp to commercial services such as Synthesia, ElevenLabs, and Rephrase.ai, has democratized deepfake creation. Artists and marketers can explore new creative possibilities, but bad actors can also produce misleading or malicious content for political propaganda, financial fraud, harassment, and identity theft, which raises the urgency for detection methods that keep pace with generation. Early approaches focused on visual artifacts such as irregular blinking, inconsistent lighting, or subtle distortions around facial features. As GANs and diffusion models improved, those markers became rarer, and researchers turned to physiological signals, including micro-expressions, subtle head movements, eye-gaze patterns, and blood flow visible as slight skin-color variations (a technique known as remote photoplethysmography). Others use frequency-domain analysis to find anomalies in the noise patterns of AI-generated images that the human eye cannot perceive. Deep learning methods, particularly convolutional neural networks, recurrent neural networks, and transformer-based architectures, now learn complex, abstract features that separate real media from synthetic, although their effectiveness is often limited by the quality, diversity, and relevance of their training data.
Large-scale datasets are central to both creation and detection, supplying the examples models need to learn realistic patterns or to recognize manipulations. Notable examples include FaceForensics++, which contains videos manipulated with several popular techniques; the DFDC dataset, with over 100,000 clips for training detectors; Celeb-DF, built from high-quality celebrity face-swap videos; and DeeperForensics-1.0, which emphasizes diverse recording conditions to approximate real-world variability. These datasets are far from perfect. They are often skewed toward certain demographics, limited in scope, or quickly made obsolete by new generation methods, and compiling and using personal likenesses at this scale raises ethical concerns about consent and privacy. Counterstrategies therefore extend beyond algorithmic detection to legal frameworks, digital provenance systems, and media literacy education, because governments and organizations recognize that technology alone is insufficient. China requires labels on AI-generated content, the European Union’s AI Act mandates transparency for synthetic media, and various U.S. states have criminalized malicious deepfakes, particularly in political and pornographic contexts. Initiatives such as the C2PA, whose members include Adobe, Microsoft, and the BBC, are developing standards for embedding secure metadata and cryptographic signatures in media files, so that consumers can confirm authenticity and trace the origin of digital content as an additional layer of defense.
Despite these measures, the arms race remains dynamic. Generators evolve to circumvent detection through adversarial training and steadily improving realism, much as malware and antivirus software adapt to each other without either side achieving permanent dominance. Multimodal detection is a particularly promising response: it combines visual, audio, and metadata cues to catch inconsistencies such as lip movements that do not match speech or temporal anomalies across video frames. Real-time systems are being built to monitor live streams, where a malicious deepfake could otherwise reach a large audience instantly, and public awareness campaigns teach users to evaluate media critically, verify sources, and stay skeptical, since human discernment is an essential complement to automated tools. The implications go beyond the technical. Hyper-realistic deepfakes threaten to erode confidence in media, distort public perception, and create fertile ground for misinformation, yet the same technologies enable virtual avatars for accessibility, educational content, entertainment, and interactive simulations, illustrating the dual-use nature of AI in media.
Ultimately, the detection-versus-creation arms race is not only about building superior algorithms but about developing an ecosystem that integrates technological innovation, legal regulation, ethical standards, and public awareness. Creation will keep evolving and detectors will keep adapting, so the responsibility to limit harm while preserving benefits rests with researchers, policymakers, technologists, and the public together. Balancing creative freedom against protection from manipulation is a defining challenge of the digital age.

Conclusion

The “deepfake detection vs creation arms race” is one of the defining technological battles of our time. Deepfakes have immense potential for creativity and innovation but also pose grave threats to democracy, security, and trust in information.

- Deepfake creation has evolved from GANs to diffusion and transformer-based models, producing increasingly realistic synthetic content.

- Detection has moved from spotting artifacts to sophisticated deep learning and multimodal analysis.

- Datasets like DFDC and FaceForensics++ are central to developing and benchmarking these tools, but face challenges of bias and obsolescence.

- Counterstrategies involve legal frameworks, digital provenance standards, and public media literacy.

Ultimately, the arms race will likely continue indefinitely. The best hope lies in combining technological, legal, and educational measures to mitigate harm while embracing legitimate uses of synthetic media. In this contest, the “winner” is not detection or creation, but society’s ability to responsibly manage and adapt to the realities of synthetic content.

Q&A Section

Q1: Why are deepfakes so convincing compared to traditional video editing?

Ans: Deepfakes leverage machine learning, particularly autoencoders, GANs, and diffusion models, to learn complex patterns of facial expressions, speech, and movement. Unlike traditional editing, which cuts, composites, or overlays existing footage, deepfakes synthesize new pixels and audio directly, so blends and transitions look far more seamless and realistic.

Q2: What are the main risks of malicious deepfakes?

Ans: Malicious deepfakes can spread misinformation, damage reputations, manipulate elections, enable financial fraud (through voice cloning scams), and create non-consensual explicit content. Their realism makes them especially dangerous for trust in digital media.

Q3: How effective are current deepfake detectors?

Ans: While state-of-the-art detectors achieve high accuracy in lab settings, real-world performance drops due to varied video quality, compression, and new generation methods. Detection is improving, but no method is foolproof.

Q4: Can blockchain really help detect deepfakes?

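Ans: Blockchain doesn’t detect deepfakes directly; it helps confirm authenticity. If a file’s fingerprint (hash) and provenance data are recorded when it is created, anyone can later check whether a copy still matches that record. A mismatch shows the file was changed, although a match alone cannot prove the original capture was genuine. This reduces the chance of successful deepfake deception.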

Q5: Will deepfake creation always stay ahead of detection?

Ans: Likely yes, in cycles: as new creation methods emerge, detectors lag until they are updated. However, combining AI detection, legal safeguards, and media literacy can limit widespread harm even if detection is never perfect.

© 2025 Copyrights by rTechnology. All Rights Reserved.