Algorithmic bias in non-Western contexts: datasets, representation, consequences.

"Exploring how AI systems trained predominantly on Western datasets fail to represent non-Western languages, cultures, and identities, resulting in widespread algorithmic bias that affects economic opportunities, healthcare, political surveillance, and cultural preservation, while highlighting the urgent need for localized datasets, participatory AI design, ethical governance, and decolonization of AI to create inclusive technologies for global equity."

✨ Raghav Jain

Introduction

Artificial Intelligence (AI) and machine learning technologies are often seen as neutral tools that function on logic, data, and statistical precision. Yet, beneath this perception lies a troubling reality: algorithms are only as objective as the data they are trained on, and this data is often riddled with biases that reflect systemic inequalities. The issue of algorithmic bias has been extensively discussed in Western contexts—where datasets frequently underrepresent marginalized groups or reinforce existing social hierarchies. However, in non-Western contexts, the problem is not only present but magnified. These societies often face compounded challenges: lack of localized datasets, dominance of Western-centric technological infrastructures, cultural misrepresentations, and weak regulatory frameworks.

This article explores the root causes of algorithmic bias in non-Western contexts, examining datasets, representation, and the far-reaching consequences of these biases on societies outside the Western world.

1. The Roots of Algorithmic Bias in Non-Western Contexts

1.1 Data Colonialism and Dataset Origins

Most state-of-the-art AI models are trained on massive datasets created and curated in the Global North. For example, large language models rely heavily on English-language content scraped from Western-centric platforms like Wikipedia, Reddit, or news sites. When these datasets are deployed in non-Western societies, they may fail to capture local cultural, linguistic, or historical realities.

This phenomenon has been referred to as data colonialism: a continuation of historical power imbalances in which Western powers extract, classify, and exploit non-Western data with little reciprocity or representation. African languages, for example, are spoken by hundreds of millions of people, yet they make up only a tiny fraction of the text in the corpora used to train Natural Language Processing (NLP) systems. The result is systems that fail to understand, translate, or process African languages and cultural realities.
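
To make the imbalance concrete, an audit team might start by counting how much of a corpus each language accounts for. The Python sketch below is a minimal illustration. It assumes that every document has already been tagged with a language code by some upstream language-identification step, which is not shown. The function names, the toy counts, and the 1% cut-off for "underrepresented" are hypothetical choices made for illustration, not an established standard.

```python
from collections import Counter


def language_shares(doc_langs):
    """Return (language, share) pairs, largest share first.

    `doc_langs` holds one language code per document; the codes are
    assumed to come from an upstream language-identification step.
    """
    counts = Counter(doc_langs)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(lang, n / total) for lang, n in counts.most_common()]


def underrepresented(shares, threshold=0.01):
    """Languages whose share of the corpus falls below `threshold`.

    The 1% default is a hypothetical cut-off chosen for illustration.
    """
    return [lang for lang, share in shares if share < threshold]


# Toy corpus with invented counts: 1,000 documents, mostly English.
corpus = ["en"] * 950 + ["fr"] * 40 + ["hi"] * 8 + ["sw"] * 1 + ["yo"] * 1

shares = language_shares(corpus)
for lang, share in shares:
    print(f"{lang}: {share:.1%}")
print("Below threshold:", underrepresented(shares))
```

Counting documents is only a first pass. A fuller audit might weight by tokens rather than documents. It also cannot see languages that the identification step fails to recognize, and such failures are more likely for low-resource languages.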

1.2 Linguistic Marginalization

AI-driven tools such as Google Translate or speech recognition systems often struggle with non-Western languages, dialects, and accents. South Asian languages like Hindi, Bengali, and Tamil are spoken by hundreds of millions, yet their digital presence is minimal compared to European languages. The result is poor-quality translations, errors in sentiment analysis, and digital exclusion for large populations.

1.3 Representation Gaps in Image and Video Datasets

Facial recognition algorithms notoriously perform worse on darker skin tones, particularly for women of color. This is not coincidental. Face datasets such as LFW (Labeled Faces in the Wild) are disproportionately filled with images of white subjects, and general-purpose image datasets such as ImageNet draw heavily on images from North America and Europe. In non-Western contexts, such biases can translate into misidentification of African or Asian individuals, wrongful arrests, or even denial of essential services.
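
One practical response is to stop reporting a single aggregate accuracy figure and instead break error rates down by demographic group. This is how disparities like these are usually surfaced. The sketch below assumes an evaluation set in which each comparison carries a group label, for example a skin-tone and gender category. The group names, the toy data, and the helper functions are hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Misidentification rate per group.

    Each record is (group, predicted_id, true_id); the group labels are
    assumed to come from a separately annotated evaluation set.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def worst_to_best_ratio(rates):
    """How many times higher the worst group's error rate is than the best's."""
    best, worst = min(rates.values()), max(rates.values())
    if best == 0:
        return 1.0 if worst == 0 else float("inf")
    return worst / best


def toy_records(group, n, n_wrong):
    """Invented data: the first `n_wrong` of `n` predictions are wrong."""
    return [(group, -1 if i < n_wrong else i, i) for i in range(n)]


records = (toy_records("lighter-skinned men", 200, 2)
           + toy_records("darker-skinned women", 200, 30))
rates = error_rates_by_group(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.1%} misidentified")
print(f"Worst-to-best ratio: {worst_to_best_ratio(rates):.1f}x")
```

Across these 400 invented comparisons, a single aggregate figure would report 92% accuracy. That number hides the fact that one group's error rate (15%) is fifteen times the other's (1%).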

2. Representation Challenges Beyond Data

2.1 Cultural and Social Misrepresentation

Western-centric algorithms often impose Western categories of identity, gender, or behavior on non-Western societies. For instance, gender recognition systems trained on binary gender norms fail to account for Hijra communities in South Asia or third-gender categories in Indigenous cultures. Similarly, sentiment analysis tools may misinterpret local metaphors, sarcasm, or cultural expressions.

2.2 Exclusion of Local Knowledge Systems

Non-Western societies often have unique epistemologies, traditions, and social structures that are invisible in global datasets. For example, Indigenous ecological knowledge—critical for climate change adaptation—rarely enters AI training systems. When algorithms model “environmental risks,” they frequently exclude these alternative ways of knowing, thereby reinforcing a narrow Western scientific worldview.

2.3 Power Dynamics and Corporate Control

Most cutting-edge AI systems are developed by Western corporations like Google, Microsoft, and OpenAI. Their priorities shape what counts as “valid data” or “useful models.” Non-Western communities become consumers rather than active participants in shaping algorithms. This technological dependency can reinforce economic hierarchies, limiting non-Western nations’ sovereignty over their digital futures.

3. Consequences of Algorithmic Bias in Non-Western Contexts

3.1 Social and Economic Exclusion

Algorithmic bias can exacerbate pre-existing inequalities in developing nations. In hiring processes, automated résumé screening systems may undervalue candidates who do not conform to Western naming conventions or educational pathways. Similarly, credit-scoring algorithms may deny loans to individuals without Western-style financial histories, excluding millions from financial inclusion programs.
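
A common first check for this kind of exclusion compares outcome rates across groups. Examples include how often applicants with and without formal credit histories are approved, or how often résumés from local and foreign universities are shortlisted. The sketch below computes each group's selection rate relative to a reference group. The 0.8 threshold is borrowed from the “four-fifths rule” in US employment-selection guidance and is used here only as an assumption; a regulator elsewhere could reasonably choose a different benchmark. The group names and decisions are invented.

```python
def selection_rates(decisions):
    """Share of positive outcomes (approved, shortlisted) per group.

    `decisions` maps a group label to a list of 0/1 outcomes.
    """
    return {group: sum(outcomes) / len(outcomes)
            for group, outcomes in decisions.items() if outcomes}


def impact_ratios(rates, reference):
    """Each group's selection rate divided by the reference group's rate."""
    ref_rate = rates[reference]
    if ref_rate == 0:
        raise ValueError("reference group has a zero selection rate")
    return {group: rate / ref_rate for group, rate in rates.items()}


def flagged(ratios, threshold=0.8):
    """Groups whose ratio falls below `threshold` (an assumed benchmark)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Invented loan decisions: 1 = approved, 0 = rejected.
decisions = {
    "formal credit history": [1] * 60 + [0] * 40,
    "no formal credit history": [1] * 24 + [0] * 76,
}
rates = selection_rates(decisions)
ratios = impact_ratios(rates, reference="formal credit history")
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}")
print("Flagged:", flagged(ratios))
```

In this toy data the second group is approved 24% of the time versus 60%, a ratio of 0.4, so it is flagged. A low ratio does not by itself prove that a model is unfair. It does tell auditors where to look, for instance at which input features are driving the gap.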

3.2 Political Surveillance and Authoritarianism

In some non-Western states, biased AI technologies are not just flawed but weaponized. Governments have deployed facial recognition and predictive policing systems, some imported from foreign vendors and others built domestically, that disproportionately target minority communities. For instance, China’s surveillance technologies in Xinjiang, developed largely by Chinese companies, have been widely criticized for enabling ethnic profiling of Uyghur Muslims.

3.3 Digital Erasure of Minority Cultures

When local languages, traditions, and cultural identities are absent from datasets, they risk being digitally erased. Younger generations interacting primarily with biased AI systems may internalize Western norms, leading to a gradual erosion of cultural diversity.

3.4 Health and Humanitarian Consequences

In healthcare, diagnostic AI systems trained on Western medical datasets often fail when applied to non-Western populations. For example, dermatology algorithms trained on lighter skin tones may not detect diseases on darker skin. Similarly, disaster prediction models that ignore local environmental knowledge may deliver inaccurate forecasts, costing lives in vulnerable communities.

4. Toward Solutions: Addressing Algorithmic Bias in Non-Western Contexts

4.1 Diversifying Datasets

Efforts must be made to include non-Western languages, images, and contexts in global datasets. Projects like Masakhane (for African NLP) and IndicNLP (for South Asian languages) are pioneering initiatives, but they require more international support and funding.
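
Collecting new data is the real fix. However, teams training multilingual models also commonly rebalance the data they already have. One widely used scheme samples each language i with probability proportional to q_i^α. Here q_i is that language's share of the corpus and α lies between 0 and 1. With α = 1 the raw proportions are kept, and smaller values shift probability toward low-resource languages. The sketch below is a minimal illustration; the α value of 0.3 and the corpus sizes are assumptions chosen for illustration.

```python
def sampling_probs(counts, alpha=0.3):
    """Temperature-smoothed sampling probability for each language.

    counts: training examples (or tokens) available per language.
    alpha:  1.0 reproduces the raw proportions; values below 1 flatten
            the distribution so low-resource languages are drawn more often.
    """
    total = sum(counts.values())
    weights = {lang: (n / total) ** alpha for lang, n in counts.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


# Invented corpus sizes (number of sentences per language).
counts = {"en": 1_000_000, "hi": 10_000, "sw": 1_000}

raw = sampling_probs(counts, alpha=1.0)
smoothed = sampling_probs(counts, alpha=0.3)
for lang in counts:
    print(f"{lang}: raw {raw[lang]:.2%} -> smoothed {smoothed[lang]:.2%}")
```

Rebalancing only repeats examples that already exist, so heavy upsampling of a tiny corpus risks overfitting to it. For that reason it complements, rather than replaces, the data-collection work of projects like Masakhane and IndicNLP.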

4.2 Participatory AI Design

Non-Western communities should not just be subjects of AI but active participants in its design. Community-based data collection ensures local realities are represented, while participatory governance models hold corporations accountable.

4.3 Policy and Regulation

Countries in the Global South need to develop AI ethics frameworks tailored to their unique cultural and social contexts. While the EU has adopted its AI Act, most non-Western states lag behind in regulatory structures, leaving them vulnerable to exploitative technologies.

4.4 Decolonizing AI

A deeper structural change involves “decolonizing AI”—challenging Western dominance over knowledge systems, promoting local innovation, and recognizing diverse epistemologies. This means valuing Indigenous, African, Asian, and Latin American perspectives in AI development and ensuring they shape the narrative of future technologies.

Artificial Intelligence is often seen as a neutral tool built on mathematics and logic. In reality, it reflects the social, cultural, and political biases embedded in the data that feeds it. In non-Western contexts this problem takes on deeper and more damaging forms, because most AI systems are trained on datasets created in the Global North. Those datasets are shaped by Western-centric perspectives and heavily skewed toward English and European languages, images, and categories of knowledge. When these systems are deployed in Asia, Africa, Latin America, or Indigenous communities, they often fail to recognize local realities, cultural norms, and linguistic diversity.

This phenomenon has been described as “data colonialism”: a continuation of historical power imbalances in which Western corporations and research institutions dominate global data flows and impose their own models of classification. Meanwhile, non-Western societies are relegated to passive users of technologies that were not designed for them. The results can be exclusionary or even harmful. Natural language processing tools produce poor translations for South Asian and African languages, and sentiment analysis systems misinterpret cultural metaphors or sarcasm. Facial recognition algorithms disproportionately misidentify people with darker skin, leading to wrongful arrests or denial of services.

These problems are compounded by the gross underrepresentation of non-Western voices, histories, and epistemologies in large-scale AI corpora. The resulting digital systems not only misunderstand cultural diversity but actively erase it. For example, gender recognition systems trained on binary Western categories fail to account for third-gender communities such as the Hijra in South Asia or Indigenous two-spirit identities. Similarly, healthcare AI systems trained primarily on Western patients struggle to diagnose conditions in populations with darker skin or different genetic markers.

Beyond technical accuracy, algorithmic bias in non-Western societies reinforces economic and political inequalities. It excludes people from jobs when automated résumé screeners undervalue non-Western naming conventions or educational institutions. It denies loans to millions without Western-style financial histories when credit-scoring algorithms are applied. It also strengthens authoritarian surveillance when facial recognition and predictive policing systems are deployed against minority groups, as in the case of Uyghur Muslims in Xinjiang, where AI has been weaponized to enforce political repression.

Algorithmic bias can also cause digital erasure. Younger generations interacting with AI systems trained on Western content may internalize Western cultural norms and lose touch with their own traditions and languages. At the same time, Indigenous ecological knowledge and community practices that could enrich scientific understanding of issues like climate change remain invisible to algorithmic models. What emerges is a double exclusion: non-Western societies are denied fair access to technology, and their cultural knowledge is excluded from shaping the global digital future.

Solving this problem requires a multifaceted approach that goes beyond superficial fixes. The first step is diversifying datasets so that non-Western languages, faces, and contexts are properly represented. Projects like Masakhane for African NLP and IndicNLP for South Asian languages are pioneering this work, though they remain underfunded compared with Western AI initiatives. Equally important is participatory AI design, which involves local communities in creating datasets and models rather than treating them as mere data sources. Inclusion at the design stage helps technologies reflect the social realities and values on the ground.

At the policy level, non-Western nations need their own AI ethics frameworks and regulatory structures to safeguard citizens, rather than relying on Western models like the EU AI Act that may not fit local contexts. Currently, many countries in the Global South lack robust digital governance. This leaves them vulnerable to exploitative technologies and deepens their dependency on Western corporations.

More fundamentally, scholars and activists argue for the “decolonization of AI”. This means more than diversifying datasets or regulating companies. It also means challenging the dominance of Western epistemologies in defining what counts as knowledge, science, and technological progress, and elevating Indigenous, African, Asian, and Latin American perspectives in building AI systems. The danger is not simply that algorithms may misclassify people or mistranslate languages, but that entire worldviews and cultural systems are marginalized in the digital future. Algorithmic bias in non-Western contexts is therefore not just a technical flaw but a systemic issue rooted in global power imbalances. It will continue to perpetuate economic exclusion, political repression, and cultural erasure unless it is actively addressed through inclusive design, localized innovation, ethical governance, and recognition of diverse human experiences as equally valid contributions to AI knowledge and practice.

Artificial Intelligence (AI) is often portrayed as a neutral, objective tool built on mathematics, logic, and large-scale data processing. Yet it is deeply shaped by the social, cultural, and political biases embedded in its training data. These biases become especially pronounced in non-Western contexts, where most AI systems rely on datasets and models created in the Global North that reflect Western-centric languages, images, and categories of knowledge. When such systems are applied across Africa, Asia, Latin America, or Indigenous territories, they frequently fail to recognize local cultural norms, linguistic diversity, and social realities. The resulting outputs can be inaccurate, exclusionary, or even harmful.

This is part of what scholars term “data colonialism”: a modern extension of historical power imbalances in which Western corporations, academic institutions, and technology developers dominate the collection, classification, and monetization of data from non-Western societies. Those societies have little say in how their information is used or represented, which leads to technologies that primarily serve Western interests and priorities.

Natural language processing (NLP) shows this clearly. Machine translation, sentiment analysis, and speech recognition models are overwhelmingly trained on English and other major European languages, leaving hundreds of millions of non-Western speakers underrepresented. As a result, tools like Google Translate may mistranslate languages such as Hindi, Bengali, Swahili, or Yoruba. Sentiment analysis, for its part, fails to account for local idioms, sarcasm, or culturally specific emotional expressions, further marginalizing communities and reinforcing the digital divide.

A similar problem exists in image and video recognition. Face datasets such as LFW are dominated by images of white individuals, and general image datasets such as ImageNet draw disproportionately on North American and European images. Partly as a result, facial recognition technologies misidentify darker-skinned people more often, disproportionately affecting African, South Asian, and Indigenous populations through wrongful arrests, surveillance targeting, or denial of services. When these systems are used in law enforcement or government programs in countries with weak regulatory frameworks, such errors can deepen social inequities rather than mitigate them.

Beyond technical misclassification, algorithmic bias also manifests as cultural misrepresentation. AI systems trained on Western norms often fail to accommodate non-Western concepts of identity, gender, and social behavior. Gender recognition algorithms that assume binary classifications cannot account for the Hijra communities in South Asia, Indigenous two-spirit identities in North America, or other culturally recognized third-gender categories, which leads to the erasure or misidentification of entire communities. Many non-Western epistemologies, traditions, and knowledge systems are also largely invisible to global AI datasets. As a result, Indigenous ecological knowledge, community-based disaster management practices, or alternative approaches to healthcare may never be incorporated into AI models. Solutions to problems like climate adaptation, resource allocation, or medical diagnostics then ignore locally relevant insights and reinforce a narrow Western scientific paradigm.

The consequences of these biases are extensive, spanning social, economic, political, and health domains. Economically, automated hiring systems trained on Western educational and occupational norms may undervalue candidates with local degrees, non-English résumés, or culturally specific experience, excluding them from employment. Credit-scoring algorithms that rely on Western-style financial histories may deny loans to millions who lack formal banking documentation, entrenching financial inequality.

Politically, biased AI can reinforce authoritarian control, as in surveillance programs that apply facial recognition or predictive policing to already marginalized minorities. In Xinjiang, China, surveillance technologies developed largely by Chinese companies have been used to profile Uyghur Muslims, monitor religious practices, and restrict freedoms. This shows that algorithmic bias is not merely a technical issue but a tool that can amplify systemic oppression.

In healthcare, diagnostic algorithms trained primarily on data from Western patients struggle to identify diseases on darker skin or to predict outcomes in populations with different genetic markers. Disaster response and predictive modeling systems may also underperform in non-Western regions when local environmental or social knowledge is excluded, increasing vulnerability during crises.

The digital erasure of minority cultures is another insidious outcome. Young users interacting primarily with AI systems designed around Western norms may gradually internalize those norms. Over time this can lead to the loss of language, cultural practices, and historical knowledge, undermining cultural preservation and diversity.

Addressing these challenges requires a multifaceted and localized approach. It begins with diversifying datasets to include non-Western languages, images, cultural content, and social realities. Initiatives such as Masakhane, which focuses on African NLP, and IndicNLP, which develops resources for South Asian languages, are doing this work, though they need more international support, resources, and integration into global AI research. Participatory AI design is equally critical. Involving local communities directly in data collection, model development, and evaluation helps systems reflect lived experiences and cultural norms rather than imposing Western categories on non-Western societies.

Regulatory frameworks tailored to local contexts are needed to ensure ethical AI deployment, protect citizens’ rights, and prevent exploitative technologies. Relying on Western legislation like the EU AI Act is insufficient for countries with distinct socio-cultural realities, limited technological infrastructure, and different governance structures. At a deeper level, scholars advocate decolonizing AI. This involves challenging the dominance of Western epistemologies in defining what counts as knowledge and technological progress, and valuing Indigenous, African, Asian, and Latin American perspectives in the construction of datasets, algorithms, and AI-driven solutions. The aim is a more inclusive, pluralistic approach to technology that respects global diversity rather than perpetuating historical inequities.

Ultimately, algorithmic bias in non-Western contexts is not just a technical problem but a reflection of structural inequalities. If left unaddressed, it can exacerbate social exclusion, political repression, economic disparities, and cultural erasure. Through inclusive data practices, participatory design, local governance, and acknowledgment of diverse epistemologies, however, AI can be reshaped to serve many human experiences equitably. It can then move away from being a tool of digital colonialism toward becoming a platform for cross-cultural empowerment, justice, and innovation. That shift would help ensure that the benefits of AI are shared widely rather than reinforcing the global hierarchies that have historically marginalized non-Western societies.

Conclusion

As AI becomes increasingly embedded in global decision-making, the risks of algorithmic bias in non-Western contexts cannot be ignored. While Western debates often focus on fairness and transparency, non-Western contexts demand broader questions of justice, sovereignty, and cultural survival. The future of AI must be pluralistic, inclusive, and reflective of diverse human experiences. Otherwise, technology will only replicate the inequalities of the past.

Q&A Section

Q1: What is algorithmic bias, and why is it worse in non-Western contexts?

Ans: Algorithmic bias refers to systematic errors in AI that disadvantage certain groups due to flawed data or design. In non-Western contexts, it is worse because datasets are often Western-centric, underrepresenting local languages, cultures, and communities.

Q2: How does data colonialism contribute to bias?

Ans: Data colonialism occurs when Western corporations dominate the collection, classification, and exploitation of global data, ignoring non-Western perspectives. This creates technologies that serve Western interests while marginalizing others.

Q3: What are examples of consequences in non-Western societies?

Ans: Consequences include facial recognition misidentifying African or Asian individuals, healthcare AI misdiagnosing diseases on darker skin, exclusion from financial services, and the digital erasure of minority cultures.

Q4: Can algorithmic bias affect politics in non-Western nations?

Ans: Yes. Predictive policing and facial recognition systems are often deployed in authoritarian contexts, disproportionately targeting minorities and enabling state surveillance.

Q5: What solutions can reduce algorithmic bias in non-Western contexts?

Ans: Solutions include diversifying datasets, designing AI with community participation, implementing local AI regulations, and decolonizing AI by valuing non-Western knowledge systems.

Similar Articles

Find more relatable content in similar Articles

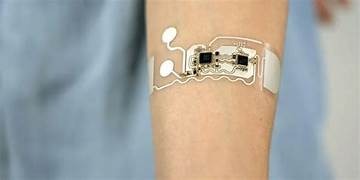

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

Data Centers and the Planet: M..

As cloud computing becomes the.. Read More

Digital DNA: The Ethics of Gen..

Digital DNA—the digitization a.. Read More

Protecting Kids in the Digital..

In an increasingly connected w.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.