Voice Emotion Analysis: Can Technology Really Hear How We Feel?

Voice Emotion Analysis leverages AI and acoustic science to detect human feelings from speech, opening new opportunities in mental health, customer service, and human-computer interaction. While it can recognize basic emotions, subtle cues remain a challenge, and accuracy depends on context and cultural factors.

Raghav Jain

Introduction

Our voice is more than just words—it carries emotion, intention, and subtle cues that reveal how we truly feel. From excitement to sadness, anger to calm, these emotional signals are embedded in pitch, tone, pace, and volume. But in today’s digital world, technology is learning to detect these emotions automatically through voice emotion analysis.

Voice emotion analysis, sometimes called speech emotion recognition, uses artificial intelligence (AI), machine learning, and acoustic analysis to “read” emotional states from speech. Companies, healthcare providers, and researchers are exploring its potential to improve mental health, customer service, security, and even human-computer interaction.

In this article, we’ll explore how voice emotion analysis works, its applications, limitations, ethical considerations, and practical implications. We’ll also discuss how this technology could shape the future of communication and emotional understanding.

Voice is one of the most intimate expressions of human experience. From a casual “hello” to an impassioned speech, the tone, pitch, and rhythm of our voice convey much more than the words themselves. Researchers have long known that emotions such as joy, anger, fear, and sadness manifest subtly in the way we speak. What was once an abstract observation is now becoming a field of technological innovation: voice emotion analysis. But can technology truly understand how we feel, or are we oversimplifying the complexities of human emotion?

Voice emotion analysis refers to the use of artificial intelligence (AI) and machine learning to detect emotional states from speech. By analyzing features such as pitch variation, speech rate, volume, and intonation, algorithms attempt to classify emotions into categories like happiness, sadness, anger, or anxiety. This technology has applications in customer service, healthcare, security, education, and even personal wellness. Companies envision systems that can gauge customer satisfaction, detect stress levels, or provide emotional support based on vocal cues. The potential is vast, but the challenges are equally formidable.

One reason voice emotion analysis is appealing is that our voice is an unobtrusive marker of emotion. Unlike facial expressions, which require visibility, or physiological signals, which may require wearable devices, speech is often available in real time. AI models can process recordings and deliver insights quickly. For example, call centers can use emotion detection to identify frustrated customers, prompting agents to adjust their approach. Similarly, mental health applications can monitor changes in a person’s emotional tone over time, potentially offering early warning signs of depression or anxiety. In both cases, voice analysis offers a continuous, passive means of emotional assessment that needs no explicit input from the individual.

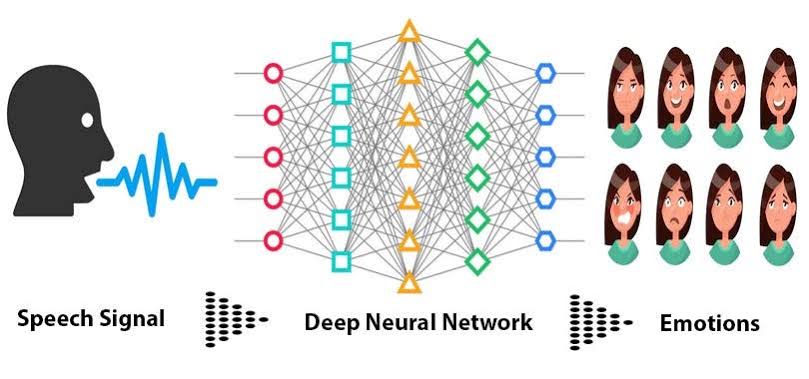

The science behind voice emotion analysis relies on understanding how emotions influence vocal cords and speech production. When a person is stressed or angry, their voice may become higher-pitched and faster, with sharper intonation. Sadness often slows speech and lowers pitch, while joy might produce a lighter, more varied tone. Machine learning algorithms are trained on large datasets of annotated speech, where human experts label audio clips according to perceived emotion. The algorithms then learn patterns that correlate vocal features with emotional labels, theoretically enabling them to classify emotions in new, unlabeled audio.
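The training idea described above can be sketched with a deliberately tiny classifier. This is only an illustration, assuming each clip has already been reduced to two hypothetical features (mean pitch in Hz and speech rate in syllables per second). The feature values, labels, and scaling constants are invented for the example and do not come from any real dataset; real systems use far richer features and models.

```python
import math
from collections import defaultdict

# Hypothetical labeled clips: (mean_pitch_hz, syllables_per_second) -> label.
# All numbers are invented for illustration only.
TRAINING_CLIPS = [
    ((220.0, 5.5), "anger"),
    ((230.0, 6.0), "anger"),
    ((140.0, 2.5), "sadness"),
    ((150.0, 3.0), "sadness"),
    ((200.0, 4.5), "joy"),
    ((190.0, 4.0), "joy"),
]


def train_centroids(clips):
    """Average the feature vectors of each label into one centroid per emotion."""
    grouped = defaultdict(list)
    for features, label in clips:
        grouped[label].append(features)
    centroids = {}
    for label, vectors in grouped.items():
        dims = len(vectors[0])
        centroids[label] = tuple(
            sum(v[d] for v in vectors) / len(vectors) for d in range(dims)
        )
    return centroids


def classify(features, centroids, scales=(50.0, 2.0)):
    """Return the label whose centroid is nearest after rough per-feature scaling."""
    def distance(a, b):
        return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, scales)))
    return min(centroids, key=lambda label: distance(features, centroids[label]))


centroids = train_centroids(TRAINING_CLIPS)
print(classify((225.0, 5.8), centroids))  # high pitch, fast speech
print(classify((145.0, 2.6), centroids))  # low pitch, slow speech
```

A nearest-centroid rule is used here only because it is easy to read. It stands in for the neural networks and other models that production systems rely on.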

Despite the advances, the accuracy of these systems is far from perfect. Human emotion is nuanced and context-dependent. The same vocal feature can signal different emotions depending on cultural background, individual personality, or situational context. For instance, a raised pitch might indicate excitement in one context and fear in another. Similarly, some people naturally speak in a monotone voice regardless of their feelings, which can mislead algorithms. These limitations reveal a fundamental challenge: while technology can detect patterns, it cannot fully grasp the subjective, layered experience of emotion.

Bias is another concern in voice emotion analysis. Most AI systems rely on datasets that are limited in terms of language, accent, age, and gender diversity. If the training data predominantly reflects one demographic, the system may misinterpret the voices of others, leading to unfair or inaccurate outcomes. For example, research has shown that emotion detection algorithms trained on adult voices often perform poorly on children’s speech. Addressing these biases requires collecting diverse datasets, applying fairness-aware machine learning methods, and continuously testing systems across multiple populations.
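One simple way to test a system across populations is to report accuracy per speaker group rather than as a single overall number. The sketch below assumes a hypothetical list of evaluation records; the groups, labels, and predictions are invented for illustration.

```python
from collections import defaultdict

# Hypothetical evaluation records: (speaker_group, true_label, predicted_label).
RESULTS = [
    ("adult", "anger", "anger"),
    ("adult", "joy", "joy"),
    ("adult", "sadness", "sadness"),
    ("adult", "joy", "anger"),
    ("child", "joy", "anger"),
    ("child", "sadness", "sadness"),
    ("child", "anger", "joy"),
    ("child", "joy", "fear"),
]


def accuracy_by_group(records):
    """Compute the fraction of correct predictions separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


per_group = accuracy_by_group(RESULTS)
print(per_group)
print("gap:", accuracy_gap(per_group))
```

A large gap between groups is a signal that the training data or the model needs attention, even when the overall accuracy looks acceptable.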

Ethical questions also arise when technology can monitor emotions. Continuous emotion detection could be used for surveillance, manipulative marketing, or workplace monitoring in ways that infringe on personal privacy. Imagine a scenario where employers track the stress levels of employees without consent or a system that targets vulnerable individuals with emotionally charged advertising. While voice emotion analysis can have therapeutic or supportive applications, its misuse could exacerbate concerns about data privacy and consent. Therefore, regulatory frameworks and clear ethical guidelines are critical if this technology is to be deployed responsibly.

Despite these challenges, ongoing research continues to improve the sophistication of voice emotion analysis. Multimodal approaches, which combine speech with facial expressions, body language, and physiological signals, aim to create a more holistic understanding of emotion. Advances in deep learning, particularly in recurrent neural networks and transformer architectures, have enhanced the ability to capture temporal patterns in speech, making emotion recognition more accurate. Moreover, real-time processing and cloud computing have made it feasible to implement emotion analysis in everyday applications, from virtual assistants to telehealth platforms.
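As a rough illustration of the multimodal idea, the sketch below combines per-modality emotion probabilities with a weighted average, a technique often called late fusion. The modality names, probabilities, and weights are assumptions made up for this example; real systems typically learn how to combine modalities rather than using fixed weights.

```python
def late_fusion(modal_probs, weights):
    """Weighted average of per-modality probability distributions."""
    labels = sorted(set().union(*(probs.keys() for probs in modal_probs.values())))
    total_weight = sum(weights[name] for name in modal_probs)
    return {
        label: sum(
            weights[name] * probs.get(label, 0.0)
            for name, probs in modal_probs.items()
        ) / total_weight
        for label in labels
    }


# Hypothetical model outputs: the voice alone cannot tell excitement from fear,
# but the facial-expression model leans clearly toward excitement.
modal_probs = {
    "voice": {"excitement": 0.5, "fear": 0.5},
    "face": {"excitement": 0.9, "fear": 0.1},
}
weights = {"voice": 0.5, "face": 0.5}

fused = late_fusion(modal_probs, weights)
print(fused)
print(max(fused, key=fused.get))
```

Here the second modality resolves an ambiguity that a raised pitch alone leaves open.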

The question remains, however, whether machines can truly “understand” emotion or merely simulate understanding. Emotions are inherently subjective, deeply rooted in personal experience, memory, and context. While AI can identify patterns and correlate them with labels, it does not experience emotion. This distinction is crucial because interpreting emotional data requires empathy, judgment, and cultural awareness—qualities that remain uniquely human. Technology can assist and augment our understanding, but it cannot replace the human insight that often guides emotional interpretation.

In conclusion, voice emotion analysis represents a fascinating intersection of technology and human psychology. It offers promising applications in healthcare, customer service, education, and personal wellness, providing insights that were once inaccessible. Yet, its limitations and ethical implications remind us that emotions are not simply patterns to be decoded. While technology can detect signals of how we feel, it cannot fully comprehend the richness and depth of human experience. As voice emotion analysis continues to evolve, it must be developed thoughtfully, combining scientific rigor with ethical consideration, to enhance human life without compromising the very qualities that make us human.

Understanding Voice Emotion Analysis

Voice emotion analysis works by detecting patterns in speech that correspond to different emotions. These patterns can include:

- Pitch: Higher pitch may indicate excitement or fear, while lower pitch often signals sadness or calm.

- Tone: Warm, soft, or harsh tones reveal underlying mood and attitude.

- Speech Rate: Fast speech can indicate stress, nervousness, or excitement; slow speech may signal sadness or fatigue.

- Volume: Loudness can reflect anger or urgency; whispering might indicate secrecy or discomfort.

- Pauses and Rhythm: Hesitations or irregular speech rhythms often reveal uncertainty or anxiety.

AI systems process these signals using algorithms trained on large datasets of voice recordings labeled with emotional states. They typically sort speech into basic categories such as happiness, sadness, anger, fear, disgust, or surprise, and some also attempt to detect more nuanced states like boredom or stress.
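Three of the cues listed above (pitch, volume, and pauses) can be measured with a few lines of code. The sketch below is a simplified illustration that uses a synthetic pure tone in place of a real voice recording; the sample rate, frame length, and silence threshold are assumptions chosen for the example. Real speech is much messier and calls for more robust pitch tracking.

```python
import math

SAMPLE_RATE = 8000  # samples per second; an assumption for this sketch


def sine_wave(freq_hz, seconds, amplitude=0.5):
    """Generate a pure tone as a stand-in for a voiced speech segment."""
    n = round(SAMPLE_RATE * seconds)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        for i in range(n)
    ]


def rms(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(samples, min_hz=75, max_hz=400):
    """Estimate the fundamental frequency from the strongest autocorrelation lag."""
    min_lag = SAMPLE_RATE // max_hz
    max_lag = SAMPLE_RATE // min_hz
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return SAMPLE_RATE / best_lag


def pause_ratio(samples, frame_len=200, threshold=0.02):
    """Fraction of fixed-length frames whose loudness falls below a silence threshold."""
    frames = [
        samples[i:i + frame_len]
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]
    quiet = sum(1 for frame in frames if rms(frame) < threshold)
    return quiet / len(frames)


voiced = sine_wave(200, 0.1)            # 0.1 s of a 200 Hz tone
signal = voiced + [0.0] * 400 + voiced  # tone, short pause, tone

print("pitch (Hz):", round(estimate_pitch(voiced)))
print("loudness (RMS):", round(rms(voiced), 3))
print("pause ratio:", pause_ratio(signal))
```

Numbers like these, computed over many short frames, are the kind of raw material an emotion classifier works from.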

Why Voice Emotion Matters

Humans naturally use vocal cues to understand feelings. But technology can help amplify this understanding:

Healthcare Applications:

Voice emotion analysis can detect stress, depression, or anxiety by analyzing speech patterns during calls or therapy sessions. Early detection allows timely intervention.

Customer Service:

Call centers can use emotion detection to gauge customer satisfaction or frustration, allowing agents to respond more empathetically.

Human-Computer Interaction:

Virtual assistants, chatbots, and AI companions can adjust responses based on detected emotions, creating more natural and personalized interactions.

Security and Forensics:

Voice analysis has been explored as an aid for lie detection, identifying suspicious behavior, or analyzing emotional states in high-stress situations, although inferring deception from voice alone remains contested.

Education and Training:

Teachers or online learning platforms can gauge student engagement or confusion through speech analysis during classes or discussions.

How Technology Reads Emotions

Voice emotion analysis combines acoustic feature extraction and machine learning models:

- Acoustic Features: The software examines speech parameters such as pitch, loudness, tempo, timbre, and spectral qualities.

- Machine Learning Algorithms: Using large datasets, AI learns to associate specific speech patterns with emotional states. Common techniques include support vector machines and deep neural networks.

- Contextual Analysis: Some advanced systems consider linguistic context, detecting words, phrases, and sentence structure to improve accuracy.

- Real-Time Processing: Modern systems can analyze emotions live during conversations, offering instant feedback.

This process allows computers to “hear” emotions with increasing accuracy, though it is still far from perfect.
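For live analysis, a system usually labels short frames of audio one after another, and a single misread frame can make the output flicker. One common remedy, sketched below, is to report the majority label over the last few frames. The frame labels and window size here are invented for illustration, and the per-frame classifier itself is assumed to exist elsewhere.

```python
from collections import Counter, deque


def smooth_stream(frame_labels, window=3):
    """Yield the most frequent label among the most recent `window` frames."""
    recent = deque(maxlen=window)  # old frames drop out automatically
    for label in frame_labels:
        recent.append(label)
        yield Counter(recent).most_common(1)[0][0]


# Hypothetical per-frame predictions arriving in order during a call.
incoming = ["calm", "calm", "anger", "calm", "calm", "anger", "anger", "anger"]

smoothed = list(smooth_stream(incoming, window=3))
print(smoothed)
```

The isolated "anger" frame near the start is ignored, while the sustained run at the end changes the reported emotion. The trade-off is a short delay before a genuine change shows up.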

Limitations and Challenges

While promising, voice emotion analysis faces several challenges:

Cultural and Linguistic Differences:

Emotional expression varies widely across cultures and languages. A tone indicating anger in one culture may be neutral in another.

Individual Variation:

People express emotions differently. One person’s excitement may sound like stress to the system, leading to misinterpretation.
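One way to reduce this kind of misreading is to compare a speaker with their own baseline instead of a universal scale. The sketch below expresses a pitch reading as a z-score relative to that speaker's earlier recordings; the baseline values are invented, and using pitch alone is a simplification for illustration.

```python
import statistics


def pitch_z_score(current_hz, baseline_hz):
    """How many standard deviations the current pitch sits from the speaker's norm.

    Needs at least two baseline readings to estimate the spread.
    """
    mean = statistics.mean(baseline_hz)
    spread = statistics.stdev(baseline_hz)
    if spread == 0:
        return 0.0
    return (current_hz - mean) / spread


# Hypothetical pitch histories (Hz) for two speakers.
monotone_speaker = [118.0, 120.0, 122.0, 119.0, 121.0]    # averages 120 Hz
expressive_speaker = [150.0, 210.0, 180.0, 240.0, 170.0]  # averages 190 Hz

# Both readings are 10 Hz above the speaker's own average.
z_monotone = pitch_z_score(130.0, monotone_speaker)
z_expressive = pitch_z_score(200.0, expressive_speaker)

print(round(z_monotone, 2))    # a large shift for this speaker
print(round(z_expressive, 2))  # well within this speaker's usual range
```

The same 10 Hz rise is a striking change for the monotone speaker and unremarkable for the expressive one, which is why per-speaker baselines can matter.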

Background Noise and Audio Quality:

Poor recording environments, echoes, or low-quality microphones reduce the accuracy of emotion detection.
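Recording quality is often summarized as a signal-to-noise ratio (SNR) in decibels: ten times the base-10 logarithm of speech power divided by noise power. The sketch below assumes a noise-only stretch of audio is available for comparison and uses made-up sample values; how much noise a given emotion detector can tolerate depends on the system.

```python
import math


def power(samples):
    """Average squared amplitude of a list of audio samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels; higher means a cleaner recording."""
    noise_power = power(noise)
    if noise_power == 0:
        return math.inf
    return 10 * math.log10(power(speech) / noise_power)


# Hypothetical samples: speech is ten times the amplitude of the background noise.
speech = [0.5, -0.5] * 100
noise = [0.05, -0.05] * 100

print(round(snr_db(speech, noise), 1))
```

A tenfold difference in amplitude is a hundredfold difference in power, which works out to 20 dB.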

Complex Emotions:

Humans often feel multiple emotions at once. Subtle emotions like sarcasm, irony, or mixed feelings are difficult for AI to detect reliably.

Ethical Concerns:

Voice emotion analysis raises privacy issues. Constant monitoring of conversations could feel intrusive or be misused for manipulation. Transparency, consent, and ethical guidelines are crucial.

Applications in Daily Life

Mental Health Monitoring:

Apps can monitor mood trends over time by analyzing daily speech patterns, helping users track mental well-being.
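A mood trend is usually easier to read once day-to-day noise is smoothed out. The sketch below applies a simple moving average to a week of hypothetical daily mood scores on a 1 to 10 scale; the scores and the three-day window are invented for illustration and do not describe how any particular app works.

```python
def moving_average(values, window):
    """Average each run of `window` consecutive values."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Hypothetical daily mood scores derived from voice entries (1 = low, 10 = high).
daily_scores = [6, 7, 5, 4, 3, 2, 1]

trend = moving_average(daily_scores, window=3)
print([round(value, 2) for value in trend])
print("declining" if trend[-1] < trend[0] else "stable or improving")
```

A steadily falling average is the kind of pattern such an app might flag for the user to reflect on or discuss with a professional.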

Smart Devices:

AI assistants like Alexa or Siri could respond differently when detecting stress or urgency, offering reassurance or adjusting their responses.

Customer Experience:

Businesses can detect frustrated customers early and provide proactive support, reducing negative experiences.

Personal Communication:

Voice analysis could help individuals understand their own emotional patterns, improving communication and self-awareness.

Gaming and Entertainment:

Emotion-sensitive games or interactive stories could adapt based on players’ vocal expressions, creating immersive experiences.

Practical Steps to Engage with Voice Emotion Technology

Even if you’re not a developer, you can experiment with or benefit from voice emotion tools:

Use Mood-Tracking Apps:

Apps with voice input can help monitor emotional health. Speak into the app daily to get trends and suggestions.

Experiment with Virtual Assistants:

Notice how tone and speed affect AI responses. Some systems adjust volume or phrasing based on detected mood.

Educational Tools:

Teachers or students can use software to gauge engagement or stress during oral presentations.

Self-Awareness Exercises:

Record yourself speaking on different topics and play back to identify emotional cues in your own voice.

Caregiver Monitoring:

Voice emotion tech is being explored to detect distress or discomfort in people who cannot easily put their feelings into words, such as toddlers or some elderly patients.

Daily Practices to Understand and Use Voice Emotion

Morning Routine

- Record a short voice diary reflecting on feelings

- Notice pitch and tone variations

- Play back recording to increase emotional self-awareness

Midday Routine

- Engage with voice-based AI apps

- Experiment with tone, pace, and volume

- Observe AI response changes and insights

Evening Reflection

- Reflect on conversations of the day

- Note moments of frustration, excitement, or calm

- Consider using apps for tracking patterns over time

Weekly Practices

- Test different voice emotion apps for accuracy

- Practice speaking slowly and mindfully to express emotion clearly

- Record long conversations (with the consent of everyone involved) to analyze emotional consistency

- Use insights for better communication in personal and professional life

Common Misconceptions About Voice Emotion Analysis

“AI can read my mind through my voice.”

→ False. Technology analyzes vocal patterns, not thoughts. It detects probable emotions based on acoustic cues.

“Voice emotion analysis is 100% accurate.”

→ Not true. Accuracy depends on recording quality, context, and the system used.

“It’s only for tech experts or researchers.”

→ Absolutely not. Many apps and tools are accessible to anyone for self-awareness, customer service, or healthcare monitoring.

“It replaces human intuition.”

→ False. It complements human understanding but cannot fully replace empathy and judgment.

The Future of Voice Emotion Technology

Experts predict that voice emotion analysis will continue to evolve:

- Integration with Wearables: Smart earbuds or wrist devices could detect stress or fatigue via vocal cues in real time.

- Mental Health Interventions: Therapy apps could use voice signals to suggest meditation, breathing exercises, or cognitive behavioral tips.

- Enhanced AI Companions: Virtual assistants and social robots may respond with empathy, making digital interactions feel more human.

- Security and Fraud Detection: Emotional cues could aid in detecting deception or suspicious behavior in high-risk environments.

While exciting, these developments require strong ethics, transparency, and user consent to prevent misuse.

Conclusion

Voice emotion analysis shows us that our feelings are audible—not just invisible internal states. Technology can detect stress, joy, anger, or sadness through our vocal patterns, offering powerful tools for healthcare, communication, education, and customer service.

However, it’s not perfect. Cultural differences, individual variations, and subtle emotions challenge AI systems. Privacy, consent, and ethical guidelines are essential to ensure this technology benefits society rather than intrudes on it.

By becoming aware of our own vocal patterns, experimenting with AI tools, and using these insights responsibly, we can improve communication, emotional intelligence, and even mental well-being.

Next time you speak, remember: your voice carries more than words—it carries your emotions. And soon, technology may be listening, learning, and helping you understand them better.

Speak with awareness. Observe emotions. Embrace the technology responsibly.

Q&A Section

Q1:- What is Voice Emotion Analysis (VEA)?

Ans :- VEA is a technology that uses AI and signal processing to detect human emotions from vocal cues like tone, pitch, speed, and volume, providing insights into feelings beyond spoken words.

Q2:- How does VEA actually “hear” emotions?

Ans :- By analyzing variations in speech patterns, intonation, and rhythm, VEA identifies emotional markers, mapping them to categories such as happiness, sadness, anger, or stress.

Q3:- What technologies power voice emotion recognition?

Ans :- AI, machine learning, natural language processing, and acoustic signal analysis work together to process voice data and classify emotional states, though accuracy varies by system and context.

Q4:- Can VEA distinguish between subtle emotions like sarcasm or nervousness?

Ans :- Modern systems can detect some subtle cues, but recognizing complex emotions like sarcasm remains challenging due to cultural differences and context dependence.

Q5:- Where is voice emotion analysis used today?

Ans :- VEA is applied in call centers, mental health apps, virtual assistants, market research, security monitoring, and customer experience enhancement.

Q6:- Is VEA always accurate in detecting emotions?

Ans :- Accuracy varies with language, accent, environment, and vocal nuances. While technology is improving, misinterpretation of emotions is still possible.

Q7:- How does VEA benefit mental health and therapy?

Ans :- It provides therapists with objective insights into patients’ emotional states, enabling early stress detection, monitoring therapy progress, and supporting personalized care.

Q8:- Can voice emotion analysis impact workplace or customer experiences?

Ans :- Yes, it can help managers gauge employee morale, improve training, or personalize customer interactions, leading to more empathetic and effective engagement.

Q9:- Are there privacy concerns with VEA?

Ans :- Yes, analyzing personal voice data raises ethical issues, including consent, misuse, surveillance risks, and potential emotional profiling without awareness.

Q10:- What is the future of voice emotion analysis?

Ans :- VEA is likely to integrate more deeply with AI assistants, healthcare devices, smart home systems, and human-computer interaction, becoming more accurate and context-aware.

© 2025 Copyrights by rTechnology. All Rights Reserved.