Low-resource AI: making powerful models work on weak devices (e.g. rural settings, IoT sensors).

"Exploring Low-Resource AI: Techniques, Applications, and Future Directions for Deploying Powerful Artificial Intelligence Models on Devices with Limited Memory, Processing Power, Energy, and Connectivity, Enabling Rural Communities, IoT Sensors, and Edge Devices to Access Smart, Efficient, and Real-Time AI Solutions for Agriculture, Healthcare, Environmental Monitoring, Education, and Industrial Applications While Bridging the Digital Divide Globally."

✨ Raghav Jain


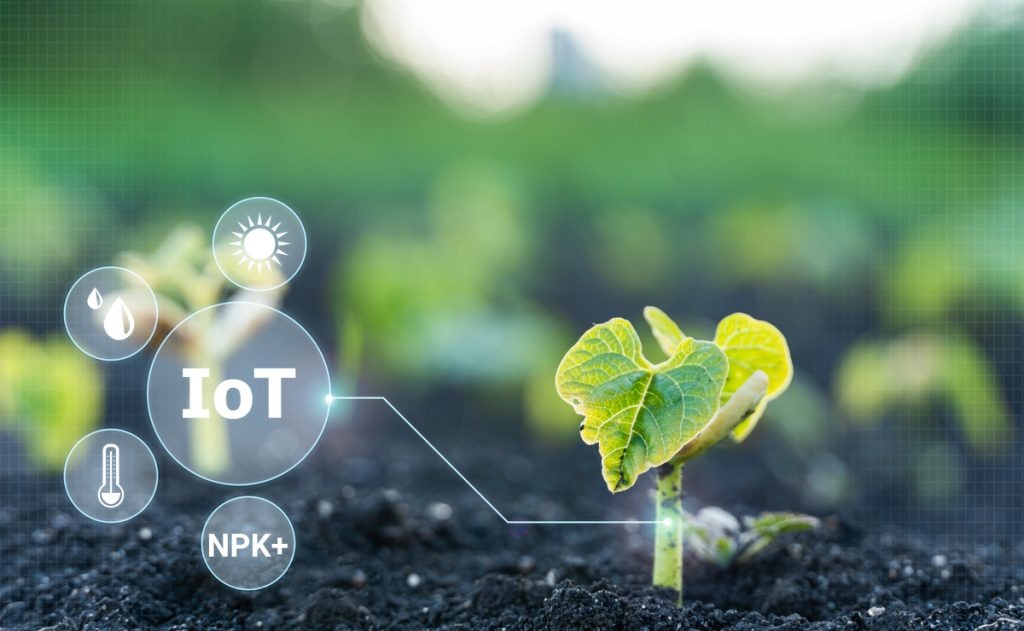

Artificial Intelligence (AI) is no longer confined to advanced laboratories or urban technology hubs. It is quickly spreading across industries, geographies, and social boundaries, shaping everything from education to healthcare and agriculture. Yet, the widespread adoption of AI is often challenged by the computational demands of advanced models. Deep learning systems, large language models, and advanced vision networks require massive amounts of data and compute power—resources that are simply not available in low-resource environments. These environments include rural communities with limited internet bandwidth, inexpensive IoT devices with restricted processing capacity, and energy-constrained edge devices like environmental sensors, wearables, or drones.

The challenge is clear: How do we make powerful AI models accessible and functional on weak devices? This is where the domain of low-resource AI comes in. It focuses on designing, compressing, and optimizing AI systems so that they can run efficiently with limited memory, computation, power, or connectivity. In this article, we will explore the principles, techniques, real-world applications, and future outlook of low-resource AI, with a special focus on rural settings and IoT ecosystems.

1. The Challenge of Low-Resource AI

Many state-of-the-art AI models today, such as GPT-based language systems or large vision transformers, have hundreds of millions to billions of parameters and are typically trained and run on GPUs or TPUs. Running such models on devices with only a few megabytes of RAM or limited battery capacity is virtually impossible without substantial adaptation. The key barriers include:

- Hardware Constraints: IoT sensors, low-cost smartphones, and embedded systems typically lack dedicated GPUs. They may only have kilobytes to megabytes of memory and limited processing cycles.

- Connectivity Gaps: Rural settings often suffer from low or intermittent internet access, making cloud-based AI services unreliable.

- Energy Efficiency: In regions where electricity supply is unstable, or for devices that must operate for months on a single battery, energy-hungry AI algorithms are unsustainable.

- Data Scarcity: Many rural applications do not have access to large, labeled datasets needed to train AI models effectively.

Overcoming these challenges requires rethinking AI architectures and deployment strategies so that models can operate efficiently in constrained environments without losing much accuracy or usefulness.
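A quick calculation shows why adaptation is unavoidable. The sketch below estimates weight storage as parameter count times bytes per parameter. It assumes weights dominate memory (activations and runtime overhead are ignored), and the 1-billion-parameter model and 1 MB budget are illustrative figures, not measurements of any particular device.

```python
# Back-of-the-envelope weight-storage estimate.
# Assumption: only weights are counted; activations and runtime overhead are ignored.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}

def weight_bytes(num_params, dtype="float32"):
    """Approximate storage for the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype]

def fits(num_params, dtype, budget_bytes):
    """True if the weights alone fit in the device's memory budget."""
    return weight_bytes(num_params, dtype) <= budget_bytes

if __name__ == "__main__":
    one_mb = 1024 * 1024  # illustrative microcontroller memory budget
    for params in (1_000_000_000, 250_000):  # a 1B-parameter model vs. a 250k-parameter model
        for dtype in ("float32", "int8"):
            size_mb = weight_bytes(params, dtype) / one_mb
            print(f"{params:>13,} params as {dtype:<7}: {size_mb:9.2f} MB, "
                  f"fits in 1 MB: {fits(params, dtype, one_mb)}")
```

Even at 8 bits, a 1-billion-parameter model needs roughly 1 GB for its weights alone, nearly a thousand times a 1 MB microcontroller budget, so compression has to be combined with much smaller architectures.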

2. Techniques to Enable Low-Resource AI

A variety of techniques have emerged to adapt powerful AI systems for weak devices. These techniques balance the trade-off between accuracy, efficiency, and resource utilization.

2.1 Model Compression

- Pruning: Removing redundant or low-importance weights and connections from a neural network to reduce model size, usually followed by fine-tuning so that accuracy stays close to the original.

- Quantization: Representing weights and activations with lower precision (e.g., 8-bit integers instead of 32-bit floats). This cuts weight storage by about 4× and, on hardware with fast integer arithmetic, also speeds up inference.

- Knowledge Distillation: Training a smaller "student" model to mimic the predictions of a larger "teacher" model, thereby retaining most of the performance with fewer parameters.
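The three compression ideas above can be illustrated on a flat list of weights. This is a minimal pure-Python sketch, not any framework's implementation. It assumes unstructured magnitude pruning, symmetric per-tensor int8 quantization, and a distillation loss made up only of the temperature-softened KL term (the usual hard-label term and T² scaling are omitted). All function names are hypothetical.

```python
import math

def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # how many weights to remove
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each weight w is stored as an integer q with w ≈ q * scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against an all-zero tensor
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between the temperature-softened output distributions."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(p_t, p_s))

if __name__ == "__main__":
    w = [0.5, -0.05, 0.3, 0.01, -0.8]
    print("pruned:", magnitude_prune(w, 0.4))
    q, scale = quantize_int8(w)
    print("int8:", q, "scale:", scale)
    print("restored:", [round(v, 3) for v in dequantize(q, scale)])
    print("KD loss:", distillation_loss([1.0, 2.0, 0.5], [1.2, 2.5, 0.1]))
```

Storing the int8 values plus one float scale takes about a quarter of the memory of float32 weights. Pruned weights, by contrast, only save memory or time when the runtime stores them in a sparse format or the hardware can skip zeros.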

2.2 Edge AI and TinyML

- TinyML refers to machine learning models optimized for microcontrollers and sensors. They can run on devices with less than 1 MB of memory and minimal power.

- Edge AI deploys models directly on local devices, reducing reliance on cloud computation and enabling real-time inference.
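On microcontrollers, TinyML runtimes typically execute quantized models with integer arithmetic: int8 inputs and weights are multiplied, summed in a wider accumulator, and rescaled back to int8. The following is a simplified sketch of one fully connected layer under stated assumptions: symmetric quantization with zero-points of 0, and a bias pre-quantized at scale in_scale × w_scale. Real kernels replace the floating-point rescale with a fixed-point multiply and shift. The function names and example scales are hypothetical.

```python
def quantize(values, scale):
    """Map real values to int8 using a symmetric scale (zero-point 0)."""
    return [max(-128, min(127, round(v / scale))) for v in values]

def int8_dense(x_q, w_q, bias_q, in_scale, w_scale, out_scale):
    """Fully connected layer on int8 data.

    x_q: int8 input vector; w_q: one int8 weight row per output neuron;
    bias_q: integer biases already quantized with scale in_scale * w_scale.
    Returns int8 outputs whose real values are approximately out_q * out_scale.
    """
    multiplier = in_scale * w_scale / out_scale  # converts accumulator units to output units
    out = []
    for row, b in zip(w_q, bias_q):
        acc = b  # on a microcontroller this would be an int32 accumulator
        for xi, wi in zip(x_q, row):
            acc += xi * wi  # int8 x int8 products summed in the wider accumulator
        out.append(max(-128, min(127, round(acc * multiplier))))  # requantize and clamp to int8
    return out

if __name__ == "__main__":
    s_in, s_w, s_out = 0.5 / 127, 0.6 / 127, 0.4 / 127  # example scales covering the value ranges
    x = [0.5, -0.25]
    W = [[0.2, 0.4], [-0.6, 0.1]]
    b = [0.05, 0.0]
    y_q = int8_dense(quantize(x, s_in), [quantize(r, s_w) for r in W],
                     [round(v / (s_in * s_w)) for v in b], s_in, s_w, s_out)
    print("int8 output:", y_q, "approx. real:", [v * s_out for v in y_q])
```

Apart from the final rescale, everything here is integer multiply-accumulate, the kind of operation small cores without a floating-point unit handle cheaply.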

2.3 Federated and On-Device Learning

- Instead of sending raw data to the cloud, federated learning enables devices to train models locally and only share updates. This reduces bandwidth usage and enhances privacy.

- On-device incremental learning allows small updates to the AI system without retraining the entire model.
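Federated averaging (FedAvg) is one widely used way to combine locally trained models. The toy sketch below uses a one-parameter linear model y ≈ w·x so that it stays self-contained. Each simulated client runs a few steps of gradient descent on its own data, and the server averages the returned weights, weighted by sample count. It omits real communication, client sampling, and secure aggregation, and the data are synthetic.

```python
def local_train(w, data, lr=0.1, epochs=20):
    """Gradient descent on mean squared error for y ≈ w * x, using only this client's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fed_avg(global_w, client_datasets, rounds=10):
    """Each round, every client trains from the current global weight and the server
    takes a sample-size-weighted average. Raw (x, y) pairs never leave the clients."""
    for _ in range(rounds):
        results = [(local_train(global_w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in results)
        global_w = sum(w * n for w, n in results) / total
    return global_w

if __name__ == "__main__":
    # Synthetic clients whose data all follow y = 3x, each holding a different x range.
    clients = [
        [(x / 10, 3 * x / 10) for x in range(1, 6)],
        [(x / 10, 3 * x / 10) for x in range(5, 11)],
    ]
    print("learned w:", fed_avg(0.0, clients))
```

Only a single number per client crosses the network each round, which is the bandwidth and privacy advantage described above.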

2.4 Efficient Architectures

- Lightweight networks like MobileNet, SqueezeNet, and EfficientNet are specifically designed for low-resource environments.

- Transformer models are being re-engineered into compact versions such as DistilBERT and TinyBERT for NLP tasks.
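Much of MobileNet’s efficiency comes from depthwise separable convolutions, which split a standard convolution into a per-channel k × k filter followed by a 1 × 1 pointwise convolution. The parameter arithmetic can be checked directly. This sketch ignores biases and counts multiply-accumulates over h_out × w_out output positions; the layer sizes in the example are illustrative.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k convolution mapping c_in channels to c_out channels (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """A k x k depthwise filter per input channel plus a 1 x 1 pointwise convolution (bias ignored)."""
    return k * k * c_in + c_in * c_out

def macs(params, h_out, w_out):
    """Multiply-accumulates for a conv layer: each weight is applied once per output position."""
    return params * h_out * w_out

if __name__ == "__main__":
    k, c_in, c_out = 3, 256, 256
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(f"standard: {std:,} params, separable: {sep:,} params, ratio {std / sep:.1f}x")
    print(f"MACs on a 14x14 output: {macs(std, 14, 14):,} vs {macs(sep, 14, 14):,}")
```

For this 3 × 3 layer with 256 input and output channels, the separable version needs 67,840 weights instead of 589,824, about 8.7 times fewer; in general the ratio is 1/c_out + 1/k².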

2.5 Hardware-Aware Optimization

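- Some hardware is aimed specifically at AI at the edge, such as Google’s Edge TPU accelerator and NVIDIA’s Jetson Nano module. ARM’s Cortex-M microcontrollers are general-purpose cores, but they are a common TinyML target, and newer designs such as the Cortex-M55 add vector extensions that speed up ML workloads.

- These processors run inference within a far smaller power budget than server-class GPUs.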
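Hardware-aware optimization often ends with a simple selection step: given several compressed variants of a model, deploy the most accurate one that fits the target device’s storage, memory, and latency limits. The sketch below uses hypothetical variants and budgets. In a real workflow, the latency and peak-RAM figures would be measured on the device itself.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Variant:
    name: str
    flash_kb: int       # storage needed for weights and code
    ram_kb: int         # peak working memory during inference
    latency_ms: float   # inference time on the target device
    accuracy: float     # validation accuracy

@dataclass(frozen=True)
class Device:
    flash_kb: int
    ram_kb: int
    max_latency_ms: float

def pick_variant(variants: Sequence[Variant], device: Device) -> Optional[Variant]:
    """Return the most accurate variant that satisfies every device budget, or None if none fit."""
    feasible = [v for v in variants
                if v.flash_kb <= device.flash_kb
                and v.ram_kb <= device.ram_kb
                and v.latency_ms <= device.max_latency_ms]
    return max(feasible, key=lambda v: v.accuracy, default=None)

if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    variants = [
        Variant("fp32-full", 4000, 900, 850.0, 0.91),
        Variant("int8-full", 1000, 250, 210.0, 0.90),
        Variant("int8-pruned", 600, 180, 140.0, 0.88),
        Variant("int8-tiny", 150, 60, 35.0, 0.82),
    ]
    mcu = Device(flash_kb=1024, ram_kb=256, max_latency_ms=250.0)
    print(pick_variant(variants, mcu))
```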

3. Applications in Rural and IoT Settings

Low-resource AI has transformative potential in settings where high-end hardware is unavailable. Some notable applications include:

3.1 Agriculture in Rural Areas

- Pest Detection: TinyML-enabled cameras can identify crop diseases or pest infestations using small vision models running on solar-powered sensors.

- Smart Irrigation: AI embedded in soil sensors can predict optimal watering times without needing continuous internet access.

- Yield Forecasting: Farmers can use lightweight mobile apps powered by efficient AI to estimate crop yield and make informed planting decisions.
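For smart irrigation, one very simple on-device predictor fits a straight-line trend to recent soil-moisture readings and extrapolates it to a dryness threshold. This is a deliberately minimal stand-in, not how any particular product works. A deployed system might use a small learned model with weather inputs, and the function name, units, and threshold here are hypothetical.

```python
def hours_until_dry(readings, threshold):
    """Estimate hours until soil moisture drops to `threshold`.

    readings: (hour, moisture_percent) pairs, oldest first.
    Fits a least-squares line and extrapolates from the latest reading.
    Returns 0.0 if already at or below the threshold, or None if no falling trend can be estimated.
    """
    if not readings:
        return None
    _, m_last = readings[-1]
    if m_last <= threshold:
        return 0.0
    n = len(readings)
    mean_t = sum(t for t, _ in readings) / n
    mean_m = sum(m for _, m in readings) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in readings)
    if var_t == 0:  # fewer than two distinct timestamps
        return None
    slope = sum((t - mean_t) * (m - mean_m) for t, m in readings) / var_t  # percent per hour
    if slope >= 0:  # moisture steady or rising: no watering needed yet
        return None
    return (m_last - threshold) / -slope

if __name__ == "__main__":
    log = [(0, 40.0), (1, 38.0), (2, 36.0), (3, 34.0)]  # hypothetical hourly readings
    eta = hours_until_dry(log, threshold=30.0)
    print(f"Irrigate in about {eta:.1f} h" if eta is not None else "No irrigation needed yet")
```

Because it needs only a handful of stored readings and a few arithmetic operations, this kind of rule runs comfortably on a soil sensor with no internet connection.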

3.2 Healthcare in Remote Locations

- Diagnostics: Portable devices with low-resource AI can detect conditions like malaria or tuberculosis by analyzing microscopy images offline.

- Wearables: Inexpensive fitness bands and health monitors running AI locally can track vital signs and provide alerts in areas lacking hospitals.

- Telemedicine: AI-powered apps on low-end smartphones can guide basic diagnosis before connecting patients with doctors remotely.
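Wearables that raise alerts locally often rely on lightweight anomaly detection rather than a large model. The sketch below assumes a rolling z-score rule over the most recent heart-rate readings; the window size, thresholds, and class name are hypothetical, illustrative values.

```python
import math
from collections import deque

class HeartRateMonitor:
    """Flags heart-rate readings that deviate sharply from the recent baseline (rolling z-score).

    Only the last `window` readings are kept, so memory use stays small and fixed.
    """

    def __init__(self, window=30, z_threshold=3.0, min_std=1.0, warmup=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_std = min_std  # floor on the spread so a very steady baseline does not flag tiny changes
        self.warmup = warmup    # readings needed before alerts are possible

    def update(self, bpm):
        """Record a reading and return True if it is anomalous relative to the readings before it."""
        alert = False
        if len(self.history) >= self.warmup:
            mean = sum(self.history) / len(self.history)
            var = sum((x - mean) ** 2 for x in self.history) / len(self.history)
            std = max(math.sqrt(var), self.min_std)
            alert = abs(bpm - mean) / std > self.z_threshold
        self.history.append(bpm)
        return alert

if __name__ == "__main__":
    monitor = HeartRateMonitor()
    for bpm in [70, 72, 71, 69, 70, 71, 72, 70, 140]:  # hypothetical readings
        if monitor.update(bpm):
            print(f"Alert: {bpm} bpm is far outside the recent baseline")
```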

3.3 IoT Ecosystems

- Environmental Monitoring: Sensors running TinyML can detect forest fires, air quality, or water pollution, transmitting only critical alerts to conserve bandwidth.

- Smart Homes in Low-Power Areas: Energy-efficient AI can run on simple controllers to optimize power usage and security in rural households.

- Predictive Maintenance: Small AI models on industrial IoT devices can predict equipment failures in rural factories or farms without cloud dependency.
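Sending only critical alerts can be as simple as summarizing each window of raw samples on the device (here by its root-mean-square level, a common vibration feature) and transmitting only when the state changes. The sketch uses hypothetical thresholds and applies hysteresis so that a signal hovering near a single threshold does not produce a burst of messages. The output of a trained model could feed the same gate in place of the RMS level.

```python
import math

def rms(window):
    """Root-mean-square level of a window of raw samples (e.g., vibration or sound)."""
    return math.sqrt(sum(s * s for s in window) / len(window))

class AlertFilter:
    """On-device gate that decides whether anything needs to be transmitted.

    Hysteresis: an alert is raised when the level rises above `on_level` and
    cleared only once it falls below the lower `off_level`.
    """

    def __init__(self, on_level, off_level):
        if off_level >= on_level:
            raise ValueError("off_level must be below on_level")
        self.on_level = on_level
        self.off_level = off_level
        self.alarm = False

    def process(self, level):
        """Return ("ALERT", level) or ("CLEAR", level) on a state change, else None (send nothing)."""
        if not self.alarm and level > self.on_level:
            self.alarm = True
            return ("ALERT", level)
        if self.alarm and level < self.off_level:
            self.alarm = False
            return ("CLEAR", level)
        return None

if __name__ == "__main__":
    gate = AlertFilter(on_level=2.0, off_level=1.5)  # hypothetical thresholds
    windows = [[1.0, -1.0], [1.9, -1.9], [2.1, -2.1], [1.8, -1.8], [2.2, -2.2], [1.4, -1.4]]
    for w in windows:
        msg = gate.process(rms(w))
        if msg is not None:
            print("transmit:", msg)  # only state changes use the radio
```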

3.4 Education and Community Development

- AI-powered offline educational apps on basic smartphones can provide language translation, tutoring, and interactive learning tools without requiring internet.

- Community radios or smart kiosks in villages can run localized AI models for weather updates, crop prices, or government services.

4. Case Studies and Examples

- Google’s TensorFlow Lite for Microcontrollers: This framework targets microcontrollers with only kilobytes of memory; its core runtime fits in about 16 KB on an Arm Cortex-M3, which makes it practical for rural IoT deployments.

- FarmBeats (Microsoft): Uses edge computing and AI to provide affordable digital agriculture solutions in rural areas with low connectivity.

- Fitbit and Xiaomi Wearables: These devices already employ lightweight AI to provide health insights without requiring continuous cloud access.

- TinyML for Wildlife Protection: Acoustic sensors with embedded AI identify poaching activities or endangered animal calls in remote forests.

5. The Future of Low-Resource AI

As AI continues to evolve, several trends will further enhance its applicability in weak-device environments:

- Automated Model Compression: Automated tools, such as compression pipelines and hardware-aware neural architecture search, will increasingly optimize models for specific hardware without manual tuning.

- Neuromorphic Computing: Brain-inspired chips will allow ultra-low-power AI operations suitable for continuous sensing in rural and IoT applications.

- Hybrid Edge-Cloud AI: Systems will dynamically balance work between edge processing and cloud resources depending on whether a network connection is available.

- Open-Source Ecosystems: Widespread sharing of optimized lightweight AI architectures will accelerate adoption in resource-poor settings.
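The hybrid edge-cloud idea can be sketched as an edge-first decision rule: always run the on-device model, consult the cloud only when the local answer is uncertain and a connection exists, and queue uncertain cases while offline. The function, the confidence threshold, and the stand-in models are hypothetical. In practice the cloud call would be a network request with timeouts and retries.

```python
from typing import Callable, List, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)

def hybrid_predict(sample,
                   local_model: Callable[[object], Prediction],
                   cloud_model: Optional[Callable[[object], Prediction]],
                   network_up: bool,
                   min_confidence: float = 0.8,
                   pending: Optional[List[object]] = None) -> Prediction:
    """Edge-first inference with optional cloud fallback.

    The on-device model always runs. The cloud is consulted only when the local
    result is uncertain and a connection is available; uncertain samples seen
    while offline are queued in `pending` so they can be reviewed later.
    """
    label, confidence = local_model(sample)
    if confidence >= min_confidence:
        return label, confidence
    if network_up and cloud_model is not None:
        return cloud_model(sample)
    if pending is not None:
        pending.append(sample)
    return label, confidence  # best available answer while offline

if __name__ == "__main__":
    # Stand-in models for illustration; real ones would be a compressed on-device
    # classifier and a call to a larger remote model.
    def local(s):
        return ("pest", 0.95) if s == "clear_photo" else ("healthy", 0.55)

    def cloud(s):
        return ("pest", 0.99)

    queue = []
    print(hybrid_predict("clear_photo", local, cloud, network_up=False, pending=queue))
    print(hybrid_predict("blurry_photo", local, cloud, network_up=False, pending=queue))
    print(hybrid_predict("blurry_photo", local, cloud, network_up=True, pending=queue))
    print("queued for later:", queue)
```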

Ultimately, low-resource AI is not just a technical challenge—it is a bridge to democratizing AI access across the world. By enabling powerful models to work on weak devices, we can ensure that AI benefits reach even the most remote and underserved populations.

Conclusion

Low-resource AI focuses on making advanced machine learning models run efficiently in constrained environments such as rural communities, IoT sensors, and low-end devices. Through techniques like pruning, quantization, knowledge distillation, TinyML, and edge AI, powerful models can be adapted to function on hardware with limited memory, computation, and energy.

The applications are vast: from crop disease detection in rural farms, to portable diagnostic tools in healthcare, to environmental monitoring through IoT devices. Case studies like TensorFlow Lite, FarmBeats, and TinyML in wildlife conservation highlight the practicality of these approaches.

Looking forward, innovations in neuromorphic computing, hybrid edge-cloud systems, and open-source AI ecosystems will further advance the reach of low-resource AI. Ultimately, making AI accessible on weak devices is not only a technological necessity but also a social imperative—ensuring that no community is left behind in the AI revolution.

Artificial Intelligence is rapidly reshaping industries and societies, but its potential faces a significant barrier in low-resource environments such as rural communities, low-end smartphones, IoT sensors, and battery-powered devices, where computational power, memory, bandwidth, and energy are severely limited. Modern AI models often have billions of parameters and rely on high-end GPUs or TPUs, which raises the central question of how to make powerful models work on weak devices. The field of low-resource AI addresses this by compressing, optimizing, and re-architecting machine learning systems so they can perform meaningful tasks without extensive computational or energy resources. This matters because rural farmers, healthcare workers in remote villages, and environmental scientists using IoT devices in forests cannot rely on constant cloud connectivity or expensive hardware.

Several strategies are gaining traction. Pruning removes redundant weights, quantization reduces floating-point precision to smaller integer formats, and knowledge distillation trains a compact student model to mimic a large teacher model. TinyML focuses on deploying ML on microcontrollers with kilobytes of memory, while Edge AI runs models directly on devices rather than in the cloud, making them resilient to connectivity gaps and able to deliver real-time insights. Federated learning trains models locally on devices and shares only updates with a central server, which reduces data transfer, bandwidth use, and privacy risks. Researchers are also designing efficient architectures such as MobileNet, SqueezeNet, and EfficientNet, along with lightweight transformers such as DistilBERT and TinyBERT for natural language processing, while hardware such as ARM Cortex-M processors, Google’s Edge TPU, and NVIDIA Jetson Nano makes it possible to run AI workloads in constrained environments.

These optimizations open the door to many applications in rural and IoT contexts. In agriculture, cameras running TinyML on solar-powered devices can detect crop diseases or pest infestations, soil sensors with embedded AI can optimize irrigation schedules without internet access, and mobile apps with compressed models can help farmers forecast yield. In healthcare, portable diagnostic kits can analyze images to detect malaria or tuberculosis offline, inexpensive wearables can track vital signs and alert health workers where hospitals are scarce, and AI-based apps on low-end smartphones can offer a preliminary diagnosis before connecting patients to a doctor. In environmental monitoring, IoT sensors can identify air pollution, forest fires, or water contamination through on-device inference and send alerts only when necessary to conserve bandwidth. In education, offline tutoring apps and language translators can bring learning resources to rural children in low-bandwidth areas, and community kiosks or smart radios can use lightweight models to broadcast local weather, crop prices, or government schemes. In industry, predictive maintenance on low-cost IoT devices can detect early signs of equipment failure in remote factories or farms, making AI part of the backbone of sustainability rather than a luxury.

These ideas are already demonstrated in real-world projects: Google’s TensorFlow Lite for Microcontrollers, whose core runtime fits in about 16 KB on an Arm Cortex-M3; Microsoft’s FarmBeats, which uses edge computing for digital agriculture in low-connectivity rural areas; Fitbit and Xiaomi wearables, which use lightweight AI for health insights without cloud dependency; and wildlife conservation projects that deploy acoustic sensors with embedded TinyML to detect poaching or the calls of endangered species in remote forests.

The field continues to evolve toward more efficient and scalable approaches: automated compression pipelines that reduce model complexity to match device specifications, neuromorphic computing that borrows the brain’s efficiency for ultra-low-power continuous sensing, hybrid edge-cloud AI that switches between local and remote computation depending on network availability, and open-source ecosystems that share optimized models for diverse hardware. Together these point toward a smaller digital divide, in which AI is no longer the privilege of high-tech hubs but is available to every village, farm, school, and small enterprise. The social and economic implications are profound: access to AI-enabled healthcare, education, and agriculture can uplift communities, reduce inequality, support sustainability goals, and empower innovators in low-resource settings to build solutions for their own challenges. Low-resource AI is therefore not just a technical field but a movement toward inclusive technology that brings powerful intelligence to weak devices and ensures that the benefits of AI are shared widely rather than concentrated in a few regions with abundant compute.

In short, large-scale models that depend on high-performance GPUs or cloud infrastructure are hard to deploy where computation, memory, storage, energy, and connectivity are scarce, and low-resource AI closes this gap by designing, optimizing, and compressing models so they work on weak devices without sacrificing too much accuracy. Pruning, quantization to lower-bit integers, and knowledge distillation reduce model size, memory footprint, and computation while retaining essential performance. TinyML brings machine learning to microcontrollers with kilobytes of RAM, and Edge AI moves computation from the cloud to the device, which reduces dependence on connectivity, lowers latency, and improves privacy because sensitive data never has to leave the device. Federated learning lets many devices train a shared global model by exchanging model updates rather than raw data. Compact architectures such as MobileNet, SqueezeNet, EfficientNet, DistilBERT, and TinyBERT, together with edge hardware such as ARM Cortex-M processors, the Google Edge TPU, and NVIDIA Jetson Nano, make AI feasible in scenarios previously considered impractical.

The same building blocks serve every sector discussed above: crop-disease detection, pest monitoring, irrigation scheduling, and yield forecasting on farms without reliable internet; offline malaria and tuberculosis screening, vital-sign monitoring, and preliminary diagnostic support where doctors are scarce; real-time detection of pollution, forest fires, and water contamination with only essential alerts transmitted; offline translation and tutoring for students, plus kiosks or community radios that summarize crop, weather, and government information; and predictive maintenance that reduces downtime and costs in remote farms and factories. Projects such as TensorFlow Lite for Microcontrollers, Microsoft FarmBeats, on-device analytics in Fitbit and Xiaomi wearables, and TinyML acoustic sensors for anti-poaching work show that these approaches work in practice.

Looking ahead, automated optimization pipelines, neuromorphic hardware, hybrid edge-cloud systems, and open-source frameworks will continue to extend this reach. Enabling AI on low-resource devices can transform healthcare delivery, agricultural productivity, environmental conservation, educational outreach, and industrial efficiency in rural and remote regions, reducing inequality, fostering local entrepreneurship, and addressing energy scarcity, connectivity limits, and affordability. The future of AI is therefore not only about building larger and more complex models but about making models work for everyone, everywhere, on every device, a paradigm shift in how we think about the deployment, accessibility, and societal impact of artificial intelligence.

Q&A Section

Q1 :- What is low-resource AI?

Ans:- Low-resource AI refers to adapting machine learning models to run efficiently on devices with limited memory, computation, energy, or internet connectivity, such as rural phones, IoT sensors, and microcontrollers.

Q2 :- How is low-resource AI different from traditional AI?

Ans:- Traditional AI often requires powerful GPUs, cloud servers, and large datasets. Low-resource AI, on the other hand, uses compression, quantization, and lightweight architectures to run models on weak devices with minimal resources.

Q3 :- What are the main techniques used in low-resource AI?

Ans:- Techniques include model pruning, quantization, knowledge distillation, TinyML, edge AI deployment, federated learning, and hardware-aware optimization.

Q4 :- Why is low-resource AI important for rural areas?

Ans:- Rural areas often lack high-end devices, stable internet, and reliable electricity. Low-resource AI ensures that critical applications like healthcare, education, and agriculture can still benefit from AI innovations.

Q5 :- Can IoT devices effectively use AI in low-resource settings?

Ans:- Yes, IoT devices can run optimized AI models locally to perform tasks like environmental monitoring, predictive maintenance, and smart irrigation without needing constant cloud access.


© 2025 Copyrights by rTechnology. All Rights Reserved.