Micro LLMs: Small but Mighty AI Models

Micro LLMs: Small but Mighty AI Models – Discover how compact, efficient language models are transforming artificial intelligence by delivering powerful natural language understanding, reasoning, and generation while reducing costs, latency, and energy consumption. Explore their applications across smartphones, edge devices, healthcare, education, and customer support, and understand why these small models are reshaping the future of accessible, practical, and sustainable AI.

✨ Raghav Jain

Introduction

Artificial intelligence has rapidly evolved over the past decade, with large language models (LLMs) like GPT-4, Claude, and Gemini demonstrating remarkable capabilities in understanding, generating, and reasoning with human language. However, the tremendous scale of these models often comes with significant drawbacks: high computational requirements, energy consumption, latency issues, and prohibitive costs for both training and deployment. As industries seek more efficient AI solutions, a new trend has begun to capture attention—Micro LLMs.

Micro LLMs are compact versions of large language models, optimized to deliver high performance while drastically reducing computational overhead. Despite being smaller in size, these models are designed to be efficient, versatile, and powerful enough for real-world applications. They represent a paradigm shift in the democratization of AI, enabling startups, researchers, and even individual developers to harness advanced language capabilities without needing massive cloud infrastructure.

In this article, we will explore what Micro LLMs are, why they matter, how they work, their advantages and challenges, real-world use cases, and their future potential.

What Are Micro LLMs?

Micro LLMs are lightweight language models that retain the essential capabilities of large-scale LLMs but are optimized for efficiency. Instead of hundreds of billions of parameters, these models typically range from about 100 million to several billion parameters, making them far smaller than frontier models such as GPT-4, whose parameter count OpenAI has not disclosed but which is widely assumed to be much larger.

The goal of Micro LLMs is not to outperform massive models in every task but to strike a balance between performance, resource efficiency, and accessibility. They are designed to run on devices with limited hardware—such as laptops, smartphones, or edge servers—while still providing robust natural language understanding and generation.
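To make the hardware argument concrete, the memory needed just to hold a model's weights is roughly its parameter count times the bytes per weight. The sketch below is a back-of-the-envelope estimate under that assumption only: it ignores activations, the KV cache, and runtime overhead, so real memory use is higher. The function name `weight_memory_gb` is hypothetical, and the sizes are nominal ("7B") rather than exact counts for any specific checkpoint.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights in decimal gigabytes (1 GB = 1e9 bytes).

    Counts weights only: activations, the KV cache, and framework overhead are ignored.
    """
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


if __name__ == "__main__":
    # Nominal model sizes, not exact parameter counts of any particular release.
    for name, params in [("1.1B model", 1.1e9), ("2.7B model", 2.7e9), ("7B model", 7e9)]:
        sizes = ", ".join(
            f"{bits}-bit: {weight_memory_gb(params, bits):.2f} GB" for bits in (32, 16, 8, 4)
        )
        print(f"{name} -> {sizes}")
```

By this estimate, a 7B model's weights shrink from about 28 GB at 32-bit precision to about 3.5 GB at 4-bit, which is why quantized models of that size can fit in the memory of many laptops.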

Examples include:

- Llama 2 7B (Meta) – A compact, openly available LLM for research and edge applications.

- Alpaca and Vicuna – Small instruction-following models fine-tuned from Meta's LLaMA family.

- Mistral-7B – Highly efficient and competitive despite its relatively small size.

- Phi-2 (Microsoft) – A 2.7-billion-parameter model that is tiny but strong at reasoning.

- TinyLlama – A 1.1-billion-parameter model optimized for edge and personal devices.

Why Micro LLMs Matter

Large LLMs have proven their worth, but they face limitations that Micro LLMs address directly:

- Accessibility – Training and serving large models requires clusters of specialized hardware (e.g., GPUs or TPUs) that can cost millions of dollars. Micro LLMs lower the entry barrier, allowing smaller organizations to benefit from AI.

- On-Device Processing – Micro LLMs can run directly on smartphones, tablets, and some IoT devices. This enables offline use, better privacy, and faster responses without depending on cloud connectivity.

- Cost Efficiency – Operating large models incurs heavy compute and energy costs. Micro LLMs cut these costs significantly, making AI deployment more sustainable.

- Latency Reduction – Because they process requests locally or on smaller servers, Micro LLMs can respond faster, which is crucial for interactive applications like chatbots and assistants.

- Environmental Impact – Training and running large LLMs consumes substantial energy. Micro LLMs reduce that footprint, in line with global sustainability goals.

How Micro LLMs Work

1. Model Compression Techniques

- Quantization – Reducing the precision of model weights (e.g., from 32-bit or 16-bit floating point to 8-bit or 4-bit integers), usually with only a small loss of accuracy.

- Pruning – Removing redundant neurons or connections without significantly affecting performance.

- Knowledge Distillation – Training a small “student” model to mimic a large “teacher” model.
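The three compression techniques above can be illustrated on a toy list of weights. This is a minimal, pure-Python sketch under simplifying assumptions: symmetric per-tensor int8 quantization, unstructured magnitude pruning, and a temperature-softened KL-divergence distillation loss (the formulation popularized by Hinton et al., 2015). Real toolchains quantize per channel or per group, often prune structurally, and work on tensors; all function names here are illustrative.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; temperature > 1 flattens the distribution."""
    m = max(logits)
    exps = [math.exp((x - m) / temperature) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    w = [0.82, -0.05, 0.31, -1.27, 0.002, 0.6]

    q, scale = quantize_int8(w)
    print("int8 codes:", q, "scale:", round(scale, 5))
    print("max abs error:", max(abs(a - b) for a, b in zip(w, dequantize(q, scale))))

    print("50% pruned:", magnitude_prune(w, 0.5))

    teacher = [2.0, 0.5, -1.0]
    print("loss, student = teacher:", distillation_loss(teacher, teacher))
    print("loss, student differs:", distillation_loss([0.1, 1.5, -0.2], teacher))
```

Quantization error per weight is bounded by half the scale, pruning keeps only the largest-magnitude weights, and the distillation loss is zero exactly when the student's softened outputs match the teacher's.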

2. Efficient Architectures

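- Models like Mistral-7B use architectural refinements such as grouped-query attention and sliding-window attention to speed up inference and reduce memory use without adding parameters.

- Sparse attention mechanisms let each token attend to only a subset of other tokens, avoiding the cost of computing every token-to-token interaction.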
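Sliding-window attention is one simple form of sparse attention: each token attends only to itself and a fixed number of preceding tokens rather than to the whole sequence. The sketch below builds such a causal mask and counts how many query-key scores must be computed. The window size is a toy value (Mistral-7B's published window is much larger, 4,096 tokens), and the function names are illustrative.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j.

    Causal (j <= i) and limited to the `window` most recent positions, including i itself.
    """
    return [[j <= i and i - j < window for j in range(seq_len)] for i in range(seq_len)]


def scored_pairs(mask):
    """Number of query-key scores the attention layer actually has to compute."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    n = 8
    full = sliding_window_mask(n, window=n)  # window >= seq_len is ordinary causal attention
    local = sliding_window_mask(n, window=3)
    print("full causal pairs:", scored_pairs(full))  # 36, i.e. n*(n+1)/2
    print("window=3 pairs:", scored_pairs(local))  # 21
    for row in local:
        print("".join("x" if allowed else "." for allowed in row))
```

For long sequences the full causal count grows quadratically (n(n+1)/2), while the windowed count grows roughly linearly (about n times the window), which is where the savings come from.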

3. Fine-Tuning and Specialization

- Instead of being general-purpose, Micro LLMs are often fine-tuned for specific domains such as healthcare, customer service, or finance, which improves accuracy within that domain without increasing the parameter count.
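Domain fine-tuning usually starts with turning in-house examples into supervised training records. The sketch below converts question-answer pairs into records with the instruction/input/output fields popularized by the Stanford Alpaca dataset and writes them as JSON Lines. The example pairs, file name, and helper names are hypothetical, and the exact format you need depends on the fine-tuning toolkit you use.

```python
import json


def to_instruction_records(pairs, system_instruction):
    """Convert (question, answer) pairs into instruction/input/output records."""
    return [
        {"instruction": system_instruction, "input": question.strip(), "output": answer.strip()}
        for question, answer in pairs
    ]


def write_jsonl(records, path):
    """Write one JSON object per line (JSON Lines), a format many training scripts accept."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    # Hypothetical customer-support examples; a real dataset would hold many reviewed pairs.
    pairs = [
        ("How do I reset my router?", "Hold the reset button for 10 seconds, then wait for the lights to stabilize."),
        ("Can I change my billing date?", "Yes. Go to Account > Billing and choose a new date."),
    ]
    records = to_instruction_records(pairs, "Answer the customer's question about our service.")
    write_jsonl(records, "support_finetune.jsonl")
    print(f"wrote {len(records)} records")
```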

Advantages of Micro LLMs

- Lightweight Deployment – Ideal for devices with limited memory and compute power.

- Cost-Effective Scaling – Companies can deploy multiple instances across servers or devices without massive investment.

- Data Privacy – On-device processing means sensitive information doesn’t need to leave user devices.

- Energy Efficiency – Reduced computational needs lower electricity usage.

- Adaptability – Easier to customize and fine-tune for specialized tasks.

Challenges and Limitations

While Micro LLMs offer many benefits, they also face challenges:

- Performance Gap – They cannot yet match the reasoning depth and creativity of the largest frontier models.

- Bias and Safety – Smaller models can inherit biases from their training data, and they often receive less extensive safety alignment than larger models.

- Limited Context Windows – They may struggle with processing large documents or extended conversations.

- Domain Constraints – Specialized Micro LLMs may perform poorly outside their trained field.

- Scalability Issues – For extremely complex tasks, Micro LLMs may require cloud augmentation or hybrid solutions.
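The hybrid approach mentioned under Scalability Issues can be sketched as a router that answers locally when it can and escalates otherwise. Everything here is an assumption for illustration: the two model callables are stand-ins rather than real APIs, the token estimate is a crude words-based heuristic, and the routing rule (prompt length versus the local context window, plus a local confidence score) is one simple policy among many.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class HybridRouter:
    """Route each prompt to a small local model or a larger cloud model (illustrative only)."""

    local_model: Callable[[str], Tuple[str, float]]  # returns (answer, confidence in [0, 1])
    cloud_model: Callable[[str], str]
    local_context_tokens: int = 2048
    min_confidence: float = 0.7

    def estimate_tokens(self, prompt: str) -> int:
        # Crude assumption: about 4/3 tokens per whitespace-separated word.
        return int(len(prompt.split()) * 4 / 3) + 1

    def answer(self, prompt: str) -> Tuple[str, str]:
        if self.estimate_tokens(prompt) > self.local_context_tokens:
            return "cloud", self.cloud_model(prompt)  # too long for the local context window
        text, confidence = self.local_model(prompt)
        if confidence < self.min_confidence:
            return "cloud", self.cloud_model(prompt)  # local model unsure, so escalate
        return "local", text


if __name__ == "__main__":
    # Stand-in models so the sketch runs without any real inference backend.
    def fake_local(prompt):
        return ("local answer", 0.9 if "weather" in prompt else 0.4)

    def fake_cloud(prompt):
        return "cloud answer"

    router = HybridRouter(fake_local, fake_cloud, local_context_tokens=50)
    print(router.answer("What is the weather like today?"))
    print(router.answer("Draft a detailed legal analysis of this contract."))
    print(router.answer("weather " * 100))
```

Keeping the routing policy in one small class makes it easy to swap in a better signal later, such as a trained classifier, without touching either model.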

Real-World Applications of Micro LLMs

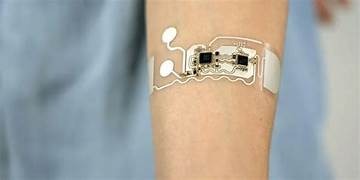

- Smartphones and Wearables – With Micro LLMs, voice assistants, predictive text, and real-time translation can run with lower latency and without a network connection.

- Healthcare – Small models can be deployed securely in hospitals for clinical decision support without sending sensitive patient data to external servers.

- Customer Support – Companies can run domain-specific chatbots locally, cutting cloud costs and shortening response times.

- Education – Students can access personalized AI tutors on their laptops or tablets without requiring internet connectivity.

- Edge Computing and IoT – Smart home devices, industrial sensors, and autonomous machines benefit from on-device natural language understanding.

- Developing Regions – Areas with limited internet access can still benefit from AI-powered services, since Micro LLMs can work offline.

The Future of Micro LLMs

The rise of Micro LLMs is part of a broader AI efficiency movement. As hardware continues to advance (with more powerful CPUs, GPUs, and NPUs in consumer devices), Micro LLMs will grow increasingly capable. Some future trends include:

- Hybrid Models – Combining micro LLMs for edge tasks with cloud-based large models for complex reasoning.

- Specialized Micro LLMs – Pre-trained for industries like law, medicine, or finance.

- Federated Learning – Micro LLMs on devices learning collaboratively while preserving user privacy.

- AI Everywhere – From smart appliances to AR/VR systems, Micro LLMs will enable natural interactions in daily life.
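Federated learning is commonly implemented with federated averaging (FedAvg, McMahan et al., 2017): each device trains a copy of the model on its own data, and a server averages the resulting weights, weighted by how many examples each device used, so raw data never leaves the device. The sketch below shows only that aggregation step on plain lists; the client weight vectors are made-up numbers, and production systems add secure aggregation, update compression, and many training rounds.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: example-count-weighted mean of each parameter across clients."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client and at least one client")
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    averaged = [0.0] * num_params
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != num_params:
            raise ValueError("all clients must share the same parameter layout")
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


if __name__ == "__main__":
    # Made-up weight vectors after each device's local training round.
    phone_a = [0.10, 0.50, -0.20]  # trained on 100 local examples
    phone_b = [0.30, 0.40, -0.10]  # trained on 300 local examples
    new_global = federated_average([phone_a, phone_b], [100, 300])
    print([round(w, 3) for w in new_global])
```

Weighting by example count means a device with more local data pulls the shared model further toward its update, which matches averaging over all examples as if they had been pooled.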

Micro LLMs have emerged as one of the most notable developments in artificial intelligence, marking a shift away from ever-larger, resource-heavy language models toward smaller, more efficient, and more practical alternatives that balance performance with accessibility. Large models such as GPT-4 and Gemini dominate headlines, but they come with heavy computational requirements, high costs, noticeable latency, and substantial energy consumption, which make them impractical for many organizations, developers, and end users. Micro LLMs aim to retain much of the linguistic fluency, reasoning ability, and versatility of their larger counterparts with far fewer parameters, typically from about 100 million to several billion, so they can run smoothly on laptops, smartphones, and, at the smallest sizes, embedded IoT devices.

They reach this efficiency through quantization (storing weights at 8-bit or 4-bit precision instead of 32-bit or 16-bit floating point), pruning (removing redundant connections with little loss in quality), knowledge distillation (training a small student model to reproduce the behavior of a large teacher model), and efficient architectures such as those in Mistral-7B and Microsoft's Phi-2, which perform well beyond what their size would suggest. The result is a model that is not only smaller but also far easier to deploy. Their real importance is democratization: they lower the cost and infrastructure barriers that once limited advanced AI to companies with massive GPU clusters.

A startup can deploy a chatbot fine-tuned from a 1 to 3 billion parameter model without breaking the bank; a hospital can run a privacy-preserving model on its own servers to analyze medical notes securely; and users in remote areas can use translation, tutoring, or digital assistance offline on a mobile device. Micro LLMs also help with latency and energy use: processing requests locally avoids network round trips, and each request needs far less compute, which supports global sustainability goals. Their applications are broad: offline voice assistance and predictive typing on smartphones and wearables, instant domain-specific customer support without per-request cloud costs, personal AI tutors on student tablets, natural interactions in smart homes, factories, and autonomous systems, and AI tools for developing regions with limited connectivity.

Micro LLMs also face challenges that cannot be ignored. They trail the largest models in deep reasoning, long-context understanding, and open-ended creativity; they can inherit biases from their training data without the extensive safety layers that larger models typically receive; their smaller context windows limit how much of a long document or extended conversation they can handle; and models fine-tuned narrowly for one domain may perform poorly outside it. Ongoing research is steadily addressing these gaps.

The outlook for Micro LLMs is promising. Hybrid systems are likely to let micro models handle local, everyday tasks while complex queries are offloaded to cloud-based large models, giving users the best of both. Domain-focused Micro LLMs could become standard tools in law, medicine, and finance, offering specialist assistance in a lightweight form. Federated learning can let models improve across many devices while protecting user privacy. And as consumer hardware adds dedicated AI chips and neural processing units (NPUs), Micro LLMs are likely to be embedded in more of the devices we own, from augmented reality glasses to household appliances, making AI ambient and seamless. This reflects a broader efficiency movement in AI, where "bigger is not always better" and "smarter and leaner" becomes the winning formula. Micro LLMs are not just scaled-down versions of giant models but a new class of AI defined by efficiency, accessibility, and adaptability. By bridging the gap between cutting-edge research and real-world usability, they show that AI does not need to be massive to be mighty, and they can make it a practical, sustainable, and ubiquitous tool for everyone rather than a privilege of the few.

Micro LLMs (micro large language models) represent a notable evolution in how language models can be deployed efficiently in real-world settings. Giant LLMs like GPT-4, Claude, or Google's Gemini are generally understood to have hundreds of billions of parameters or more (their vendors mostly do not disclose exact figures) and require data-center-scale hardware to operate well, which puts them out of reach for many businesses, researchers, and individual developers who cannot afford such infrastructure. Micro LLMs, usually ranging from hundreds of millions to a few billion parameters, offer a compact alternative that still handles natural language understanding, reasoning, and generation at a level that satisfies most practical applications.

This is achieved through a combination of optimization and compression techniques:

- Quantization – Weights are stored in lower-precision formats such as 8-bit or 4-bit instead of 32-bit floating point, significantly reducing memory footprint and compute with little loss of accuracy.

- Pruning – Neurons or connections that contribute little to overall performance are systematically removed.

- Knowledge distillation – A smaller "student" model is trained to replicate the behavior of a larger "teacher" model, capturing essential knowledge while remaining lightweight.

- Architectural innovations – More efficient attention mechanisms and transformer designs let these models respond quickly with low latency.

Thanks to these optimizations, Micro LLMs can be deployed on devices ranging from standard laptops and desktops to smartphones, tablets, and embedded edge devices, enabling offline and real-time AI applications. Because sensitive user data no longer needs to be sent to cloud servers, this also improves privacy, data security, and responsiveness.

This democratization brings sophisticated language capabilities to smaller enterprises, startups, educational institutions, and individual users, who can now access, customize, and deploy AI tools without prohibitive costs across industries such as healthcare, finance, education, customer service, and industrial IoT. Hospitals can run Micro LLMs on local servers to analyze patient records or assist with diagnostics while keeping data in-house; educational apps can provide personalized tutoring and homework help without constant internet access; customer service departments can run responsive chatbots tailored to their domain; and smart home devices or industrial sensors can use compact models to understand and respond to voice commands or environmental inputs, all while consuming far less energy than large-scale LLMs, an important consideration for climate-conscious technology development.

Micro LLMs do not match the deep reasoning or creative generation of the largest models, but their performance is still impressive in domain-specific applications and general-purpose conversational AI, especially when they are fine-tuned on specialized datasets. Examples such as Meta's Llama 2 7B, fine-tuned variants like Alpaca and Vicuna, Microsoft's Phi-2, Mistral-7B, and TinyLlama show that small models can be surprisingly capable, often beating much larger models on speed and efficiency while remaining accurate enough for most practical purposes.

The advantages go beyond hardware efficiency and cost savings: lower latency from on-device processing, easier adaptation to specific tasks, usefulness in regions with poor or intermittent internet connectivity, and reduced electricity consumption. Challenges remain, including handling long documents or extended conversations within limited context windows, mitigating biases inherited from training data, and keeping domain-specific models from failing badly on tasks outside their training scope. Researchers are addressing these limits through hybrid approaches that pair on-device Micro LLMs with cloud-based larger models for complex reasoning, and through federated learning, which lets devices collaboratively improve models while keeping data private.

Looking ahead, Micro LLMs are likely to become increasingly specialized for industries such as law, medicine, and finance, to be integrated into consumer devices from augmented reality glasses to smart appliances, and to see wide use in education, healthcare, customer support, and industrial automation, making AI an everyday companion. They show that bigger is not always better, and that smarter, leaner, highly optimized models can bring accessible and sustainable AI to a wider audience. In short, Micro LLMs embody the idea of being small but mighty, letting individuals and organizations use language models in ways that are cost-effective, environmentally friendly, private, and practical. They may not yet replace the largest models for every task, but their growing sophistication, efficiency, and adaptability suggest that the future of AI will be defined not only by scale but by the ability to deliver maximum utility with minimal resources, changing how we interact with, deploy, and benefit from artificial intelligence in everyday life.

Conclusion

Micro LLMs are not just a scaled-down version of large models—they are a new class of AI designed for efficiency, privacy, and widespread adoption. Their potential lies in bridging the gap between cutting-edge AI research and real-world usability. As the technology matures, we may find that the next AI revolution doesn’t come from trillion-parameter behemoths, but from compact, specialized, and accessible Micro LLMs running in the palm of our hands.

Q&A Section

Q1: What are Micro LLMs?

Ans: Micro LLMs are small, efficient versions of large language models, typically ranging from hundreds of millions to a few billion parameters, designed to run on devices with limited hardware while still delivering strong language capabilities.

Q2: Why are Micro LLMs important?

Ans: They make AI more accessible, cost-effective, and energy-efficient, allowing businesses, developers, and individuals to use advanced AI without requiring massive cloud infrastructure.

Q3: How do Micro LLMs achieve efficiency?

Ans: Through techniques like quantization, pruning, knowledge distillation, and optimized architectures that reduce size while maintaining performance.

Q4: What are some applications of Micro LLMs?

Ans: They are used in smartphones, customer service chatbots, healthcare decision support, education tools, IoT devices, and regions with limited internet access.

Q5: What are the challenges of Micro LLMs?

Ans: They face limitations in reasoning power, context handling, domain generalization, and bias mitigation compared to large LLMs.


© 2025 rTechnology. All rights reserved.