Deepfake Threats in 2025: How to Protect Yourself Online

"In 2025, deepfake technology has advanced to the point where fake videos, voices, and images are almost indistinguishable from reality, posing serious threats to privacy, security, and truth itself. This article explores the growing risks, real-world cases, and practical steps you can take to detect and protect yourself from AI-driven deception."

Raghav Jain

Introduction

In 2025, deepfake technology is no longer just a fascinating experiment in artificial intelligence—it’s a full-blown societal challenge. Originally developed to create realistic face-swaps for entertainment and film, deepfakes have evolved into a sophisticated tool capable of mimicking voices, replicating facial expressions, and even generating entire videos indistinguishable from real ones.

While deepfakes can be harmless in creative fields like movie production, gaming, or marketing, they also present significant threats. Cybercriminals, political propagandists, and scammers are exploiting the technology to deceive, manipulate, and steal. In this digital landscape, protecting yourself online means staying informed, recognizing the warning signs, and adopting proactive security habits.

What Are Deepfakes?

A deepfake is a synthetic piece of media (video, audio, or image) created with deep learning models such as generative adversarial networks (GANs). These models are trained on large amounts of real footage, photos, or recordings of a person to produce convincing imitations of that person’s appearance or voice.
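
For readers who want the underlying idea, the standard GAN training objective can be written as a two-player game. This is the textbook formulation, not a description of any particular deepfake app: a generator G turns random noise z into fake samples, while a discriminator D tries to tell real samples x from generated ones.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

As training proceeds, D gets better at spotting fakes and G gets better at fooling it, which is one reason the output can become so convincing.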

Key characteristics:

- Hyper-realistic visuals: Facial expressions, lip movements, and gestures closely match those of the real person.

- Synthetic voice cloning: Speech patterns and tone mimic the target’s natural speaking style.

- Context manipulation: Backgrounds, lighting, and environment can be changed to create fake scenarios.

Why Deepfake Threats Are More Serious in 2025

1. Technology Has Become Accessible

- In 2020, deepfake creation required advanced programming skills.

- In 2025, easy-to-use mobile apps and websites allow anyone to generate realistic deepfakes within minutes.

2. Higher Accuracy, Lower Detection Rates

- AI-generated faces and voices are now so advanced that even trained analysts sometimes struggle to spot fakes.

- Automated detection tools are in a constant arms race with deepfake generators.

3. Integration with Other Cyber Threats

- Deepfakes are now combined with phishing, identity theft, and social engineering scams, making them harder to detect and more dangerous.

Real-World Deepfake Incidents

1. CEO Voice Scam

In late 2024, a finance officer at a multinational company received a call from someone who sounded exactly like the CEO, instructing them to transfer $20 million to a supplier. The voice turned out to be deepfake audio generated from recordings found online.

2. Political Disinformation

During an election campaign in Europe, a deepfake video of a candidate making offensive remarks went viral before fact-checkers could intervene, causing severe damage to public trust.

3. Revenge Deepfakes

Cybercriminals have used deepfakes to place individuals in explicit or compromising situations, leading to blackmail, harassment, and reputational harm.

The Main Threats of Deepfakes

1. Identity Theft

Deepfakes can replicate your face or voice for fraudulent activities, from opening bank accounts to bypassing biometric security.

2. Financial Fraud

Cybercriminals use deepfaked voices to trick employees into authorizing wire transfers or providing sensitive information.

3. Political Manipulation

Deepfake videos can be used to spread misinformation, sway public opinion, or incite unrest.

4. Reputational Damage

A fake video showing you in a compromising situation can ruin personal relationships, careers, and social standing.

5. Psychological Manipulation

Deepfakes can influence how people perceive truth, leading to confusion, mistrust, and increased polarization.

How to Spot a Deepfake in 2025

Even with advanced AI, deepfakes often leave behind subtle clues:

- Unnatural Eye Movement – Deepfake subjects sometimes blink irregularly or have a glassy, fixed stare.

- Lighting Mismatch – Shadows or highlights may not match the environment.

- Lip-Sync Errors – Slight mismatches between spoken words and lip movement.

- Skin Texture and Artifacts – Blurring, smudging, or unnatural smoothness in certain facial areas.

- Inconsistent Background Sounds – Audio tone or ambient noises that don’t match the visual setting.
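
The clues above can be turned into a simple manual checklist. The sketch below is only an illustration in Python: the clue names and the `review_clip` helper are hypothetical, and the thresholds are arbitrary assumptions rather than validated detection rules. It tallies which warning signs a viewer has noticed and suggests how cautious to be.

```python
# Hypothetical checklist helper: tallies manually observed warning signs.
# Clue names mirror the list above; the thresholds are illustrative assumptions.
CLUES = (
    "unnatural_eye_movement",
    "lighting_mismatch",
    "lip_sync_errors",
    "skin_artifacts",
    "inconsistent_background_sound",
)


def review_clip(observed):
    """Return (count, advice) for the clue names a viewer noticed."""
    noticed = set(observed)
    unknown = noticed - set(CLUES)
    if unknown:
        raise ValueError(f"unknown clue(s): {sorted(unknown)}")
    count = len(noticed)
    if count == 0:
        advice = "no visible clues; still verify the source"
    elif count == 1:
        advice = "treat with caution and verify through another channel"
    else:
        advice = "several warning signs; do not act on it without verification"
    return count, advice


if __name__ == "__main__":
    print(review_clip({"lip_sync_errors", "lighting_mismatch"}))
```

A clip with no visible clues can still be fake, so the tally is a prompt for further checking, not a verdict.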

Protecting Yourself from Deepfake Threats

1. Limit Public Exposure of Personal Media

- Limit how many high-resolution videos or audio clips of yourself you post online.

- Use privacy settings on social media to limit access to your photos and videos.

2. Use Strong Authentication

- Avoid relying solely on facial or voice recognition for sensitive accounts.

- Use multi-factor authentication (MFA) with strong passwords and authenticator apps.
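
Authenticator apps typically generate their codes with the time-based one-time password (TOTP) algorithm standardized in RFC 6238. The Python sketch below shows roughly how such a code is derived, using only the standard library. The function name `totp` is illustrative, and the demo secret is the RFC's published test key, not a real account secret.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, at_time=None, step=30, digits=6):
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1)."""
    key = base64.b32decode(secret_b32, casefold=True)
    if at_time is None:
        at_time = time.time()
    counter = int(at_time) // step            # number of time steps since the epoch
    msg = struct.pack(">Q", counter)          # counter as 8-byte big-endian integer
    digest = hmac.new(key, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Demo only: base32 encoding of the RFC 6238 test key.
    demo_secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(demo_secret))
```

Because the code depends on a shared secret and the current time, a cloned voice or face alone is not enough to pass this check.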

3. Verify Before Trusting

- If you receive unusual requests from a known contact (especially involving money or sensitive data), confirm via another channel before acting.

4. Stay Informed

- Follow cybersecurity news and government advisories.

- Learn about the latest deepfake detection tools, like Microsoft Video Authenticator or Deepware Scanner.

5. Use AI Detection Tools

- Run suspicious videos through AI deepfake detectors that analyze frame inconsistencies and voice patterns.

6. Legal Action

- Many countries in 2025 have introduced laws criminalizing malicious deepfake use. If you’re targeted, report it to cybercrime authorities.

The Role of Governments and Tech Companies

- Policy Making – Governments are drafting strict regulations against deepfake misuse, requiring watermarking of AI-generated content.

- AI Detection – Big tech firms are investing in advanced detection algorithms and integrating them into social media platforms.

- Public Awareness Campaigns – National campaigns are educating citizens about digital literacy and online verification practices.

Ethical Uses of Deepfakes

Not all deepfakes are harmful. Ethical applications include:

- Film & Gaming – Reviving historical figures or creating realistic CGI characters.

- Language Localization – Synchronizing mouth movements for dubbed movies.

- Education – Simulating historical events or delivering interactive training content.

The challenge is ensuring these uses are transparent and well-regulated.

The Future: What’s Next?

Experts predict that by 2027 almost 50% of online video content could be AI-generated. Detection technologies and public awareness will therefore be more important than ever. Blockchain-based authentication, mandatory AI watermarks, and government verification systems may become standard tools for preserving trust in digital media.
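
One building block behind authentication proposals like these is a cryptographic fingerprint of the original file. The Python sketch below is a simplified illustration, not an actual blockchain or watermarking system. It assumes a publisher has shared the SHA-256 hash of the genuine file through a trusted channel, and the function names `fingerprint` and `matches_published` are hypothetical.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hash of the media bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hex: str) -> bool:
    """Check the local file against a hash the publisher shared (assumed trusted)."""
    local = fingerprint(data).encode()
    published = published_hex.strip().lower().encode()
    return hmac.compare_digest(local, published)


if __name__ == "__main__":
    original = b"genuine video bytes"          # stand-in for real file contents
    published = fingerprint(original)          # what the publisher would share
    print(matches_published(original, published))
    print(matches_published(original + b"tampered", published))
```

A matching hash shows only that the file is byte-for-byte identical to what the publisher fingerprinted. Re-encoding or cropping also changes the hash, so a mismatch alone does not prove malicious manipulation.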

In 2025, deepfake technology has transformed from a niche AI experiment into a powerful force capable of producing hyper-realistic fake videos, images, and audio that are nearly impossible to distinguish from reality, posing significant risks to personal security, political stability, and public trust. Built using deep learning algorithms and generative adversarial networks (GANs), deepfakes can closely mimic someone’s face, voice, and gestures, so they can be used both for harmless entertainment, as in movies and gaming, and for malicious activities such as fraud, identity theft, misinformation, blackmail, and revenge.

The threat is more serious now than ever before because creating a convincing deepfake no longer requires advanced technical skills: free and paid apps let anyone generate one within minutes, while detection technologies struggle to keep pace. Incidents include the 2024 CEO voice scam, in which criminals cloned an executive’s voice to authorize a $20 million transfer; political disinformation campaigns in which fake videos of candidates making offensive remarks went viral before fact-checkers could respond; and revenge deepfakes that place innocent people in compromising situations to extort money or damage reputations.

The main dangers include identity theft (criminals using your face or voice to bypass biometric security), financial fraud (tricking employees into sending money), political manipulation (influencing elections and public opinion), reputational damage (destroying careers and personal relationships), and psychological manipulation (making people doubt reality itself). While the most advanced fakes are seamless, even in 2025 they can sometimes be spotted by subtle signs such as unnatural eye movement, mismatched lighting, imperfect lip-sync, odd skin textures, or inconsistent background audio.

Protecting yourself requires a multi-layered approach: limit the posting of high-quality videos or audio clips of yourself online, use privacy settings to control who can see your media, avoid relying solely on facial or voice recognition for security by enabling multi-factor authentication with strong passwords and authenticator apps, verify unusual requests through separate communication channels, stay informed about new scams and detection tools like Microsoft Video Authenticator or Deepware Scanner, and, when possible, run suspicious media through AI detectors to analyze inconsistencies.

Governments and tech companies are stepping up efforts by passing laws against malicious deepfakes, mandating AI-generated content watermarking, funding advanced detection systems, and launching public awareness campaigns to promote digital literacy. Ethical uses, such as reviving historical figures in education, improving film dubbing, or creating immersive virtual simulations, still show the technology’s creative potential when used transparently and with consent.

However, with experts predicting that nearly half of all online videos by 2027 could be AI-generated, the line between truth and fiction will blur further, making critical thinking, verification habits, and authentication systems more vital than ever. In short, deepfakes are here to stay, and while their dangers are escalating in scale and sophistication, awareness, vigilance, and the use of protective technologies can still empower individuals to navigate the online world safely without falling victim to AI-driven deception.

In 2025, deepfake technology has evolved into one of the most pressing cybersecurity threats, moving far beyond its early experimental stage to become a powerful tool capable of creating audio, video, and images so realistic that even experts sometimes struggle to distinguish them from genuine recordings. The technology, built using deep learning algorithms and generative adversarial networks (GANs), can serve creative and harmless purposes in entertainment, gaming, education, or advertising, but it has also become a dangerous weapon in the hands of cybercriminals, scammers, political manipulators, and blackmailers.

Unlike a few years ago, when creating a convincing deepfake required advanced technical knowledge and expensive equipment, in 2025 user-friendly apps and online platforms allow almost anyone to generate realistic fake videos or voice recordings within minutes. This has led to a dramatic increase in incidents where these AI-generated forgeries are used to harm individuals, companies, or entire societies. Real-world examples include the 2024 “CEO voice” scam, where fraudsters cloned an executive’s voice to trick a finance officer into transferring $20 million; political disinformation campaigns, where deepfake videos of candidates making offensive or incriminating statements spread like wildfire before fact-checkers could debunk them; and revenge deepfakes, where innocent individuals’ faces are placed in compromising or explicit scenarios to destroy reputations or extort money.

The dangers are multifaceted. Deepfakes can facilitate identity theft by replicating someone’s face or voice to bypass biometric security, enable financial fraud by imitating trusted voices to authorize wire transfers, spread political manipulation and misinformation that undermine democracy, inflict reputational damage that can ruin personal and professional lives, and even create psychological manipulation on a societal scale by making people question the very idea of truth. This is why spotting and combating them has become a critical skill.

Though AI-generated content is getting harder to detect, some subtle giveaways still exist, such as unnatural eye movements or blinking patterns, mismatches between lighting and shadows, slight lip-sync errors, odd skin textures or smoothing, and background noises that do not align with the visual environment. Because creators are constantly improving the realism of deepfakes, detection tools and awareness campaigns must evolve at the same pace.

Protecting yourself in this environment requires adopting proactive strategies: limiting the amount of high-quality personal media, especially videos and voice recordings, that you share publicly; tightening privacy settings on social media to control who can view or download your content; avoiding overreliance on facial or voice recognition as the sole means of authentication by implementing multi-factor authentication (MFA) with strong, unique passwords and authenticator apps; verifying any unusual or urgent requests, particularly those involving financial transactions, through a separate communication channel before taking action; staying informed about emerging threats through credible cybersecurity sources; and leveraging deepfake detection tools like Microsoft Video Authenticator, Sensity AI, or Deepware Scanner to analyze suspicious media.

On a broader scale, governments and technology companies in 2025 are responding to the deepfake crisis through new laws that criminalize malicious use and mandate the watermarking or labeling of AI-generated content, and through investment in advanced detection algorithms integrated directly into social media and messaging platforms. Public awareness campaigns promote digital literacy and encourage citizens to question, verify, and fact-check online media before believing or sharing it.

Despite the dangers, deepfakes are not inherently bad. There are ethical and innovative applications, such as reviving historical figures for educational purposes, creating lifelike virtual assistants, producing more natural lip-synced translations in film dubbing, or crafting immersive experiences in virtual reality training programs, but these uses must be transparent, consensual, and carefully regulated to prevent abuse.

The future will likely bring even greater challenges, as experts predict that by 2027 nearly half of all video content online could be AI-generated, blurring the line between authentic and synthetic media to a degree that will make verification and trust even harder to establish. Individuals will therefore need to develop strong critical thinking skills, adopt verification habits, and embrace security technologies that authenticate content before it is consumed or shared.

Ultimately, deepfakes represent both a technological marvel and a societal hazard. While their threats are real and growing more sophisticated by the year, individuals who stay informed, apply layered security measures, verify sources, and use detection tools will be far better equipped to navigate a digital landscape where seeing and hearing something is no longer proof that it is real. Vigilance and digital literacy are now as essential as passwords in protecting one’s identity, finances, and reputation online.

Conclusion

Deepfakes are here to stay, but so are the tools and strategies to fight them. In 2025, protecting yourself means thinking critically about what you see and hear online, verifying before acting, and using advanced authentication methods. The digital world may be getting more deceptive, but with vigilance and the right technology, individuals can still maintain control over their identities and security.

Q&A Section

Q1 :- What is a deepfake?

Ans :- A deepfake is a highly realistic AI-generated video, image, or audio that mimics a person’s appearance or voice using deep learning algorithms.

Q2 :- Why are deepfakes more dangerous in 2025?

Ans :- Because the technology is more accurate, easier to access, and harder to detect, making it a powerful tool for fraud, misinformation, and identity theft.

Q3 :- How can I spot a deepfake?

Ans :- Look for signs like unnatural eye movement, lighting mismatches, lip-sync errors, blurred textures, and inconsistent background sounds.

Q4 :- Can deepfakes be used legally?

Ans :- Yes, when used ethically for purposes like movies, education, or translation, and with clear disclosure that the content is AI-generated.

Q5 :- What’s the best way to protect myself from deepfakes?

Ans :- Limit personal media exposure, use multi-factor authentication, verify unusual requests, and stay informed about detection tools and cybersecurity updates.

Similar Articles

Find more relatable content in similar Articles

Data Centers and the Planet: M..

As cloud computing becomes the.. Read More

Protecting Kids in the Digital..

In an increasingly connected w.. Read More

Digital DNA: The Ethics of Gen..

Digital DNA—the digitization a.. Read More

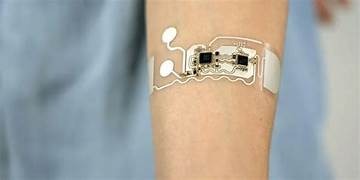

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.