Edge AI vs Cloud AI: Which One Is Dominating in 2025?

In 2025, Edge AI and Cloud AI are shaping the future of intelligent computing—each dominating its own realm. While Edge AI excels in real-time, privacy-sensitive, and low-latency tasks, Cloud AI powers large-scale analytics and deep learning models. This article explores their differences, strengths, and how a hybrid approach is redefining dominance in the AI ecosystem.

✨ Raghav Jain

Introduction

As artificial intelligence (AI) continues to evolve at a staggering pace, the debate between Edge AI and Cloud AI has intensified. Both paradigms offer immense possibilities, yet their applicability varies greatly depending on the use case, latency demands, security requirements, and scalability. By 2025, technological advancements and increasing adoption across industries have shaped this contest into a more defined battlefield. This article delves deep into the distinctions, advantages, limitations, and current dominance of Edge AI and Cloud AI in 2025.

Understanding the Basics

What is Edge AI?

Edge AI refers to the deployment of artificial intelligence algorithms directly on devices such as smartphones, IoT sensors, cameras, and drones — essentially, closer to where the data is generated. The “edge” implies computing at the source without relying heavily on centralized cloud systems.

What is Cloud AI?

Cloud AI refers to running AI models on powerful cloud-based infrastructures hosted by platforms like AWS, Microsoft Azure, or Google Cloud. These systems centralize data processing and leverage vast compute power to train and deploy complex AI models.

Core Differences Between Edge AI and Cloud AI

| Feature | Edge AI | Cloud AI |
| --- | --- | --- |
| Latency | Ultra-low; real-time processing | Higher latency due to data travel |
| Connectivity | Works offline or with intermittent access | Requires constant internet connection |
| Power Consumption | Optimized for low-power devices | High power due to centralized processing |
| Data Privacy | High – data stays local | Lower – data is sent to external servers |
| Scalability | Limited by device capabilities | Highly scalable |
| Cost | Lower operating cost per device | Higher due to data transfer and compute |

Rise of Edge AI in 2025

Edge AI has seen explosive growth in 2025, especially in sectors requiring real-time decision-making, such as:

- Autonomous vehicles: Where milliseconds matter

- Industrial automation: For instant fault detection

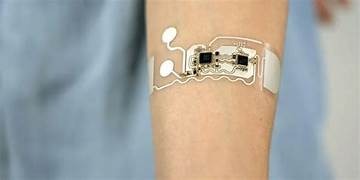

- Healthcare wearables: Enabling patient monitoring without latency

- Smartphones and AR devices: For privacy-sensitive, real-time feedback

Major chip manufacturers like NVIDIA, Qualcomm, and Intel have released specialized AI chips designed for edge computing, reducing the power and size footprint dramatically. These chips enable devices to run complex deep learning models with surprising efficiency.

Edge AI: Driving Forces in 2025

- 5G and Beyond: Reduced latency in network communications enhances edge computing even further.

- Privacy Regulations: Stringent data protection laws (such as the EU's GDPR) push for more localized data handling.

- Sustainability Goals: Edge devices consume less power compared to always-on cloud communication.

Cloud AI’s Unmatched Strength in 2025

Despite the edge revolution, Cloud AI remains a powerhouse — especially in training large-scale models and managing big data pipelines.

Key Areas Where Cloud AI Leads:

- Natural Language Processing (NLP): For large-scale models like GPT or Gemini

- Enterprise-level analytics: Processing petabytes of data

- AI-as-a-Service platforms: Enabling startups to access powerful tools

- Training of Foundation Models: Large language models and multimodal AI are still trained in the cloud due to their resource requirements

Cloud providers have also embraced hybrid AI models, combining edge processing for inference and cloud platforms for retraining and updates.

Hybrid AI Architectures: The Best of Both Worlds

2025 is witnessing a shift toward hybrid AI — a combination where:

- Edge handles inference (real-time decision-making)

- Cloud manages training, orchestration, and updates

This model has gained traction in:

- Smart cities

- Retail analytics

- Remote healthcare

- Precision agriculture

For instance, a drone may process crop data on-site (edge inference), but upload summaries to the cloud for seasonal trend analysis.
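The division of labor in that drone example can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a real drone stack: `Reading`, `edge_infer`, `summarize`, the 0.5 stress threshold, and the sample values are all hypothetical, and the "upload" is simply a returned dictionary standing in for a cloud call.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    plot_id: str
    ndvi: float  # vegetation index in [0, 1]; a hypothetical sensor value


def edge_infer(reading: Reading, stress_threshold: float = 0.5) -> bool:
    """Runs on the device: flag a plot as stressed without any network call."""
    return reading.ndvi < stress_threshold


def summarize(readings: list[Reading]) -> dict:
    """Builds the compact summary that would be uploaded to the cloud."""
    flagged = [r.plot_id for r in readings if edge_infer(r)]
    return {
        "plots_scanned": len(readings),
        "stressed_plots": flagged,
        "mean_ndvi": round(mean(r.ndvi for r in readings), 3),
    }


# Hypothetical readings collected during one flight.
readings = [Reading("A1", 0.72), Reading("A2", 0.41), Reading("A3", 0.65)]
summary = summarize(readings)  # only this small dict would leave the device
print(summary)
```

The per-plot decision happens locally, while the cloud only ever sees the aggregate, which is the part that matters for seasonal trend analysis.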

Edge AI vs Cloud AI Market Share in 2025

According to recent market reports:

- The Edge AI market is valued at $32.5 billion in 2025, growing at a CAGR of 21%

- The Cloud AI market is valued at $175 billion in 2025, growing at a CAGR of 18%

While Cloud AI remains larger in absolute terms, Edge AI is growing faster, particularly in consumer and industrial applications. AI chip sales for edge applications have increased by 44% year over year.
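To see what those growth rates imply, here is a small compounding sketch. It uses only the figures quoted above and assumes, as a simplification, that both CAGRs stay constant; the `project` helper and the chosen horizons are illustrative.

```python
def project(value_billion: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return value_billion * (1 + cagr) ** years


EDGE_2025, EDGE_CAGR = 32.5, 0.21     # figures quoted in the article
CLOUD_2025, CLOUD_CAGR = 175.0, 0.18  # figures quoted in the article

for years in (0, 5, 10):
    edge = project(EDGE_2025, EDGE_CAGR, years)
    cloud = project(CLOUD_2025, CLOUD_CAGR, years)
    print(f"{2025 + years}: edge ${edge:,.1f}B, cloud ${cloud:,.1f}B, "
          f"edge/cloud = {edge / cloud:.2f}")
```

Under these assumptions the edge-to-cloud ratio rises only gradually: a three-point difference in growth rate narrows a roughly fivefold gap slowly, so "growing faster" does not mean "about to overtake".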

Challenges in Each Approach

Edge AI Challenges

- Limited hardware capacity for large models

- Fragmented deployment environments

- Complex update mechanisms

Cloud AI Challenges

- Data privacy and ownership concerns

- High operational costs for continuous streaming

- Latency issues in real-time use cases

Real-World Examples in 2025

Edge AI in Action:

- Apple Vision Pro: Uses on-device AI for gesture recognition

- Tesla’s Autopilot: Processes images directly through onboard AI chips

- Smart surveillance: Cameras identify threats locally without cloud dependency

Cloud AI in Action:

- Amazon Personalize: Real-time product recommendations powered by cloud

- ChatGPT or Gemini Pro: Runs on large-scale GPU clusters

- SAP and Salesforce AI: Processing enterprise-wide data for predictions

Security and Privacy Concerns

In an age where AI governance is becoming a global issue, Edge AI offers enhanced control. Data stored and processed locally is less prone to interception or misuse. Governments and healthcare providers especially favor edge for sensitive data.

However, Cloud AI vendors have made strides with confidential computing, end-to-end encryption, and zero-trust architectures to address security concerns.

Developer and Ecosystem Support in 2025

- Edge AI Frameworks: TensorFlow Lite, ONNX Runtime, OpenVINO, MediaPipe

- Cloud AI Platforms: AWS SageMaker, Google Vertex AI, Azure Machine Learning

Edge AI is also supported by a growing body of MLOps tooling for edge deployments and by model compression techniques such as pruning and quantization.
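To make pruning and quantization concrete, here is a minimal pure-Python sketch of magnitude pruning followed by symmetric 8-bit quantization. It is an illustration of the general idea only, not how TensorFlow Lite, ONNX Runtime, or any other framework implements it; the weights and the 0.05 threshold are made up.

```python
def prune(weights: list[float], threshold: float) -> list[float]:
    """Magnitude pruning: zero out weights whose absolute value is small."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -0.03, 0.40, -1.27, 0.01]  # hypothetical layer weights
pruned = prune(weights, threshold=0.05)     # the two smallest weights become 0.0
q, scale = quantize_int8(pruned)            # integers plus one float scale
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(q, scale, max_error)
```

Each weight is stored as one small integer instead of a 32-bit float, and the rounding error per weight is bounded by half the scale, which is why quantized models can fit on constrained devices with limited accuracy loss.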

The Verdict: Who's Dominating in 2025?

In terms of ubiquity and influence, Edge AI is quickly coming to dominate sectors like manufacturing, autonomous mobility, and healthcare wearables. The number of deployed edge devices is expected to cross 75 billion by the end of 2025.

Yet Cloud AI continues to dominate in raw computational capacity, training, and enterprise intelligence applications. There is no single winner: both are co-dominating in their respective domains, with hybrid models becoming the new normal.

In 2025, the debate between Edge AI and Cloud AI has matured from a futuristic concept into a critical decision point for industries navigating artificial intelligence deployments at scale. Both paradigms represent distinct approaches to data processing and intelligent decision-making, but their relevance and impact vary considerably depending on factors such as latency, data privacy, computational intensity, energy efficiency, and overall application environment. Edge AI, which processes data directly on local devices (smartphones, wearables, industrial robots, surveillance cameras, or autonomous vehicles), has seen tremendous traction due to its ability to deliver ultra-low latency and function even in scenarios with limited or no internet connectivity. This decentralized model allows AI inference to occur close to the data source, reducing the need to transmit sensitive information to external servers, a feature that is increasingly valuable amid rising concerns around data privacy, security breaches, and regional data compliance regulations such as the EU's GDPR and India's Digital Personal Data Protection Act. On the other hand, Cloud AI remains the backbone of large-scale artificial intelligence systems that require massive processing power for model training, orchestration, and deployment. Platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud have continued to dominate the AI training landscape, enabling organizations to train and fine-tune large foundation models such as those behind ChatGPT, Gemini, and Meta’s LLaMA, which have billions of parameters and are trained on enormous datasets that edge hardware simply cannot handle.
Edge AI excels when fast decision-making is essential—for example, in autonomous vehicles where the delay of even a few milliseconds could mean the difference between safety and disaster; in healthcare devices where real-time vital sign monitoring must occur even in disconnected environments; and in smart factories where machinery needs to react instantly to detected faults without waiting for cloud instructions. Cloud AI, conversely, is indispensable in areas like fraud detection, recommendation engines, natural language processing, financial forecasting, and enterprise resource planning, where extensive historical data and powerful models are necessary to generate meaningful insights. While Edge AI is constrained by the computational and memory limits of embedded systems, rapid advancements in chip design—led by companies like NVIDIA (Jetson Nano), Qualcomm (Snapdragon Neural Processing Units), and Intel (Movidius)—have narrowed this gap significantly, enabling edge devices to support quantized versions of deep neural networks and run them with impressive accuracy and speed. At the same time, cloud infrastructure is becoming more specialized with custom AI accelerators like Google’s TPU and AWS Inferentia chips, which offer high performance at lower costs. Interestingly, the future does not lie in one model replacing the other, but in hybrid AI architectures that intelligently distribute workloads between the edge and the cloud. In these systems, the edge handles immediate inference tasks—like object recognition in a drone’s camera feed—while the cloud is responsible for periodic retraining of models based on aggregated edge data, updates, coordination between multiple nodes, and overarching analytics. This hybrid approach ensures that systems are both responsive and intelligent, combining the best of real-time action and long-term insight. 
For instance, in precision agriculture, edge-enabled drones monitor soil conditions and plant health in real time while uploading periodic reports to the cloud for trend analysis, helping farmers make strategic decisions about crop rotation or irrigation. In retail, smart shelves equipped with edge AI can track inventory movements and customer behavior instantly, while the cloud processes this data to optimize supply chains and promotions across all stores. According to recent market analysis, while the global Cloud AI market is valued at approximately $175 billion in 2025 with strong enterprise adoption and a CAGR of 18%, the Edge AI market—though smaller in value at around $32.5 billion—is growing at a faster rate of 21% CAGR, reflecting the surging demand for decentralized intelligence across industries. One major advantage driving this growth is energy efficiency; edge devices are optimized for lower power consumption compared to always-on cloud communication, aligning with global sustainability goals and reducing infrastructure burden. However, challenges remain: Edge AI faces difficulties in model updates, hardware fragmentation, and managing inference reliability across diverse conditions, while Cloud AI struggles with data ownership concerns, higher latency, and bandwidth costs in applications requiring continuous streaming. Notably, Edge AI is leading in industries like automotive, defense, healthcare, and consumer electronics, where local processing and privacy are paramount. Cloud AI continues to dominate in sectors like finance, insurance, enterprise software, and scientific research. Importantly, both types of AI now benefit from a growing ecosystem of developer tools—TensorFlow Lite, ONNX, MediaPipe, and OpenVINO for edge; AWS SageMaker, Azure ML Studio, and Google Vertex AI for cloud—which simplifies the deployment and maintenance of intelligent systems. 
Moreover, AI governance frameworks emerging in 2025 increasingly favor edge-based processing due to its controllability and ability to align with ethical AI principles. Innovations like federated learning, where models are trained across decentralized data sources without raw data leaving local devices, have further strengthened the case for Edge AI. Cloud AI, meanwhile, has responded with enhanced encryption techniques, confidential computing, and fine-grained access control to mitigate security and compliance concerns. The result is a new AI era in 2025 where dominance is defined not by a single paradigm, but by contextual superiority—Edge AI dominates the physical world where immediacy, autonomy, and privacy are crucial, while Cloud AI rules the digital world of deep insights, scalability, and knowledge creation. This complementary dynamic is reshaping everything from smart cities to personal devices and enterprise platforms. The most successful AI strategies of 2025 are those that don’t choose one over the other but instead embrace the synergy between edge and cloud, treating them not as rivals but as collaborative forces in a distributed intelligence ecosystem.
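Federated learning, mentioned above, is easiest to understand through its aggregation step. The sketch below shows a sample-weighted average of client weights in the style of federated averaging; the function name, the two-parameter "models", and the sample counts are hypothetical, and local training itself is omitted.

```python
def federated_average(
    client_updates: list[tuple[list[float], int]]
) -> list[float]:
    """Weighted average of client weights; each client is (weights, num_samples)."""
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            # Clients with more local data contribute proportionally more.
            averaged[i] += w * n / total_samples
    return averaged


# Hypothetical locally trained weights from three devices, with sample counts.
updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
    ([5.0, 6.0], 60),
]
global_weights = federated_average(updates)  # approximately [4.0, 5.0]
print(global_weights)
```

Only the weight vectors and sample counts reach the aggregator; the raw data behind them stays on each device, which is the property that makes the approach attractive for privacy-sensitive deployments.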

In the year 2025, the evolving landscape of artificial intelligence has given rise to a fascinating dichotomy between two dominant paradigms—Edge AI and Cloud AI—each claiming its own territory in the vast technological ecosystem. These two models represent fundamentally different approaches to AI deployment, processing, and scalability, and understanding their roles is crucial to grasp the future of AI-driven innovation. Edge AI refers to running AI algorithms locally on hardware devices such as smartphones, cameras, autonomous vehicles, drones, or industrial machines, rather than relying on centralized cloud servers. By processing data at or near the source where it is generated, Edge AI minimizes latency, enhances privacy, and reduces the dependency on constant internet connectivity. This decentralized model has rapidly gained traction in 2025, particularly in sectors demanding real-time responses, including autonomous transportation, remote healthcare monitoring, smart surveillance, and manufacturing automation. Devices powered by Edge AI can make decisions in milliseconds, which is critical in time-sensitive scenarios like self-driving cars navigating through traffic or a wearable health device detecting abnormal heart rhythms and sending alerts instantly. On the other hand, Cloud AI still holds a pivotal position in the development and deployment of large-scale AI systems, especially those involving massive datasets, complex computations, and long-term analytics. Cloud-based AI relies on centralized data centers—hosted by giants like Amazon Web Services, Google Cloud, and Microsoft Azure—that provide vast computational power and storage to train, deploy, and maintain sophisticated models such as generative AI systems, enterprise analytics tools, and predictive engines used in finance, retail, and logistics. 
These systems often require access to petabytes of data and benefit from the high scalability and collaborative capabilities that the cloud inherently offers. The distinction, however, is not just in architecture but also in purpose; Edge AI is ideal for inference tasks that need to be fast, private, and operational even in disconnected environments, while Cloud AI is better suited for model training, heavy data processing, and orchestrating AI services across multiple endpoints. Interestingly, 2025 has seen the rise of hybrid AI architectures that blur this line, where edge devices perform inference locally and periodically sync with cloud services for updates, retraining, and global coordination, thereby leveraging the strengths of both systems. For instance, in a smart agriculture setup, drones equipped with Edge AI analyze crop health in real-time while uploading summarized data to cloud platforms that provide seasonal predictions and large-scale resource management strategies. Similarly, in retail, smart shelves and checkout systems use Edge AI to monitor customer interactions and stock levels instantly, whereas Cloud AI manages backend analytics, customer profiling, and inventory forecasts across thousands of locations. While Cloud AI remains dominant in terms of raw market size, valued at approximately $175 billion in 2025, Edge AI is catching up rapidly, with a global market valuation exceeding $32.5 billion and growing at a faster CAGR due to increasing demand in mobile, industrial, and embedded applications. This acceleration is fueled by breakthroughs in chip design, including low-power, high-efficiency AI accelerators from NVIDIA (Jetson series), Qualcomm (Snapdragon AI), and Intel (Movidius), allowing for the deployment of complex neural networks on small devices with limited resources. 
Furthermore, rising global concerns about data sovereignty, bandwidth limitations, and carbon emissions have pushed many organizations toward Edge AI solutions, which offer reduced data transfer and power consumption while ensuring sensitive data remains on the device. Regulations such as the European Union’s GDPR, California’s CCPA and its amendments, and India’s DPDP Act have all emphasized user control over personal data, strengthening the case for localized processing and for Edge AI across regulated industries like healthcare, banking, and government services. Meanwhile, Cloud AI is not standing still; it has evolved to meet modern demands by incorporating confidential computing, federated learning, and serverless AI platforms that offer better privacy, elasticity, and developer experience. Generative AI models such as OpenAI’s GPT-5 or Google’s Gemini Ultra, which power conversational agents, content creation tools, and intelligent business assistants, continue to rely heavily on cloud infrastructure due to their enormous computational requirements. These models require thousands of GPUs working in parallel, something that edge devices are unlikely to accommodate anytime soon, which reaffirms the cloud’s irreplaceable role in the AI ecosystem. Another aspect contributing to this dual dominance is the developer and ecosystem support for both environments. In 2025, frameworks like TensorFlow Lite, PyTorch Mobile, ONNX Runtime, and Apple’s Core ML have simplified Edge AI development, while platforms like AWS SageMaker, Google Vertex AI, and Azure ML provide end-to-end tools for managing cloud AI pipelines, from data ingestion to model deployment and monitoring. As industries adapt to an AI-first world, decision-makers are increasingly choosing architecture based on specific goals: low-latency local processing with Edge AI, global scalability with Cloud AI, or a hybrid of both. 
In autonomous driving, for example, Edge AI handles immediate tasks like obstacle recognition and lane detection, while Cloud AI is used to analyze fleet-wide data trends and improve models over time. In healthcare, diagnostic devices can interpret medical scans locally to provide instant feedback, while anonymized patient data is aggregated in the cloud to enhance medical research. This dual-track approach reflects a mature understanding of AI’s role in society—not as a one-size-fits-all solution, but as a suite of interoperable technologies optimized for different contexts. Edge AI is dominating environments where speed, privacy, and reliability are paramount, while Cloud AI continues to dominate where scale, collaboration, and intelligence depth are essential. As we progress further into 2025, we see a convergence where intelligent systems no longer rely exclusively on edge or cloud, but instead operate fluidly across both, enabling use cases that are more resilient, responsive, and responsible. The ultimate winner, therefore, isn’t Edge AI or Cloud AI in isolation, but the synergy between them, shaping an AI landscape that is both decentralized and interconnected, private yet collaborative, and agile without compromising on power. This balanced coexistence and mutual reinforcement are what define dominance in today’s AI-driven economy—not merely who processes data faster or who stores more, but who can integrate capabilities more intelligently and ethically in a world that demands both immediacy and insight.
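The goal-based choice between edge, cloud, and hybrid described above can be written down as a simple rule of thumb. The sketch below is purely illustrative: the `Workload` fields, the 100 ms cloud round-trip default, and the rules themselves are assumptions made for the example, not an established decision procedure.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_latency_ms: float    # how quickly a response is needed
    must_work_offline: bool  # must keep running without connectivity
    data_is_sensitive: bool  # raw data should stay on the device
    needs_training: bool     # involves training or retraining models


def choose_architecture(w: Workload, cloud_round_trip_ms: float = 100.0) -> str:
    """Return 'edge', 'cloud', or 'hybrid' using illustrative rules only."""
    needs_edge = (
        w.must_work_offline
        or w.data_is_sensitive
        or w.max_latency_ms < cloud_round_trip_ms
    )
    if needs_edge and w.needs_training:
        return "hybrid"  # infer locally, retrain centrally
    return "edge" if needs_edge else "cloud"


obstacle_detection = Workload(20, True, False, False)
fleet_analytics = Workload(60_000, False, False, True)
health_wearable = Workload(50, True, True, True)

for name, w in [("obstacle detection", obstacle_detection),
                ("fleet analytics", fleet_analytics),
                ("health wearable", health_wearable)]:
    print(name, "->", choose_architecture(w))
```

Real decisions also weigh cost, hardware availability, and regulatory detail, but the shape is the same: constraints on latency, connectivity, and data locality pull work toward the edge, while training and large-scale aggregation pull it toward the cloud.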

Conclusion

- Edge AI is thriving due to real-time capabilities, offline operation, and privacy compliance.

- Cloud AI is unmatched for model training, large-scale data processing, and complex AI services.

- The industry is moving toward hybrid AI solutions to harness the best of both worlds.

- In 2025, Edge AI is dominating the physical world, while Cloud AI continues to dominate the data world.

Q&A Section

Q1:- What is the main difference between Edge AI and Cloud AI?

Ans:- Edge AI processes data locally on the device, while Cloud AI processes data in centralized data centers requiring internet connectivity.

Q2:- Why is Edge AI gaining popularity in 2025?

Ans:- Because of its real-time processing, enhanced privacy, and low latency – especially beneficial in healthcare, automotive, and IoT applications.

Q3:- Is Cloud AI becoming obsolete due to Edge AI?

Ans:- No. Cloud AI is still essential for model training, big data analysis, and large-scale services. Edge AI complements it rather than replaces it.

Q4:- Which industries benefit most from Edge AI?

Ans:- Automotive, manufacturing, healthcare, defense, and smart home technologies benefit greatly from Edge AI’s local processing and real-time capabilities.

Q5:- What is Hybrid AI?

Ans:- Hybrid AI combines Edge and Cloud AI — using edge for inference and cloud for training and coordination — delivering the best performance and scalability.


© 2025 Copyrights by rTechnology. All Rights Reserved.