The Rise of Humane AI: Will 2025 Be the Year of Emotionally Intelligent Machines?

As AI evolves beyond logic into the realm of emotion, 2025 marks a transformative year where machines are no longer just smart—they're emotionally aware. Humane AI, capable of sensing and responding to human feelings, is revolutionizing healthcare, education, and daily life while raising urgent questions about ethics, privacy, and the true nature of empathy.

✨ Raghav Jain

Introduction

Artificial Intelligence (AI) is no longer a distant dream of sci-fi. In the last decade, it has infiltrated nearly every aspect of our lives—be it virtual assistants, autonomous vehicles, predictive algorithms in healthcare, or personalized shopping experiences. But now, as we step into 2025, a new frontier is emerging: humane AI—machines that are not just smart, but emotionally intelligent. These are systems designed to recognize, understand, and respond to human emotions with empathy and contextual awareness.

Humane AI is not merely a technical achievement; it's a philosophical shift. It represents a transition from AI systems that solve logical problems to those that relate to human beings. From chatbots that comfort the lonely to virtual therapists that understand anxiety, emotionally intelligent machines are poised to redefine the human-machine dynamic. But will 2025 truly be the year of emotionally intelligent AI? Let's explore.

What is Humane AI?

Humane AI refers to the design and deployment of artificial intelligence systems that incorporate emotional intelligence, empathy, ethics, and human-centric values. Unlike traditional AI focused solely on tasks and efficiency, humane AI seeks to:

- Understand human emotions through voice, facial expression, and biometric data.

- Interpret context behind words and actions.

- Respond with appropriate emotional cues—such as sympathy, humor, or encouragement.

- Prioritize human well-being and respect ethical boundaries.

Emotionally intelligent AI systems combine affective computing, natural language processing (NLP), computer vision, and machine learning to emulate the subtleties of human communication.

How Is Emotional Intelligence Engineered in Machines?

Developing emotional intelligence in machines is one of AI’s most complex challenges. Here are the primary components that make this possible:

1. Affective Computing

Coined by Rosalind Picard at MIT, affective computing is the field of AI that focuses on detecting and interpreting emotional states from physiological and behavioral data, such as:

- Facial expression analysis (e.g., smiling, frowning)

- Voice tone modulation

- Eye tracking and gaze detection

- Heart rate and skin temperature
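
To make the idea concrete, here is a minimal sketch of how signals like those listed above might be mapped to a coarse emotional label. It uses a nearest-centroid rule; the feature layout, the three labels, and every number in `CENTROIDS` are invented for illustration and are not drawn from any real system, which would learn such values from labeled data.

```python
import math

# Hypothetical centroids in a normalized feature space. Every value here is
# invented for illustration: (heart_rate_z, skin_temp_z, smile_intensity).
CENTROIDS = {
    "calm": (-0.5, 0.2, 0.3),
    "stressed": (1.2, -0.6, 0.0),
    "happy": (0.3, 0.3, 0.9),
}


def classify(features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))


if __name__ == "__main__":
    # Elevated heart rate, cooler skin, almost no smile.
    print(classify((1.0, -0.5, 0.1)))
```

A rule this simple only shows the shape of the problem: sensor readings go in, a guessed emotional state comes out, and the guess is only as good as the data behind the centroids.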

2. Natural Language Understanding (NLU)

Language is often ambiguous. Emotionally intelligent AI uses deep learning models trained on large corpora of human text and speech to infer tone, intent, and emotional subtext. This lets machines pick up on sarcasm, humor, and grief even when they are not stated explicitly, although such implicit cues remain hard to detect reliably.
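
A small sketch can show why subtext is the hard part. The code below is deliberately not a deep learning model: it is a keyword lookup, with a tiny invented lexicon (`LEXICON` is purely illustrative), used here as a stand-in to show what a surface-level approach gets wrong.

```python
import re

# Tiny invented lexicon mapping words to emotion labels (illustrative only).
LEXICON = {
    "great": "joy", "love": "joy", "thanks": "joy",
    "sad": "sadness", "lost": "sadness", "miss": "sadness",
    "angry": "anger", "furious": "anger", "unacceptable": "anger",
}


def emotion_counts(text):
    """Count how many lexicon words of each emotion appear in the text."""
    counts = {}
    for word in re.findall(r"[a-z']+", text.lower()):
        label = LEXICON.get(word)
        if label:
            counts[label] = counts.get(label, 0) + 1
    return counts


def dominant_emotion(text):
    """Return the most frequent emotion label, or 'neutral' if none match."""
    counts = emotion_counts(text)
    return max(counts, key=counts.get) if counts else "neutral"


if __name__ == "__main__":
    print(dominant_emotion("I miss her and feel so sad"))
    # A sarcastic complaint: the keyword approach reads it as positive.
    print(dominant_emotion("Oh great, another outage. Love that."))
```

The second example is labeled as joy because the words "great" and "love" appear, even though a human reader hears frustration. Closing that gap between words and intent is what models trained on context are meant to do.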

3. Multimodal Learning

Human emotion is rarely conveyed through one channel alone. AI systems are increasingly relying on multimodal data—combining speech, facial cues, body language, and context—to provide a holistic emotional analysis.
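
One common way to combine channels is late fusion: each modality produces its own probability estimate, and the estimates are merged with a weighted average. The sketch below assumes this approach; the modality names, weights, and probabilities are hypothetical, and real systems may instead fuse features earlier inside a single model.

```python
def fuse(modality_probs, weights):
    """Weighted average of per-modality probability distributions (late fusion)."""
    labels = next(iter(modality_probs.values())).keys()
    total_weight = sum(weights[name] for name in modality_probs)
    return {
        label: sum(weights[name] * probs[label]
                   for name, probs in modality_probs.items()) / total_weight
        for label in labels
    }


# Hypothetical outputs of three separate per-modality classifiers.
PREDICTIONS = {
    "speech": {"calm": 0.6, "frustrated": 0.4},
    "face": {"calm": 0.2, "frustrated": 0.8},
    "text": {"calm": 0.5, "frustrated": 0.5},
}
# Assumed weights: here the facial channel is trusted twice as much.
WEIGHTS = {"speech": 1.0, "face": 2.0, "text": 1.0}

if __name__ == "__main__":
    fused = fuse(PREDICTIONS, WEIGHTS)
    print(max(fused, key=fused.get), fused)
```

In this toy case the voice alone suggests calm, but the combined estimate leans toward frustration, which is the point of looking at more than one channel.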

4. Reinforcement Learning from Human Feedback (RLHF)

In RLHF, human trainers rate or rank candidate responses, and the model is then optimized toward the responses people prefer. This feedback loop lets machines learn socially appropriate responses, making AI not only emotionally aware but socially adaptive.
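
The feedback step can be sketched as a small reward model trained on pairwise human preferences, using the standard loss of minus the log of the sigmoid of the reward difference. This covers only the reward-modeling half of RLHF; the later step of optimizing a language model against the learned reward is omitted. The two features and the preference pairs are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Linear reward: dot product of weights and response features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit weights so that human-preferred responses score higher.

    pairs: list of (chosen_features, rejected_features).
    Minimizes -log(sigmoid(reward(chosen) - reward(rejected))) by
    stochastic gradient descent.
    """
    weights = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            scale = 1.0 - sigmoid(margin)  # magnitude of the loss gradient
            for i in range(dim):
                weights[i] += lr * scale * (chosen[i] - rejected[i])
    return weights


# Hypothetical features per response: (acknowledges_feeling, is_dismissive).
PAIRS = [
    ((1.0, 0.0), (0.0, 1.0)),  # trainer preferred the acknowledging reply
    ((1.0, 0.0), (0.0, 0.0)),
    ((0.0, 0.0), (0.0, 1.0)),  # a neutral reply beat a dismissive one
]

if __name__ == "__main__":
    print(train_reward_model(PAIRS, dim=2))
```

After training, the weight on acknowledging a feeling is positive and the weight on being dismissive is negative, so the model has absorbed the trainers' preferences without being given an explicit rule.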

Real-World Applications of Humane AI in 2025

The evolution of emotionally intelligent AI has led to its deployment in several critical domains:

1. Healthcare and Mental Wellness

AI therapy chatbots such as Woebot and Wysa already support millions of users with techniques based on cognitive behavioral therapy (CBT). In 2025, we’re seeing more emotionally attuned AI counselors capable of detecting distress, adjusting tone, and delivering nuanced mental health support, in some cases integrating with biosensors to monitor anxiety levels in real time.

2. Customer Service

Companies like Google, Amazon, and Salesforce have launched empathetic AI bots that adjust their tone and responses based on customer frustration levels. This reduces conflict and improves satisfaction.

3. Education

Emotionally aware tutoring systems can now detect student confusion, boredom, or enthusiasm, adjusting pace and teaching style accordingly. This personalized learning creates a more nurturing educational experience.

4. Companion Robots for the Elderly

Japan and parts of Europe have deployed AI robots in eldercare homes that can recognize emotional needs—offering companionship, reminding patients to take medication, or calling for help during distress.

5. Human Resources

AI is now assisting HR departments in evaluating candidate emotional alignment with company culture by analyzing micro-expressions and speech patterns during interviews.

The Benefits of Emotionally Intelligent AI

1. Enhanced User Trust

When machines understand and respond with empathy, people are more likely to trust and engage with them.

2. Improved Accessibility

Humane AI supports individuals with autism, anxiety, or communication disorders by adapting to their unique emotional patterns.

3. Efficiency with Compassion

AI can now handle tasks like scheduling or customer support while maintaining a tone of warmth and understanding, increasing productivity without sacrificing humanity.

4. Support in Crisis

Emotionally aware systems can recognize mental health emergencies and trigger appropriate interventions, including alerting caregivers or emergency services.

Challenges and Ethical Dilemmas

Despite its promise, humane AI faces significant hurdles:

1. Data Privacy

Emotion recognition often involves biometric data. Who owns this sensitive emotional data? How is it stored? What if it is hacked or misused?

2. Emotional Manipulation

There’s a thin line between empathetic engagement and emotional exploitation. Can AI be used to manipulate consumer behavior or political opinion under the guise of emotional understanding?

3. Bias in Emotional Recognition

Studies have shown that emotion recognition AI can misinterpret emotions across different cultures, genders, or races—leading to biased outcomes.
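
One basic way to surface this kind of bias is to compare a system's accuracy across demographic groups. The sketch below assumes a labeled evaluation set is available; the group names and records are invented placeholders, not results from any study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Invented evaluation records, for illustration only.
RECORDS = [
    ("group_a", "happy", "happy"),
    ("group_a", "sad", "sad"),
    ("group_a", "angry", "angry"),
    ("group_a", "calm", "calm"),
    ("group_b", "happy", "happy"),
    ("group_b", "sad", "angry"),
    ("group_b", "angry", "angry"),
    ("group_b", "calm", "sad"),
]

if __name__ == "__main__":
    print(accuracy_by_group(RECORDS))
    print(accuracy_gap(RECORDS))
```

A large gap does not explain why the system fails for one group, but it is a signal that the training data or the model needs another look before deployment.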

4. Loss of Human Connection

If people begin to rely on machines for emotional support, will genuine human-to-human relationships diminish?

5. Overdependence and Trust

Blind trust in emotionally intelligent AI could lead to overdependence, especially among vulnerable groups such as the elderly or people with mental illness.

Are We There Yet? The Current State of Humane AI

While impressive progress has been made, current AI is still not truly emotionally intelligent in the human sense. Most systems mimic emotions based on pattern recognition rather than understanding them intrinsically. Their “empathy” is mechanical, not felt. However, the illusion of empathy, if delivered accurately and ethically, is often enough to make interactions smoother and more comforting.

In 2025, emotionally intelligent AI remains a rapidly maturing field—with startups, tech giants, and governments pouring resources into advancing its capabilities. We are entering the early adoption phase, where emotionally intelligent machines are being deployed in controlled, impactful environments.

Artificial Intelligence has long been hailed for its ability to revolutionize industries through speed, precision, and logic, but 2025 is witnessing a shift beyond computation into the realm of emotion, as the rise of humane AI (machines endowed with emotional intelligence) begins to transform how humans and machines interact on a fundamental level. Unlike traditional AI systems that focus solely on performance optimization and decision-making, emotionally intelligent or "humane" AI is designed to perceive, interpret, and respond to human emotional states using technologies like affective computing, multimodal data analysis, natural language processing (NLP), and reinforcement learning guided by human feedback. These systems recognize facial expressions, vocal tone, gestures, eye movements, and even biometric signals such as heart rate variability to deduce emotional context, enabling them to respond in ways that mimic empathy, compassion, and understanding.

In 2025, emotionally intelligent AI is being deployed in several high-impact areas such as healthcare, education, customer support, eldercare, and mental wellness. AI-powered therapy bots like Woebot and Wysa now engage users in emotionally aware dialogues, adapting their tone based on users' stress levels or sadness, offering support through cognitive behavioral therapy techniques. In eldercare, robotic companions capable of recognizing signs of loneliness or distress are increasingly common, especially in countries like Japan where aging populations outpace the caregiving workforce. In education, tutoring systems use emotional cues to detect confusion or frustration, adjusting lesson speed, providing encouragement, or changing teaching strategies to keep students engaged. Similarly, businesses leverage emotionally intelligent AI in customer service to detect customer anger or dissatisfaction and modify responses accordingly to de-escalate conflicts and improve satisfaction. Even hiring processes now employ AI to analyze candidates' micro-expressions, speech tempo, and sentiment to assess personality traits or cultural fit. These examples highlight a growing trend where human-machine interaction is no longer just transactional but relational.

The benefits of humane AI are profound: it enhances user trust, creates more inclusive experiences for individuals with communication disorders or neurodivergence, offers timely mental health support, and ultimately helps bridge the emotional disconnect often associated with digital interfaces.

However, this evolution brings complex ethical dilemmas. Emotionally intelligent AI relies on sensitive data, including biometric and psychological profiles, raising serious privacy concerns about who owns that data, how it is stored, and the potential for misuse. There’s also the troubling possibility of emotional manipulation, where companies or political actors could exploit AI’s understanding of emotions to influence consumer behavior or public opinion subtly and unethically. Furthermore, emotionally intelligent AI still struggles with cultural bias: facial expression recognition systems, for instance, are often trained on Western datasets, leading to misinterpretations of emotions in people from other ethnic or cultural backgrounds. Moreover, as machines become more emotionally “responsive,” some experts worry about humans forming attachments to non-sentient beings, potentially reducing genuine human contact and increasing societal isolation, especially among the vulnerable, such as the elderly and children. There’s also the psychological risk of overtrusting machines that simulate empathy but don’t genuinely experience emotion. While AI can mimic caring behavior with impressive accuracy, it lacks the moral reasoning and lived experience that give true empathy its depth and nuance. Thus, emotionally intelligent AI might offer comfort, but it cannot replace human understanding, at least not yet.

Despite these limitations, 2025 is undoubtedly a turning point. We’ve moved from concept to deployment. What was once experimental is now being embedded into mainstream platforms by tech giants like Google, Microsoft, and Amazon. Innovations in GPT-based language models and transformer architectures have made conversational AI remarkably fluent, while companies like Affectiva, Soul Machines, and Replika are pushing the boundaries of digital empathy. Governments are also taking note, with regulatory frameworks in Europe and Asia beginning to address the ethical management of emotion-based AI, emphasizing transparency, consent, and data governance.

What makes this year different is the confluence of technological readiness, commercial demand, and social acceptance: people now expect machines not just to work but to understand. Emotional responsiveness is becoming a default expectation, not a novelty. As a result, emotionally intelligent machines are shifting from niche applications to being embedded in everyday life.

Yet, despite these breakthroughs, we must acknowledge that current humane AI is still limited in its scope. It recognizes emotional signals and responds accordingly, but it does not understand them with the consciousness or moral grounding that humans do. Its "empathy" is performative: effective, perhaps even therapeutic, but not real in the human sense. Still, that performative empathy, if well-calibrated and ethically used, is proving immensely valuable in contexts where real human support is scarce or unavailable. So while 2025 may not mark the final arrival of emotionally intelligent AI, it will likely be remembered as the year that emotional understanding became a core capability, not just a supplementary feature, in the development of artificial intelligence.

As artificial intelligence rapidly evolves in 2025, a remarkable transformation is underway: machines are no longer limited to logic and computation, but are beginning to demonstrate emotional intelligence, giving rise to what is now termed “humane AI.” This new wave of AI is designed not just to perform tasks but to understand, interpret, and appropriately respond to human emotions, signaling a shift from merely intelligent to emotionally aware machines. Humane AI draws its foundation from affective computing, a field pioneered at the MIT Media Lab, which allows machines to recognize emotional states through data sources like facial expressions, voice tone, gesture analysis, posture, eye movement, and physiological signals such as heart rate or galvanic skin response. Combining this with advancements in natural language processing (NLP), computer vision, multimodal learning, and reinforcement learning from human feedback (RLHF), AI systems can now simulate empathy by adapting responses to match the emotional context of users. For example, a virtual therapist might detect sadness in a user’s tone and respond gently, offering supportive language, or a customer support chatbot might identify frustration and modify its tone to become more conciliatory and helpful.

In 2025, emotionally intelligent AI is no longer speculative; it is actively reshaping industries like healthcare, education, eldercare, retail, and mental health support. AI chatbots like Woebot and Wysa use cognitive behavioral therapy techniques to offer comforting interactions tailored to the user’s emotional state, while in hospitals, AI monitors analyze patient expressions and vitals to alert nurses about signs of discomfort or anxiety. Elderly individuals benefit from companion robots that recognize loneliness or agitation and respond with humor, conversation, or alerts to caregivers, while emotionally adaptive educational platforms adjust teaching style, difficulty level, and pacing based on students’ facial expressions and engagement levels. In retail, customer experience is enhanced through AI systems that track user reactions in real time and adjust product suggestions or marketing messages accordingly. Emotion-aware AI is also making its way into hiring practices, analyzing candidates’ micro-expressions and tone during interviews to assess confidence, sincerity, and cultural fit.

These developments offer significant benefits: increased personalization, improved communication, better mental health accessibility, and stronger human-computer collaboration. However, they also bring forth profound ethical dilemmas, starting with the issue of privacy. Emotionally intelligent machines require large volumes of personal and often biometric data to function effectively, raising concerns over how this data is collected, stored, and used. Who owns our emotional data? Can it be sold, leaked, or exploited for profit? Another concern is emotional manipulation: companies could use AI to exploit users’ moods and vulnerabilities, nudging them toward purchases or decisions without full awareness. Furthermore, these systems often inherit biases present in their training data; AI trained on limited or culturally specific emotional expressions may misinterpret users from diverse backgrounds, leading to harmful or offensive misunderstandings.

The illusion of empathy also poses challenges: while machines can mimic emotional understanding, they do not truly "feel," and yet humans may still form deep emotional attachments to them, which can result in social isolation or unrealistic expectations. Over-reliance on AI for companionship or therapy could displace genuine human relationships, particularly among vulnerable groups like children, the elderly, or those with mental health challenges. Meanwhile, constant exposure to emotionally responsive AI could desensitize people to authentic emotion, skewing social development. There is also the risk of overtrust: if users assume emotionally intelligent AI to be objective or moral, they may defer to its suggestions even when those suggestions are flawed, biased, or inappropriate.

Despite these concerns, the momentum is undeniable: 2025 is the year humane AI is transitioning from experimental technology to daily utility, as the integration of emotional intelligence into mainstream platforms becomes a key competitive advantage. Big tech companies like Google, Microsoft, Meta, and Amazon are already investing in emotion-sensing devices and software; startups like Affectiva, Soul Machines, and Replika are pushing the envelope by developing avatars and chatbots capable of real-time emotional interaction. Governments and ethics boards are scrambling to keep up, proposing frameworks around emotional data transparency, AI accountability, cultural bias mitigation, and digital consent. Meanwhile, public opinion remains cautiously optimistic: users appreciate AI that listens, adapts, and “cares,” even if superficially.

As our homes, cars, phones, and workplaces become infused with emotionally attuned machines, the human-machine relationship is evolving from transactional to relational. Though AI still lacks true consciousness or moral intuition, its ability to perceive and respond to emotions makes it seem more human, and for many, that’s enough to improve quality of life, especially in sectors with limited human support. Whether this development ultimately deepens or diminishes human empathy remains to be seen, but there is no doubt that emotionally intelligent AI will redefine digital interaction. Thus, while 2025 may not yet be the year when machines truly “understand” us in a human sense, it will be remembered as the year that emotional awareness became a defining feature of artificial intelligence systems, marking a historic shift toward a future where our devices don’t just work for us, but also relate to us.

Conclusion

Humane AI—the convergence of emotional intelligence and machine learning—represents the next paradigm shift in artificial intelligence. As AI systems grow in their ability to sense, interpret, and respond to human emotions, they become more integrated into our social, emotional, and ethical fabric. From healthcare to education and customer service to companionship, emotionally intelligent machines are poised to enhance quality of life, increase empathy, and support human needs in novel ways.

However, this evolution must be handled with extreme caution. The power to understand emotions comes with the risk of manipulating them. Data security, bias, transparency, and human oversight must form the ethical backbone of humane AI.

Will 2025 be the year of emotionally intelligent machines?

Perhaps not fully—but it will certainly be remembered as the year we crossed the threshold. We are no longer asking if machines can understand us; we are learning how to make them care, responsibly.

Q&A Section

Q1: What is humane AI?

Ans: Humane AI refers to artificial intelligence systems designed with emotional intelligence, ethical principles, and human-centric values. These systems can recognize and respond to human emotions in a socially appropriate way.

Q2: How do machines recognize emotions?

Ans: Machines use affective computing techniques such as facial recognition, voice analysis, eye tracking, and physiological sensors. These inputs are analyzed using machine learning models to interpret emotions.

Q3: Where is emotionally intelligent AI used in 2025?

Ans: Applications include mental health support bots, empathetic customer service agents, eldercare robots, adaptive educational tutors, and emotion-aware recruitment tools.

Q4: What are the benefits of emotionally intelligent AI?

Ans: Key benefits include enhanced trust, personalized support, better accessibility for differently-abled individuals, and the ability to intervene in emotional crises.

Q5: What are the ethical concerns surrounding humane AI?

Ans: Concerns include emotional data privacy, potential manipulation, bias in emotion detection across cultures, loss of genuine human interaction, and overreliance on machines.

Similar Articles

Find more relatable content in similar Articles

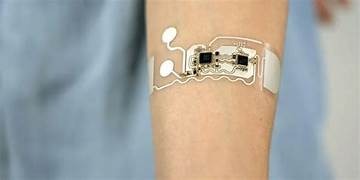

Wearable Health Sensors: The D..

Wearable health sensors are re.. Read More

Digital DNA: The Ethics of Gen..

Digital DNA—the digitization a.. Read More

Data Centers and the Planet: M..

As cloud computing becomes the.. Read More

Protecting Kids in the Digital..

In an increasingly connected w.. Read More

Explore Other Categories

Explore many different categories of articles ranging from Gadgets to Security

Smart Devices, Gear & Innovations

Discover in-depth reviews, hands-on experiences, and expert insights on the newest gadgets—from smartphones to smartwatches, headphones, wearables, and everything in between. Stay ahead with the latest in tech gear

Apps That Power Your World

Explore essential mobile and desktop applications across all platforms. From productivity boosters to creative tools, we cover updates, recommendations, and how-tos to make your digital life easier and more efficient.

Tomorrow's Technology, Today's Insights

Dive into the world of emerging technologies, AI breakthroughs, space tech, robotics, and innovations shaping the future. Stay informed on what's next in the evolution of science and technology.

Protecting You in a Digital Age

Learn how to secure your data, protect your privacy, and understand the latest in online threats. We break down complex cybersecurity topics into practical advice for everyday users and professionals alike.

© 2025 Copyrights by rTechnology. All Rights Reserved.